Overcoming the penetration depth limit in optical microscopy: Adaptive optics and wavefront shaping

Abstract

Despite the unique advantages of optical microscopy for molecule-specific, high-resolution imaging of living structures in both space and time, current applications remain mostly limited to research settings. This is due to the aberrations and multiple scattering induced by the inhomogeneous refractive index boundaries inherent to biological systems. However, recent developments in adaptive optics and wavefront shaping have shown that high-resolution optical imaging is not fundamentally limited to the observation of single cells but can be extended to deep tissue imaging. To provide insight into how these two closely related fields can expand the limits of bioimaging, we review recent progress in their performance and range of applications, as well as potential future research directions for pushing the limits of deep tissue imaging.

1. Introduction

Advances in novel optical imaging techniques, together with the invention of more powerful biomarkers, have opened observation windows that previous generations of researchers could only dream of. New calcium-sensitive dyes and proteins with superior brightness, contrast, and temporal sensitivity now make noninvasive measurement of neural activity in living mammalian brains an everyday experiment.1 So-called “optical electrophysiology” tools, such as genetically encoded calcium indicators and genetically encoded voltage indicators, have enabled dynamic observation of up to tens of thousands of neurons.2,3,4,5,6,7 On the optical side, 3D imaging techniques with depth-sectioning capabilities have played a crucial role in identifying the 3D networks of functioning neurons.8,9,10

Many different 3D imaging techniques have been developed over the past century, including confocal microscopy, optical coherence tomography (OCT), lightsheet fluorescence microscopy, and others.11,12 To date, however, the major workhorse driving new discoveries in neuroscience from in vivo imaging studies is arguably multiphoton microscopy. The reason is simple: owing to its longer excitation wavelength and its efficient collection of multiply scattered fluorescence, its penetration depth exceeds that of other 3D imaging technologies.

This observation suggests that if the penetration depth of the many other available optical measurement techniques could be increased, neuroscience, and in vivo deep tissue imaging in general, could exploit the full range of imaging techniques, which differ widely in spatial and temporal resolution, observable field of view (FOV), and source of contrast. In this regard, the fields of adaptive optics (AO) and wavefront shaping, which correct for low-order aberrations and multiple scattering, respectively, have shown great potential, with impressive demonstrations of high-resolution imaging through highly turbid biological tissue and even increased information throughput of conventional optical systems.13,14,15,16,17,18,19,20,21 Here, we describe the emerging fields of AO and wavefront shaping and provide insight into their performance and range of applications, as well as their current limitations and the figures of merit that should be achieved for a broader impact in the bioimaging community.

2. Difficulties in in vivo Deep Tissue Imaging

2.1. Aberrations and multiple scattering

The propagation of light is described by diffraction, which is in turn affected by the medium through which the light travels. The accumulated phase delay of light is determined by the refractive index and the distance the light has traveled within the medium.22 From this simple description, we can see that if light with different incident angles, or spatial frequencies, is incident on a slab of homogeneous material, the phase delay acquired by the different spatial frequencies will vary: the component incident perpendicular to the slab acquires the least phase delay while passing through it, whereas the component incident at the most inclined angle acquires the largest.16

This simple observation describes the difficulty we face when we try to focus light through a thin piece of homogeneous material, such as a coverslip. To obtain the sharpest focus that is theoretically achievable, all spatial frequencies of light within the numerical aperture (NA) of the optical system should interfere constructively at the focal plane. However, simply adding a thin coverslip to the optical path means that the phases of the different wave vectors no longer coincide at the focal plane, which enlarges the focus and alters its shape. Such image-degrading phenomena are called aberrations; specifically, a thin coverslip in the optical path generates defocus and spherical aberration. For this reason, many high-NA objective lenses, which are the most vulnerable to aberrations, have movable lens elements (correction collars) that can be tuned to correct for the aberrations of coverslips of varying thickness.
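The angle dependence described above can be made concrete with a small angular-spectrum calculation. The sketch below assumes a coverslip index of 1.52, a thickness of 170 μm, and a wavelength of 0.5 μm (illustrative values, not taken from the text), ignores reflections, and computes the extra phase each plane-wave component picks up when the slab replaces an equal thickness of air.

```python
import math


def slab_phase_delay(sin_theta, n_slab=1.52, thickness_um=170.0, wavelength_um=0.5):
    """Extra phase (rad) picked up by one plane-wave component when a slab of
    index n_slab replaces an equal thickness of air.

    sin_theta is the sine of the component's angle in air. Inside the slab the
    axial wavenumber is k0 * n * cos(theta_t) = k0 * sqrt(n**2 - sin_theta**2)
    (Snell's law), so the extra phase is thickness * (kz_slab - kz_air).
    Reflections at the slab surfaces are ignored (assumed model).
    """
    k0 = 2.0 * math.pi / wavelength_um
    kz_slab = k0 * math.sqrt(n_slab ** 2 - sin_theta ** 2)
    kz_air = k0 * math.sqrt(1.0 - sin_theta ** 2)
    return thickness_um * (kz_slab - kz_air)


reference = slab_phase_delay(0.0)  # normal incidence: a uniform offset, harmless on its own
for s in (0.0, 0.3, 0.6, 0.9):
    extra_waves = (slab_phase_delay(s) - reference) / (2.0 * math.pi)
    print(f"sin(theta) = {s:.1f}: {extra_waves:6.1f} waves more than at normal incidence")
```

In this simplified model, a constant phase for all angles would leave the focus untouched; it is the angle-dependent part that matters. Much of it can be cancelled by refocusing (defocus), and the remainder is the spherical aberration that correction collars are designed to compensate.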

Aberrations can be induced by any object in the optical path. One might then ask whether we can simply remove the source of aberrations. Unfortunately, this is not possible in most cases, because the sample of interest is often itself the source. Aberrations in biological samples arise from the differing refractive indices of the cell membrane, cytoplasm, and subcellular organelles, and are in general spatially inhomogeneous.17 Even the aberrations within a single cell can be severe enough to degrade image resolution, especially for high-resolution imaging systems such as fluorescence-based super-resolution microscopes.18,23

As we image deeper into biological tissue, aberrations keep accumulating. However, thick biological tissue cannot be modeled correctly by simply accumulating the phase delays into a single phase mask, because scattering also changes the direction of light. The amount of scattering can be described statistically by the scattering mean free path $l_s$ of the tissue, the mean distance light propagates before being scattered, which is around 100 μm for most biological tissue.24 The direction into which light is scattered at each event is also important and is described by the anisotropy factor $g = \langle \cos\theta \rangle$, the ensemble average of the cosine of the scattering angle $\theta$. Given the typical size and refractive index variation of cells, $g$ is around 0.9 for biological tissue, indicating predominantly forward scattering. Combining the two quantities defines the transport mean free path $l_t = l_s/(1-g)$, the mean distance light travels before its scattering becomes effectively isotropic,25,26 or, in other words, before the direction of light loses all correlation with its original trajectory.
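Plugging the typical tissue values quoted above into this definition gives a quick sense of scale (an order-of-magnitude estimate only):

```latex
l_t = \frac{l_s}{1 - g} = \frac{100\ \mu\mathrm{m}}{1 - 0.9} = 1000\ \mu\mathrm{m} \approx 1\ \mathrm{mm}
```

Light therefore retains some memory of its original direction over roughly $1/(1-g) = 10$ scattering mean free paths.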

2.2. Performance of different imaging modalities for deep tissue imaging

As discussed in the previous section, aberrations and ultimately multiple scattering limit the maximum imaging depth in biological tissue. Because imaging thick samples requires depth sectioning, only depth-selective imaging systems can be used for high-resolution volumetric imaging. Such systems typically gate the light originating from different depths. For example, confocal microscopy achieves depth sectioning by placing a physical pinhole at a conjugate image plane, which spatially gates the ballistic light originating from the plane of interest. However, the amount of ballistic light decays exponentially in biological tissue according to the Beer–Lambert law, $\propto e^{-z/l_s}$, where $z$ is the depth inside the tissue. The remaining, scattered light follows random trajectories. As a result, most of the light originating at the depth of interest is blocked by the pinhole, while light originating at other depths enters the pinhole with higher probability. Confocal microscopy with a sufficient signal-to-noise ratio is therefore typically limited to about a single scattering mean free path inside tissue.
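The exponential decay is easy to tabulate. This minimal sketch assumes $l_s$ = 100 μm (the typical value quoted in Sec. 2.1) and a one-way path through the tissue; it simply evaluates the Beer–Lambert factor at a few depths.

```python
import math

L_S_UM = 100.0  # scattering mean free path; the typical tissue value quoted in Sec. 2.1


def ballistic_fraction(depth_um, l_s_um=L_S_UM):
    """Fraction of light still unscattered (ballistic) after a one-way path of
    depth_um, following the Beer-Lambert decay exp(-z / l_s)."""
    return math.exp(-depth_um / l_s_um)


for z_um in (50, 100, 300, 500, 1000):
    print(f"z = {z_um:4d} um (z/l_s = {z_um / L_S_UM:4.1f}): "
          f"ballistic fraction = {ballistic_fraction(z_um):.1e}")
```

At a depth of 1 mm, i.e. ten scattering mean free paths, only about $e^{-10} \approx 4.5 \times 10^{-5}$ of the light is still ballistic under these assumptions.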

Although the principle of depth-selective photon gating varies, the same logic applies to all depth-selective imaging systems. OCT, which uses temporal coherence to obtain depth selectivity, also assumes that backscattered light follows a ballistic trajectory. Once the backscattered light is scattered and its path altered, temporal coherence can no longer separate light backscattered from different depths, and signals from different layers and positions start to overlap, limiting the imaging depth. The same applies to multiphoton microscopy: although it relies on nonlinear gating for depth selectivity, the sharp focus required for selective nonlinear excitation at a specific depth is destroyed after about a single scattering mean free path. Nevertheless, among all noninvasive optical imaging techniques, OCT generally boasts the largest imaging depth because it is based on coherent backscattering rather than fluorescence, so longer wavelengths in the telecom range, which benefit from longer scattering mean free paths in biological tissue, can be used. Multiphoton microscopy can likewise use longer excitation wavelengths to excite the same fluorophore, which gives it a major depth advantage over single-photon fluorescence microscopy techniques. For example, three-photon microscopy has recently enabled high-resolution imaging at depths of up to ∼1.3 mm in live mouse brains.27

For other depth-selective imaging systems, such as lightsheet fluorescence microscopy, where depth selectivity comes from selectively illuminating a specific plane, the situation is much worse. Because such systems have no additional gating mechanism to distinguish light from different depths, the slightest aberration can destroy depth selectivity as well as resolution, which currently limits their application to relatively transparent samples.

3. Adaptive Optics

3.1. Brief history

Horace Babcock first proposed the principle of AO in 1953, suggesting the use of a deformable optical element to remove the effects of atmospheric turbulence in astronomy.28 The idea was first realized by the U.S. military and aerospace communities in the late 1960s and early 1970s.29 In the early days of AO, high costs and the fact that the initial technology developments were classified restricted research to military purposes such as tracking satellites.30 Since the late 1980s, AO systems have been incorporated into large telescopes and have revolutionized ground-based astronomy.31 Much of the military research was also declassified in the 1990s, driving further developments.32 Not long after, AO was adopted by the bioimaging community because it enabled near-diffraction-limited imaging through biological tissue. The first application was demonstrated in ophthalmoscopy, where AO was combined with a fundus camera. Because the causes of aberrations are generally the same across imaging modalities, AO has been widely applied since the early 2000s to different bioimaging modalities, such as scanning laser ophthalmoscopes and optical microscopy in general.33,34

3.2. Principles

The general principle of AO is based on the time reversal of light. Owing to the time-reversal symmetry of the wave equation, if we could play back time, light would propagate in exactly the opposite direction from which it came, without violating the laws of physics.20 In other words, if we reverse the direction of each wave vector making up a wavefront, the light returns to its origin no matter how complex the wavefront is. For monochromatic light, this is equivalent to phase conjugation.35 Time reversal thus shows that light can be focused through any type of aberrating medium as long as absorption is negligible.36 If we could place a small beacon of light at the depth and position of interest, we could detect the aberrated wavefront emerging through any overlying aberrating structure. An optical system that plays back a beam corresponding to the phase conjugate of the measured wavefront then focuses light exactly where the beacon was located.37
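A toy numerical check of the phase-conjugation argument, under simplifying assumptions: the incident beam is split into 256 unit-amplitude components (for example, modulator pixels), the medium adds an arbitrary, hypothetical phase delay to each on the way to the beacon, and absorption is neglected. Displaying the negative of the measured phases makes every contribution arrive in phase.

```python
import cmath
import math
import random

random.seed(0)

N_MODES = 256  # number of independently controlled components (illustrative)

# Hypothetical aberration: an arbitrary phase delay for each component between
# the modulator and the beacon position (no absorption assumed).
aberration = [random.uniform(0.0, 2.0 * math.pi) for _ in range(N_MODES)]


def focal_intensity(input_phases):
    """Intensity at the beacon: coherent sum of unit-amplitude components,
    each delayed by the medium's phase."""
    field = sum(cmath.exp(1j * (p + a)) for p, a in zip(input_phases, aberration))
    return abs(field) ** 2


flat = [0.0] * N_MODES                # uncorrected (flat) wavefront
conjugate = [-a for a in aberration]  # phase conjugate of the measured wavefront

print("flat wavefront  :", round(focal_intensity(flat), 1))
print("phase conjugate :", round(focal_intensity(conjugate), 1), "of a maximum of", N_MODES ** 2)
```

However random the aberration, the conjugated input reaches the largest possible value, N², whereas the flat wavefront only produces a speckle-like intensity of order N.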

This is exactly how AO works in astronomy. In astronomical AO, a laser guide star is generated by shooting high-power lasers at the sodium layer of the upper atmosphere. By aiming the laser at a region of the sky close to the astronomical object of interest, a bright artificial star, the guide star, is created from the fluorescence reemitted by the sodium layer. This artificial beacon acts as a point source located high in the atmosphere. By measuring the resulting wavefront of this beacon on the ground with one of various wavefront sensing methods, the distortions due to the inhomogeneous atmosphere can be determined.38 A wavefront modulator can then display the phase conjugate of this distortion to cancel it out. Assuming linear shift invariance of the optical imaging system, astronomical objects near the guide star can then be imaged at high resolution. However, because atmospheric aberrations vary spatially, the correction is usually limited to a small region known as the isoplanatic patch. AO in bioimaging works on exactly the same principles as in astronomy, but with some subtle differences, such as the amount of aberration, the temporal dynamics, and the type of guide star that can be generated, which we discuss below.

3.3. Guide stars (feedback mechanisms)

A major difference between AO in astronomy and in bioimaging is that generating a guide star at an arbitrary position is not trivial in bioimaging.19 In astronomy, the sodium layer continuously covers the entire upper atmosphere, so a guide star can be generated anywhere simply by aiming a laser. In bioimaging, however, the guide star must be generated by some endogenous biostructure or injectable exogenous probe that can be found throughout the sample.19 One of the most popular approaches is to use the fluorescence emitted from target structures as the feedback guide star for wavefront measurements.39,40,41 In this case, however, if fluorescence is emitted from multiple sources at once, the resulting wavefront measurement will not correspond to the aberrations of the sample. To remedy this, a gating mechanism is needed to select light emitted from a single point source. This can be realized by spatial gating in confocal microscopes42 or nonlinear gating in multiphoton microscopes43, which selectively excite and collect fluorescence from a single emitter for wavefront measurements.

An additional difficulty in bioimaging is the limited fluorescence signal budget of typical fluorescent dyes and fluorescent proteins. Because continuous strong excitation induces photobleaching and phototoxicity, using fluorescent targets as guide stars can sometimes harm the object of interest itself. An interesting way to overcome this problem is to use elastically backscattered light rather than fluorescence. In this case, by using light sources with minimal absorption in biological tissue, an efficient guide star can be formed from arbitrary refractive index boundaries within the sample. To generate a single guide star within the 3D volume from backscattered light, coherence gating has been shown to be an efficient method.44,45
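How thin a depth slice the coherence gate selects can be estimated with the standard OCT relation for a source with a Gaussian spectrum of center wavelength $\lambda_0$ and full-width-at-half-maximum bandwidth $\Delta\lambda$ (reflection geometry, in air). The numbers below are illustrative assumptions, not values from the cited works:

```latex
l_c = \frac{2\ln 2}{\pi}\,\frac{\lambda_0^2}{\Delta\lambda}
\approx 0.44 \times \frac{(1.3\ \mu\mathrm{m})^2}{0.1\ \mu\mathrm{m}} \approx 7.5\ \mu\mathrm{m}
```

Inside tissue this window shrinks further by the refractive index; a broader bandwidth gives a thinner gate and hence a more localized guide star.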

3.4. Wavefront sensing

Once an appropriate guide star has been chosen, the wavefront emitted from it must be detected. There are two broad approaches: sensor-based, direct wavefront detection methods46 and sensorless wavefront detection methods.20

Direct wavefront sensing, as the name implies, requires a dedicated detector that can measure the optical wavefront. The most direct method is to set up an interferometer and measure the complex light field from interference fringes. Any conventional holographic measurement method, such as phase-shifting holography47 or off-axis holography48, can be used for this purpose. This approach has the advantage that commercial megapixel CMOS or CCD detectors can be fully exploited for high-resolution wavefront measurements. However, because an additional reference beam is needed, this method is limited to guide stars based on coherent scattering and cannot be used with incoherent processes such as fluorescence.
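As an illustration of how phase-shifting holography turns intensity frames into phase, here is a minimal sketch of the common four-step scheme at a single camera pixel. It assumes one convention among several: the reference phase is stepped by π/2 so that the recorded intensities are $I_k = A + B\cos(\phi + k\pi/2)$. The values of A, B, and φ are illustrative.

```python
import math


def four_step_phase(i0, i1, i2, i3):
    """Recover the object-reference phase difference from four interferograms
    recorded with reference phase steps of 0, pi/2, pi and 3*pi/2.

    With I_k = A + B*cos(phi + k*pi/2):
        I_0 - I_2 = 2B*cos(phi)   and   I_3 - I_1 = 2B*sin(phi)
    """
    return math.atan2(i3 - i1, i0 - i2)


# Synthetic check at one pixel (A, B and phi_true are illustrative values).
A, B, phi_true = 1.0, 0.6, 1.2
frames = [A + B * math.cos(phi_true + k * math.pi / 2) for k in range(4)]
print("recovered phase:", four_step_phase(*frames))
```

Applied pixel by pixel on a megapixel camera, the same differences also give the fringe amplitude B, so the full complex field is obtained; reversing the sign convention of the phase steps flips the sign of the recovered phase.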

Perhaps partly because of this limitation, the most popular direct wavefront sensor for AO is the Shack–Hartmann wavefront sensor, which many applications prefer for its ease of use. In a Shack–Hartmann wavefront sensor, the incident wavefront passes through a microlens array before impinging on a 2D detector. The array divides the wavefront into multiple subapertures, and the local tilt of the wavefront over each subaperture is translated into a lateral shift of the corresponding focal spot by the Fourier transform property of the lens. By detecting these shifts on the 2D detector, the incident wavefront can be reconstructed. The Shack–Hartmann sensor requires no interferometric reference beam and can be applied directly to fluorescence signals. However, its spatial resolution is limited by the number of lenses in the microlens array. In addition, the tilt resolution and the maximum measurable tilt angle are inversely related through the focal length of the lenses, which can be a limiting factor.49
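The slope-measurement principle can be sketched in one dimension. Everything here is a hypothetical setup (20 lenslets, 150 μm pitch, 5 mm focal length, a made-up wavefront), and real sensors work in 2D with least-squares reconstruction rather than the simple running sum used below.

```python
# Hypothetical 1D sensor geometry (illustrative values, not from the text).
PITCH_UM = 150.0    # lenslet pitch
FOCAL_UM = 5000.0   # lenslet focal length
N_LENSLETS = 20
APERTURE_UM = N_LENSLETS * PITCH_UM


def true_wavefront_um(x_um):
    """Hypothetical aberrated wavefront (optical path difference, um)."""
    x = x_um / APERTURE_UM  # normalized pupil coordinate in [0, 1]
    return 0.5 * x ** 2 - 0.3 * x ** 3


edges = [i * PITCH_UM for i in range(N_LENSLETS + 1)]

# Forward model: each lenslet focuses its subaperture, and the spot moves by
# (focal length) x (mean wavefront tilt across that subaperture).
spot_shifts_um = [
    FOCAL_UM * (true_wavefront_um(edges[i + 1]) - true_wavefront_um(edges[i])) / PITCH_UM
    for i in range(N_LENSLETS)
]

# Reconstruction: shift / focal length gives the tilt; summing tilt x pitch
# across the array recovers the wavefront at the lenslet edges (up to piston).
reconstructed_um = [0.0]
for shift in spot_shifts_um:
    reconstructed_um.append(reconstructed_um[-1] + (shift / FOCAL_UM) * PITCH_UM)

# Trade-off set by the focal length: a spot must stay behind its own lenslet,
# so the largest tilt is about (PITCH / 2) / FOCAL, while a fixed smallest
# detectable spot shift gives a tilt resolution of shift / FOCAL.
max_tilt = 0.5 * PITCH_UM / FOCAL_UM
print("largest tilt here:", max(abs(s) / FOCAL_UM for s in spot_shifts_um), "limit:", max_tilt)
```

The wavefront is recovered only at N_LENSLETS + 1 points, which is the spatial-resolution limit mentioned above. Lengthening FOCAL_UM enlarges every spot shift (finer tilt resolution) but lowers `max_tilt`, which is the inverse relation set by the focal length.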

Alternatively, several AO implementations have shown that a dedicated wavefront sensor is not strictly necessary. Lacking a 2D sensor to measure the wavefront directly, these indirect, or sensorless, wavefront detection methods instead reconstruct the aberrated wavefront from iterative measurements. Here, the wavefront modulator is usually used to apply iterative perturbations to the incident beam. When the light reaches the guide star or plane of interest, it excites the feedback signal, whose intensity varies with the applied perturbation. If the perturbation cancels the aberrations due to the overlying tissue, the feedback signal is maximized. By repeating such perturbations for the different modes of the incident beam, the optimal perturbation map can be found. This optimal map corresponds to the phase conjugate of the aberrated wavefront that a direct wavefront sensor would have measured.50,51
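A minimal simulation of this iterative scheme, assuming the feedback signal is proportional to the on-axis focal intensity and that the unknown aberration (the hypothetical `HIDDEN` coefficients) consists of four low-order Zernike modes. The optimizer only sees `feedback()`: it scans one mode amplitude at a time, keeps the brightest value, and sweeps over all modes a few times.

```python
import cmath
import math

# Pupil sampled on a square grid; only points inside the unit disk are kept.
GRID = 31
PUPIL = []
for i in range(GRID):
    for j in range(GRID):
        x, y = -1.0 + 2.0 * i / (GRID - 1), -1.0 + 2.0 * j / (GRID - 1)
        if x * x + y * y <= 1.0:
            PUPIL.append((x, y))


def zernike_modes(x, y):
    """Four low-order Zernike modes (Noll normalization) in Cartesian form."""
    r2 = x * x + y * y
    return [
        math.sqrt(3.0) * (2.0 * r2 - 1.0),                  # defocus
        math.sqrt(6.0) * (x * x - y * y),                   # astigmatism (0 deg)
        math.sqrt(8.0) * (3.0 * r2 - 2.0) * x,              # coma (x)
        math.sqrt(5.0) * (6.0 * r2 * r2 - 6.0 * r2 + 1.0),  # primary spherical
    ]


MODE_VALUES = [zernike_modes(x, y) for x, y in PUPIL]
HIDDEN = [0.6, -0.4, 0.3, 0.5]  # hypothetical sample aberration, rad per mode


def feedback(correction):
    """Guide-star signal, taken as the on-axis focal intensity (1 for a perfect
    focus) when the modulator subtracts `correction` from the aberration."""
    total = 0j
    for values in MODE_VALUES:
        residual = sum((h - c) * v for h, c, v in zip(HIDDEN, correction, values))
        total += cmath.exp(1j * residual)
    return abs(total / len(MODE_VALUES)) ** 2


def sensorless_modal_search(scan, passes=3):
    """Coordinate-wise scan of the mode amplitudes, maximizing the feedback."""
    correction = [0.0] * len(HIDDEN)
    for _ in range(passes):
        for k in range(len(correction)):
            correction[k] = max(
                scan, key=lambda a: feedback(correction[:k] + [a] + correction[k + 1:])
            )
    return correction


SCAN = [round(-1.5 + 0.05 * s, 2) for s in range(61)]  # trial amplitudes, rad
found = sensorless_modal_search(SCAN)
print("recovered amplitudes:", found)
print("feedback before / after:", round(feedback([0.0] * 4), 3), round(feedback(found), 3))
```

The recovered amplitudes reproduce the hidden aberration, so displaying them with a minus sign on the modulator is the phase-conjugate correction. The number of feedback measurements (scan points × modes × passes) is what makes the modulator's update rate important.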

Indirect, sensorless methods have the advantage that no additional wavefront sensor is needed, which reduces cost and hardware complexity. Because a single wavefront modulator both measures the aberrated wavefront indirectly and displays the correction, the system is inherently aligned and corrects for all aberrations along the optical path. The feedback signal is also the same as the target measurement (fluorescence, backscattered light, photoacoustic signal, etc.), so conventional imaging modalities need only minimal modification. In comparison, direct wavefront sensing requires dedicated detectors suited to each kind of feedback signal, which can make it difficult to incorporate into conventional imaging systems.

3.5. Wavefront modulators

Once the aberration has been identified using the chosen guide star and wavefront sensing method, the final step of AO is to control the incident light beam so that the aberrations cancel out. As described above, this is achieved by shaping the wavefront of the incident light into the phase conjugate of the induced aberrations. Various commercially available wavefront modulators can be used to control the wavefront accurately.

Deformable mirrors date back to the initial experiments in astronomy, and MEMS deformable mirrors are now among the most popular wavefront modulators. These devices offer fast update rates of up to several tens of kHz and accurate, analog, phase-only control of independent mirror elements. Their current limitations are a limited number of pixels (actuators) and high cost. Although MEMS deformable mirrors with several thousand pixels are deployed in large telescopes, their cost is prohibitive for a small research lab: a 1000-mirror device costs nearly $100,000, which may be an option only for a well-funded lab. For applications such as ophthalmoscopy, where the aberrations are concentrated in low orders, MEMS deformable mirrors with fewer mirrors are widely used because their fast update rates are crucial for human subjects, whose involuntary movements are inevitable.

Besides MEMS deformable mirrors, another popular wavefront modulator is the liquid-crystal-on-silicon spatial light modulator (LCOS SLM). LCOS SLMs rely on the physical rotation of liquid crystals to induce controllable phase delays. As a result, their update rates are currently limited to hundreds of Hz, 2 to 3 orders of magnitude slower than MEMS-based devices. The phase delay also depends on the polarization of light, which can be problematic for unpolarized applications. However, standard devices can have up to millions of independently controllable pixels, which can be a game changer for correcting high-order aberrations or multiple scattering, both of which require a large number of degrees of freedom.
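To see why the update rate matters for sensorless correction (Sec. 3.4), the sketch below estimates a lower bound on the optimization time: the time needed just to display every trial pattern once. It takes the lower ends of the rates quoted above (10 kHz for MEMS, 100 Hz for LCOS) and assumes a hypothetical task of 1000 modes with 8 trial patterns each, ignoring detector and computation overhead.

```python
# Update rates quoted in this section, taken at the lower end of each range.
UPDATE_RATE_HZ = {
    "MEMS deformable mirror": 10_000,  # "several 10s of kHz"
    "LCOS SLM": 100,                   # "100s of Hz"
}


def pattern_display_time_s(n_modes, patterns_per_mode, rate_hz):
    """Lower bound on a sensorless optimization's duration: time to display
    every trial pattern once (detector and computation overhead ignored)."""
    return n_modes * patterns_per_mode / rate_hz


# Hypothetical task: 1000 modes, 8 trial patterns per mode.
for device, rate in UPDATE_RATE_HZ.items():
    print(f"{device:24s}: {pattern_display_time_s(1000, 8, rate):6.1f} s")
```

Under these assumptions the same correction takes under a second on a MEMS mirror but more than a minute on an LCOS SLM, even though the SLM is the device with enough pixels for high-order correction.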

As the two modulators described above show, an ideal wavefront modulator should satisfy two main conditions: a fast update rate and a large number of controllable pixels. MEMS deformable mirrors and LCOS SLMs each have pros and cons in these respects. With regard to these figures of merit, digital micromirror devices (DMDs) have recently shown their applicability as wavefront modulators. DMDs are binary devices whose individual pixels can only switch the incident light on or off, and they are widely used in commercial displays. They boast up to millions of pixels and fast update rates of up to 20 kHz. For some specific applications, especially in the multiple scattering regime, binary amplitude control has been shown to be sufficient for wavefront control, or wavefront shaping. Where analog phase modulation is needed, DMDs can still be used with the Lee hologram method.52 In this case, however, the light efficiency is extremely low, around 1%, which currently limits their widespread use.
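A minimal sketch of a binary Lee-type hologram for a DMD. The carrier period, the 50% threshold, and the phase map are illustrative assumptions. A mirror is switched on wherever cos(2π·col/T − φ) > 0. The desired phase then rides on one of the first diffraction orders (the other carries its conjugate), which has to be isolated with a spatial filter in a Fourier plane (not modeled here); the light sent into the other orders is discarded.

```python
import math


def lee_hologram(target_phase, period_px=4):
    """Binary (on/off) DMD pattern encoding target_phase[row][col] (radians)
    with a carrier of period_px pixels along the column direction.

    A mirror is on where cos(2*pi*col/period_px - phase) > 0; the phase map is
    thereby encoded as a lateral shift of the local fringes."""
    return [
        [1 if math.cos(2.0 * math.pi * col / period_px - phi) > 0.0 else 0
         for col, phi in enumerate(row)]
        for row in target_phase
    ]


# Example: a hypothetical 8 x 16 phase map whose phase increases from row to row.
phase_map = [[0.25 * r for _ in range(16)] for r in range(8)]
for line in lee_hologram(phase_map):
    print("".join("#" if v else "." for v in line))
```

In the printout, the fringes shift sideways as the target phase changes from row to row. That local fringe displacement is how a binary device encodes an analog phase.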

3.6. Applications in bioimaging

3.6.1. General applicability among various imaging techniques

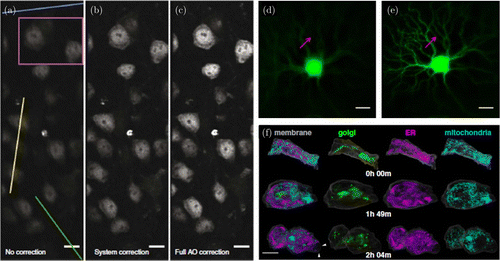

Since all high-resolution optical imaging systems rely on the wave nature of light, AO in its various forms can compensate for aberrations in all types of bioimaging. For example, in confocal microscopy, AO can correct aberrations in both the illumination and detection paths.42 However, the difference between the illumination and detection wavelengths may require nontrivial engineering, which can make the system more complex when high levels of correction are needed. Multiphoton microscopy has also benefited from AO, since degradation of the optical focus in the illumination path can be easily corrected with a single wavefront modulator.13,53 Because the total fluorescence is measured in multiphoton microscopy, aberrations in the detection path do not matter as long as all the fluorescence is detected. And because nonlinear excitation guarantees that the fluorescence intensity is maximal for the sharpest focus, total fluorescence intensity is often used as the feedback figure of merit in sensorless AO systems.54

In sensorless AO, various types of modes can be used to decompose the aberrated wavefront, and the appropriate coefficient for each mode is then found.51,55 Perhaps the most popular approach uses Zernike modes to decompose optical aberrations.56 Because weak aberrations are generally concentrated in low-order Zernike modes, the measurement time can be reduced by determining the correction amplitudes of only the few Zernike modes that are expected.51,57,58,59,60 Another example of sensorless wavefront sensing is pupil segmentation, in which the pupil of the optical system is divided into multiple segments and the appropriate tilt and phase delay for each segment are found iteratively to optimize the figure of merit.61 When using a modal approach for sensorless AO, however, one must make sure the search is thorough enough to find the correct wavefront without becoming trapped in a local minimum (or maximum). For example, if the amplitude of spherical aberration is 3λ, a search from −λ to λ will never find the correct wavefront. Several representative results of AO in bioimaging are shown in Fig. 1.
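The search-range pitfall can be reproduced numerically. The sketch assumes a hypothetical sample with 3 waves of pure primary spherical aberration (Noll-normalized Z(4,0)), uses the Strehl ratio as the figure of merit, and compares a scan of the correction amplitude over −1 to 1 waves with a scan over −4 to 4 waves.

```python
import cmath
import math


def spherical_zernike(u):
    """Primary spherical aberration Z(4,0), Noll-normalized, written in terms of
    u = rho**2 so that uniform samples of u sample the pupil area uniformly."""
    return math.sqrt(5.0) * (6.0 * u * u - 6.0 * u + 1.0)


SAMPLES = [(k + 0.5) / 2000 for k in range(2000)]  # midpoint samples of u in [0, 1]
TRUE_WAVES = 3.0  # hypothetical spherical aberration amplitude, in waves


def strehl(correction_waves):
    """Peak intensity relative to an aberration-free focus for the rotationally
    symmetric residual (TRUE_WAVES - correction_waves) * Z(4,0)."""
    residual = TRUE_WAVES - correction_waves
    field = sum(cmath.exp(2j * math.pi * residual * spherical_zernike(u)) for u in SAMPLES)
    return abs(field / len(SAMPLES)) ** 2


def best_in_range(lo, hi, step=0.05):
    """Return the trial amplitude in [lo, hi] with the highest Strehl ratio."""
    n = int(round((hi - lo) / step))
    trials = [round(lo + i * step, 2) for i in range(n + 1)]
    return max(trials, key=strehl)


narrow = best_in_range(-1.0, 1.0)
wide = best_in_range(-4.0, 4.0)
print(f"search -1..1 waves: best = {narrow:+.2f}, Strehl = {strehl(narrow):.3f}")
print(f"search -4..4 waves: best = {wide:+.2f}, Strehl = {strehl(wide):.3f}")
```

The narrow scan still returns an "optimum", but it leaves roughly two or more waves of spherical aberration uncorrected and a Strehl ratio of only a few percent. Only the wide scan reaches the true amplitude. A real optimizer sees nothing wrong in the first case, which is why the search range has to be chosen from prior knowledge of the expected aberration.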

For imaging systems that can measure the phase of light in addition to its intensity, computational AO is also possible.62,63 Because the full optical field is measured, arbitrary aberrations can be added digitally to the measured image to simulate the corresponding response. This degree of freedom allows ideas from sensorless AO to be applied directly in computation: instead of applying perturbations iteratively with a wavefront modulator, the perturbations are applied in silico and the response is calculated. By optimizing the figure of merit over the perturbed images, the appropriate perturbation, in other words the aberration correction, can be found through computation alone.
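A one-dimensional toy version of computational AO, under stated assumptions: the "measured" complex field is a single point scatterer seen through a hypothetical defocus-like pupil phase a·f² (f is the signed spatial-frequency index), and the image sharpness Σ|E|⁴ is used as the figure of merit (one of several metrics in use). Trial corrections are applied purely numerically in the Fourier domain.

```python
import cmath
import math

N = 64  # number of samples in the 1D field


def dft(x, inverse=False):
    """Plain O(N^2) discrete Fourier transform (the inverse is normalized by 1/N)."""
    sign = 1.0 if inverse else -1.0
    out = [sum(x[n] * cmath.exp(sign * 2j * math.pi * k * n / N) for n in range(N))
           for k in range(N)]
    return [v / N for v in out] if inverse else out


FREQS = [k if k < N // 2 else k - N for k in range(N)]  # signed frequency indices


def apply_pupil_phase(field, a):
    """Multiply the field's spectrum by exp(i * a * f**2), a defocus-like phase."""
    spectrum = dft(field)
    return dft([s * cmath.exp(1j * a * f * f) for s, f in zip(spectrum, FREQS)], inverse=True)


def sharpness(field):
    """Image-sharpness metric sum(|E|^4); largest when the energy is concentrated."""
    return sum(abs(v) ** 4 for v in field)


# "Measured" complex field: a point scatterer seen through a hypothetical aberration.
A_TRUE = 0.05
obj = [0j] * N
obj[10] = 1.0 + 0j
measured = apply_pupil_phase(obj, A_TRUE)

# Computational AO: try corrections in silico and keep the sharpest image.
trials = [round(-0.2 + 0.01 * i, 2) for i in range(41)]
best_a = max(trials, key=lambda a: sharpness(apply_pupil_phase(measured, -a)))
corrected = apply_pupil_phase(measured, -best_a)
print("estimated aberration coefficient:", best_a)
print("sharpness before / after:", round(sharpness(measured), 4), round(sharpness(corrected), 4))
```

No hardware is involved after the measurement: every trial correction is a multiplication in the Fourier domain, so the search can be as fine or as wide as computation time allows. This presumes that the measured phase is stable, which is the requirement for computational AO in the first place.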

Fig. 1. AO is a general approach that can be applied to all imaging modalities. (a) Two-photon imaging of neurons in a 300 μm brain slice, (b) after correcting for system-induced aberrations, and (c) after correcting for both system- and sample-induced aberrations using pupil-segmentation-based wavefront sensing.61 Scale bars: 10 μm. (d) Confocal fluorescence imaging of retinal ganglion cells and (e) the same after wavefront-sensorless, Zernike-mode-based AO; red arrows highlight fine structures that were invisible before correction.64 Scale bars: 20 μm. (f) 4D imaging of entire living organisms at high spatiotemporal resolution using lattice lightsheet microscopy with direct-wavefront-sensing AO.65 Scale bar: 10 μm. Figures (a)–(c) reprinted with permission from Ref. 61, (d)–(e) from Ref. 64, and (f) from Ref. 65.

3.6.2. AO for super-resolution imaging

Fluorescence-based super-resolution imaging was awarded the 2014 Nobel Prize in Chemistry for bringing optical imaging into the realm of sub-100 nm resolution. In less than two decades since its invention, a wide variety of super-resolution microscopy technologies have been commercialized and widely distributed, helping biologists study life at the nanoscale. However, because the resolution of all fluorescence-based super-resolution methods is still proportional to that of the underlying diffraction-limited system, a small focus must still be generated to realize an efficient super-resolution microscope. For example, because single-molecule localization precision is proportional to the width of the point spread function (PSF) of the detection system, a high-resolution microscope rather than a low-resolution one must be used for localization.66,67 The same applies to structured illumination microscopy68 and stimulated emission depletion (STED) microscopy.69 Because the resolution enhancement is defined relative to the conventional diffraction limit of the optical system, a high-resolution imaging system must be the starting point for super-resolution imaging.
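The proportionality between localization precision and PSF width can be checked with a short Monte Carlo sketch. It assumes an idealized 1D model with no background and no pixelation: every detected photon lands at a Gaussian-distributed position with the PSF's standard deviation, and the emitter position is estimated by the centroid. The photon count and PSF widths are illustrative.

```python
import random
import statistics

random.seed(1)


def localization_error_nm(psf_sigma_nm, n_photons, n_trials=1000):
    """Spread of centroid estimates for one emitter at x = 0, assuming each
    detected photon lands at a Gaussian-distributed position with the PSF's
    standard deviation (1D, no background, no pixelation)."""
    estimates = []
    for _ in range(n_trials):
        positions = [random.gauss(0.0, psf_sigma_nm) for _ in range(n_photons)]
        estimates.append(sum(positions) / n_photons)
    return statistics.pstdev(estimates)


N_PHOTONS = 400  # hypothetical photon count per localization
results = {sigma: localization_error_nm(sigma, N_PHOTONS) for sigma in (100.0, 200.0)}
for sigma, err in results.items():
    print(f"PSF sigma {sigma:5.0f} nm -> localization error {err:5.2f} nm "
          f"(sigma / sqrt(N) = {sigma / N_PHOTONS ** 0.5:5.2f} nm)")
```

With the same photon budget, doubling the PSF width (for example, through uncorrected aberrations) roughly doubles the localization error, following σ/√N.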

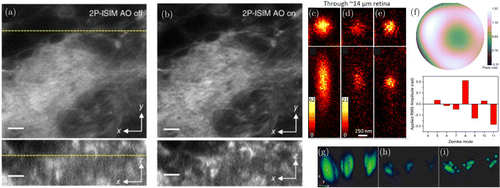

However, because super-resolution imaging requires high-resolution optics, it is also the most vulnerable to aberrations. High-resolution imaging systems work by modulating or detecting a broad band of optical spatial frequencies. When in phase, these spatial frequencies sum to form the small PSF needed for high-resolution imaging. In the presence of aberrations, however, different spatial frequencies can acquire different phase delays, which degrade the system PSF much faster than in a low-resolution system under the same degree of aberration. To circumvent this issue, recent works have demonstrated that AO can recover the resolution of various super-resolution imaging modalities and enable super-resolution imaging at the tissue level,18,43,70,71,72,73,74,75,76,77 as shown in Fig. 2.
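A toy model makes the "faster degradation" concrete. It assumes that the sample adds the same hypothetical phase $W(s) = 20\,s^4$ radians to the component with direction sine $s$, whichever system looks through it. A low-NA (0.3) and a high-NA (0.9) system are each allowed to refocus optimally before their Strehl ratios are compared.

```python
import cmath

C_RAD = 20.0  # hypothetical sample-induced phase W(s) = C_RAD * s**4 (s = direction sine)


def strehl_ratio(na, defocus_rad, n=500):
    """|<exp(i*(W(s) - defocus*s**2))>|**2 over a circular pupil of radius na in
    direction-sine space; sampling u = s**2 uniformly samples the pupil area."""
    total = 0j
    for k in range(n):
        u = na * na * (k + 0.5) / n
        total += cmath.exp(1j * (C_RAD * u * u - defocus_rad * u))
    return abs(total / n) ** 2


def best_refocused_strehl(na):
    """Let each system refocus (scan a defocus term from 0 to 40 rad) first."""
    return max(strehl_ratio(na, 0.2 * i) for i in range(201))


for na in (0.3, 0.9):
    print(f"NA {na}: Strehl ratio after best refocus = {best_refocused_strehl(na):.3f}")
```

The low-NA system samples only the nearly flat central part of W and remains essentially diffraction limited. The high-NA system collects the steep outer part, and even after optimal refocusing its Strehl ratio drops well below the 0.8 usually taken as diffraction limited.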

Fig. 2. AO for super-resolution imaging. (a) Multiphoton structured illumination imaging of the Drosophila larval brain, lateral slice 35 μm below the surface, and (b) the same after direct-wavefront-sensing-based AO.43 Scale bars: 5 μm. (c) Confocal imaging of fluorescent beads through zebrafish retina sections, (d) conventional STED image, (e) sensorless-AO-based STED image, (f) example aberration-correction wavefront and corresponding Zernike mode amplitudes, and (g)–(i) volume renderings of the data in (c)–(e).77 Figures (a)–(b) reprinted with permission from Ref. 43, and (c)–(i) from Ref. 77.

4. Wavefront Shaping

4.1. Relation with AO

Multiple scattering shares its roots with aberrations: both stem from the inhomogeneous distribution of refractive index boundaries. Wavefront shaping, which deals with multiple scattering, therefore also shares its roots with AO. In both regimes, the underlying principle is the time-reversal symmetry of light.37 The requirements for appropriate guide stars, wavefront sensing methods, and wavefront modulators are common to wavefront shaping and AO. However, although multiple scattering is simply the accumulation of many aberrating layers, it displays some properties that differ from those of aberrations, which we discuss in this section.

When correcting for aberrations, the most commonly used figure of merit for correction efficiency is the Strehl ratio, the ratio of the peak intensity of the corrected focus to that of the ideal focus allowed by the NA of the optical system. In AO, a Strehl ratio above 0.8 is usually accepted as indicating that diffraction-limited resolution has been recovered. This is achievable because, in biological samples thinner than the scattering mean free path, ballistic light still dominates near the focus. Therefore, by controlling the phase delay of the ballistic components, almost all of the incident energy can be concentrated into a single tight focus.
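The 0.8 threshold can be translated into a tolerable residual wavefront error with the extended Maréchal approximation, which holds only for small aberrations and relates the Strehl ratio $S$ to the RMS residual phase error $\sigma$ (in radians):

```latex
S \approx e^{-\sigma^2}, \qquad
S \geq 0.8 \;\Longleftrightarrow\; \sigma \leq \sqrt{-\ln 0.8} \approx 0.47\ \mathrm{rad} \approx \frac{\lambda}{13}\ \text{(RMS)}
```

The commonly quoted Maréchal criterion of $\lambda/14$ follows from the cruder approximation $S \approx 1 - \sigma^2$.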

In contrast, consider light that has undergone many scattering events, so that the thickness $L$ of the turbid medium is much larger than $l_t$. In this so-called diffusive regime, only a fraction of roughly $l_t/L$ of the incident light passes through the thickness $L$. The rest is backscattered and can never be steered to reach the guide star: because the guide star cannot interact with this portion of light, it cannot generate feedback for it. In practice, therefore, we cannot direct all of the incident light into a single focus under multiple scattering conditions. Since the Strehl ratio is negligible in this case, it is not as meaningful a measure of wavefront shaping quality through highly scattering samples as it is in AO.
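As a rough illustration, take $l_t \approx 1$ mm, which follows from the typical values $l_s \approx 100$ μm and $g \approx 0.9$ given in Sec. 2.1, and an assumed slab thickness of $L = 10$ mm:

```latex
T \sim \frac{l_t}{L} = \frac{1\ \mathrm{mm}}{10\ \mathrm{mm}} = 0.1
```

Roughly 90% of the incident light is then backscattered and never reaches a guide star behind the slab. This order-of-magnitude estimate ignores absorption and boundary corrections.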

Another difference between aberrations and multiple scattering, which also stems from the different amount of ballistic light available, is the shape of the distorted wavefront. With coherent light, multiple scattering produces a fully developed speckle pattern that spreads broadly within and beyond the turbid medium. In other words, multiply scattered light always forms a random, complex speckle pattern, and this randomness is also present in the phase of the wavefront. This is because, regardless of the shape of the incident wavefront, the wavefront transmitted through or into a turbid medium is a random sum of all of the wave vectors supported by the medium. Therefore, fully developed speckle grains have an average size set by the diffraction limit, which is determined solely by the wavelength. For this reason, when we shape the incident wavefront to generate a tight focus at a specific depth, the size and shape of the focus do not change as the wavefront shaping quality improves: the focus was already diffraction limited to begin with. Instead, the correction efficiency in wavefront shaping is usually described by the peak to background ratio (PBR), the ratio of the peak intensity of the recovered focus to the average intensity of the surrounding speckle background. Recent work has demonstrated a PBR of over 100,000 using a megapixel DMD.78
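Because the focus size is fixed by the speckle grain, the PBR is governed by the number of independently controlled modes N. A minimal sketch, assuming an idealized medium modeled as a random transmission matrix with independent circular Gaussian entries and perfect phase-only control: the input phases are set to the conjugate of the target row, and the PBR is compared with the standard expectation (π/4)(N − 1) + 1 for phase-only wavefront shaping. Binary amplitude modulation, as performed with a DMD, is expected to reach a lower value of roughly N/(2π). The function name `phase_only_pbr` and all sizes are hypothetical.

```python
import numpy as np

def phase_only_pbr(n_modes, n_outputs=1000, target=0, seed=0):
    """PBR after ideal phase-only focusing through a simulated random transmission matrix."""
    rng = np.random.default_rng(seed)
    shape = (n_outputs, n_modes)
    # Hypothetical medium: i.i.d. circular complex Gaussian transmission matrix, unit variance
    t = (rng.normal(size=shape) + 1j * rng.normal(size=shape)) / np.sqrt(2)
    slm = np.exp(-1j * np.angle(t[target]))      # conjugate phase of the target row only
    intensity = np.abs(t @ slm) ** 2               # speckle with a focus at `target`
    background = np.delete(intensity, target).mean()
    return intensity[target] / background

for n in (64, 256, 1024):
    theory = np.pi / 4 * (n - 1) + 1
    print(f"N = {n:4d}: simulated PBR {phase_only_pbr(n):7.1f}, pi/4 (N-1) + 1 = {theory:7.1f}")
```

The linear growth of the PBR with N is why large-format modulators are attractive for wavefront shaping.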

4.2. Applications in bioimaging

4.2.1. Imaging through scattering media

Similar to AO, wavefront shaping in highly turbid biological tissue can be applied to achieve high resolution imaging beyond scattering volumes. As discussed above, the principles are the same, so similar methodologies apply. However, there are some subtle differences. For example, for multiphoton imaging through a thin layer of brain tissue, we can expect the dominant aberration to be spherical aberration due to the effective refractive index mismatch.17 Using Zernike decomposition, we can therefore expect a considerable improvement in resolution by correcting a single Zernike mode, the spherical aberration term. After multiple scattering, however, a single Zernike mode no longer describes the aberrations effectively. The aberrations then take the form of a random phase distribution, which makes effective mode selection difficult; in other words, because of this randomness, no sparse basis can be found for the final aberration. On the other hand, since multiple scattering scrambles the relative importance of different modes, arbitrary orthogonal modes can be chosen for wavefront correction. One of the most popular choices, simply using the individual pixels of the wavefront modulator as the basis, works effectively as well. In this case the correction phase map can be found by stepping the phase of each pixel from 0 to 2π and selecting the optimum phase for each pixel. Because each pixel is scanned over its full phase range, there is no risk of becoming trapped in a local optimum. In contrast, when Zernike decomposition is used for aberration correction, defining an appropriate search range becomes more important. For example, at small imaging depths in a scattering sample, the correction map will probably be found within a small range of mode amplitudes, but the larger the imaging depth, the larger the effective amplitude range. Such a phase map can still be wrapped and expressed between 0 and 2π.
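The pixel-basis search described above can be sketched as a stepwise sequential loop. This is a minimal illustration under stated assumptions, not the procedure of any cited work: the medium is a hypothetical random transmission row `t_row` from the modulator pixels to the guide star, the guide-star feedback is the focal intensity, each pixel is stepped through `n_steps` phases in [0, 2π) while all other pixels are held at phase 0 as a fixed reference, and all optimal phases are applied together at the end. The names and the choice of eight phase steps are assumptions.

```python
import numpy as np

def stepwise_pixel_phases(t_row, n_steps=8):
    """Hypothetical feedback loop: step each pixel through n_steps phases in [0, 2*pi)."""
    n = t_row.size
    test_phases = 2 * np.pi * np.arange(n_steps) / n_steps
    best = np.zeros(n)
    for k in range(n):
        trial = np.zeros(n)                     # every other pixel stays at phase 0 (reference)
        feedback = []
        for p in test_phases:
            trial[k] = p
            feedback.append(np.abs(t_row @ np.exp(1j * trial)) ** 2)  # guide-star signal
        best[k] = test_phases[int(np.argmax(feedback))]
    return best                                 # already wrapped to [0, 2*pi)

rng = np.random.default_rng(0)
N = 256
t_row = (rng.normal(size=N) + 1j * rng.normal(size=N)) / np.sqrt(2)  # simulated medium
phases = stepwise_pixel_phases(t_row)
achieved = np.abs(t_row @ np.exp(1j * phases)) ** 2
ideal = np.sum(np.abs(t_row)) ** 2              # perfect phase conjugation
print(f"fraction of ideal intensity reached: {achieved / ideal:.3f}")
```

Each pixel is scanned over its whole phase range, so the search cannot stall in a local optimum; the cost is N × `n_steps` feedback measurements, which is what makes iterative methods slow for large N.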

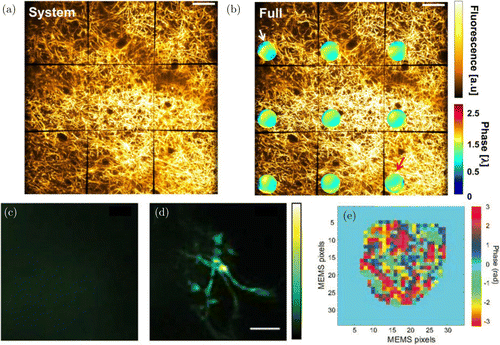

Examples of different levels of aberration in biological samples are shown in Fig. 3. When the level of aberration is small, using Zernike modes as the correction basis (Fig. 3(b)) elegantly reduces the number of degrees of freedom needed for efficient correction. As the level of aberration increases and we reach the multiple scattering regime (Fig. 3(e)), it becomes more difficult to define representative effective modes, and all controllable pixels become independent.
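The contrast between a sparse Zernike description and a pixel-wise description, together with phase wrapping, can be illustrated with a single mode. The sketch below is a hypothetical example, not taken from Fig. 3: it builds a correction map from the RMS-normalized primary spherical aberration term (Noll Z11, √5(6ρ⁴ − 6ρ² + 1)) with an assumed amplitude in waves, and wraps it into [0, 2π) as a phase-only modulator would display it. The amplitudes standing in for shallow and deeper imaging are illustrative assumptions.

```python
import numpy as np

def spherical_correction(amplitude_waves, n=256):
    """Correction map built from one Zernike mode (primary spherical, Noll Z11)."""
    y, x = np.mgrid[-1:1:n * 1j, -1:1:n * 1j]
    r2 = x**2 + y**2
    pupil = r2 <= 1.0
    z11 = np.sqrt(5) * (6 * r2**2 - 6 * r2 + 1)     # RMS-normalised over the unit disk
    unwrapped = 2 * np.pi * amplitude_waves * z11    # required phase delay in radians
    wrapped = np.mod(unwrapped, 2 * np.pi)           # what a phase-only modulator displays
    return unwrapped * pupil, wrapped * pupil, pupil

for a in (0.2, 2.0):                                 # shallow vs deeper imaging (hypothetical)
    unwrapped, wrapped, pupil = spherical_correction(a)
    ptv = (unwrapped[pupil].max() - unwrapped[pupil].min()) / (2 * np.pi)
    print(f"{a} waves RMS: peak-to-valley {ptv:.2f} waves, 1 coefficient vs {pupil.sum()} pixels")
```

The wrapped map imposes the same complex field as the unwrapped one, so a large Zernike amplitude only widens the coefficient search range; the single coefficient still replaces tens of thousands of independent pixel values.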

Because of these characteristics, correcting through highly scattering biological tissue requires measuring a large number of independent modes to correctly identify the effective aberration map.79 With iterative sensorless wavefront detection methods, a larger number of modes means a longer measurement time. This drawback has confined many wavefront shaping experiments to proof-of-principle settings rather than dynamic, living biological environments. To overcome this barrier, digital optical phase conjugation has been shown to be a viable way to increase the speed of wavefront shaping by several orders of magnitude.39,80,81,82 In digital optical phase conjugation, the distorted wavefront emitted from a guide star is measured holographically in a single shot. The phase conjugate of this wavefront is then sent back into the scattering medium using a wavefront modulator, and it automatically focuses back onto the guide star owing to time reversal symmetry. Since the measurement and playback of the correction wavefront are limited only by the signal to noise ratio and the camera speed, digital optical phase conjugation has been demonstrated on millisecond timescales, comparable to the decorrelation time of many biological tissues.35,83
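The measure-and-play-back cycle of digital optical phase conjugation can be sketched with a simulated medium. This is a minimal sketch under explicit assumptions: the medium is a hypothetical random matrix `T` from modulator pixels to positions inside the sample, reciprocity means the guide-star field reaching the modulator plane is the corresponding row of `T` (propagation through the transpose), and the field is recovered with four-step phase-shifting holography. Phase-shifting holography is swapped in here for simplicity; it needs four frames, whereas the single-shot measurement described above typically uses off-axis holography. All names and sizes are assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
N_SLM, N_TARGET = 512, 400

# Hypothetical forward transmission matrix: SLM pixels -> positions inside the sample
T = (rng.normal(size=(N_TARGET, N_SLM)) + 1j * rng.normal(size=(N_TARGET, N_SLM))) / np.sqrt(2)

def phase_shifting_hologram(field, ref_amp=5.0):
    """Recover a complex field from four interferograms with a stepped plane reference."""
    shots = [np.abs(field + ref_amp * np.exp(1j * th)) ** 2
             for th in (0, np.pi / 2, np.pi, 3 * np.pi / 2)]
    return ((shots[0] - shots[2]) + 1j * (shots[1] - shots[3])) / (4 * ref_amp)

def dopc_focus(guide_star):
    """Measure the guide-star wavefront, play back its phase conjugate, return intensities."""
    emitted = T[guide_star, :]                   # reciprocity: the SLM sees row m of T
    measured = phase_shifting_hologram(emitted)
    playback = np.exp(-1j * np.angle(measured))  # phase-only conjugate on the modulator
    return np.abs(T @ playback) ** 2             # intensity at all positions inside

focus = dopc_focus(7)
print("brightest position:", int(np.argmax(focus)))
```

Only one wavefront measurement is needed per guide star, instead of the N × (phase steps) measurements of an iterative search, which is the origin of the speed advantage.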

For specific configurations in which the highly scattering tissue is stationary, such as head-fixed mice, wavefront shaping has already shown potential to extend the limits of bioimaging. For example, functional imaging through the intact skull at subcellular resolution has been demonstrated by combining wavefront shaping with conventional multiphoton microscopy.84,85 However, in highly scattering tissue, spatial variations of the aberrations severely limit the effective corrected FOV. Extending the corrected FOV through highly scattering media is therefore one of the most important steps toward applying wavefront shaping in studies that can yield new biological discoveries, and it is under extensive study.

Fig. 3. Imaging through aberrating and highly scattering tissue. (a) Maximum intensity projection (MIP) of a 10μm thick volume of live brain tissue at 75μm depth, obtained using conventional multiphoton microscopy, (b) After simultaneous, independent aberration correction for 9 different areas of the FOV. Insets show the aberration maps for the respective areas.58 Scale bars 50μm, (c) MIP of a 20μm thick volume of brain tissue imaged through the intact skull with conventional multiphoton microscopy. No structure can be seen due to multiple scattering, (d) After sensorless wavefront shaping, fine structures of microglia are recovered and (e) Corresponding wavefront correction map. Each pixel of the wavefront modulator requires independent modulation due to the randomness induced by multiple scattering.85 Figures (a)–(b) reprinted with permission from Ref. 58 and (c)–(e) from Ref. 85.

4.2.2. Endoscopic applications

Current limitations in penetration depth have driven innovations in endoscopic imaging. Functional imaging and continuous monitoring of the brain in behaving animals have previously been realized using special endoscopic probes, such as fiber bundles86 and GRIN lenses.87,88,89 However, because of their physical size, these probes are still inevitably invasive: they create tissue lesions that can cause inflammation and gliosis, which in turn can affect the physiology of neuronal networks and the behavior of the animals. Reducing the size of the imaging elements does not come for free either. For example, fiber bundles suffer from severe pixelation and low resolution, as each pixel is defined by an individual fiber in the bundle. Increasing the sampling density requires adding more fibers, which increases the size of the bundle. GRIN lenses provide continuous (unpixelated) images but suffer from severe aberrations and uneven image quality across the FOV.

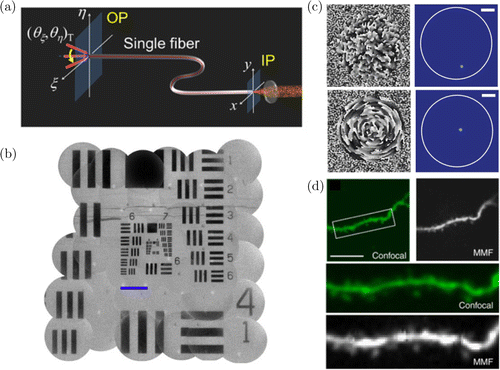

Considering the limitations of fiber bundles and GRIN lenses, multimode fibers are an attractive alternative for endoscopic imaging. A single multimode fiber can have a diameter of a few tens of μm, making it much less invasive than other imaging probes. However, conventional multimode fibers cannot directly transmit images, because they scramble the input wavefront into an output speckle pattern: a single input mode is coupled into the many different modes supported by the fiber. This is exactly what happens in multiple scattering. Therefore, wavefront shaping can be used to calibrate a multimode fiber and turn this mode scrambler into a high resolution imaging system90,91,92 (Fig. 4). By shaping the incident wavefront, diffraction limited foci can be generated at arbitrary positions beyond the distal end of the fiber, just as when focusing through multiple scattering media. Using this approach, recent work has demonstrated high resolution fluorescence imaging of subcellular neural structures as well as functional brain activity in living mice93 while reducing the tissue lesion volume by more than 100-fold.
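Transmission matrix calibration of a multimode fiber, and its use both for unscrambling images and for focusing, can be sketched as follows. This is a toy model under stated assumptions, not the procedure of the cited works: the fiber is a hypothetical lossless mode scrambler represented by a random unitary matrix (from a QR decomposition of a complex Gaussian matrix), the output fields are assumed to be measured perfectly (for example holographically), and the calibration launches each basis input in turn. Because the toy matrix is unitary, its conjugate transpose inverts it; real fibers are lossy and noisy, so regularized inversion is generally required. All names and sizes are assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
N = 256                                            # number of fibre modes (hypothetical)

# Hypothetical lossless fibre: random unitary matrix from the QR decomposition of a
# complex Gaussian matrix; it maps distal-end pixels to proximal camera pixels.
q, r = np.linalg.qr(rng.normal(size=(N, N)) + 1j * rng.normal(size=(N, N)))
fibre = q * (np.diag(r) / np.abs(np.diag(r)))

def calibrate(send_and_measure, n):
    """Launch each basis input in turn and stack the measured output fields as columns."""
    basis = np.eye(n, dtype=complex)
    return np.stack([send_and_measure(basis[:, k]) for k in range(n)], axis=1)

tm = calibrate(lambda e_in: fibre @ e_in, N)       # assumes ideal field detection

obj = np.zeros((16, 16))                           # unknown object at the distal end
obj[4:12, 7:9] = 1.0
obj[7:9, 4:12] = 1.0
speckle = fibre @ obj.ravel()                      # scrambled field recorded at the camera
image = (tm.conj().T @ speckle).reshape(16, 16)    # unscramble with the conjugate transpose

# Focusing instead: phase-conjugating row m of the matrix puts a focus on output pixel m.
m = 100
focus = np.abs(tm @ np.exp(-1j * np.angle(tm[m]))) ** 2
```

Scanning `m` across the output pixels and recording fluorescence at each position is the focus-scanning mode of imaging; inverting the matrix is the widefield mode.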

Fig. 4. Imaging through multimode fibers. (a) A multimode fiber scrambles input modes into multiple output modes in analogy with turbid media. Therefore, the transmission matrix connecting the input and output modes of the fiber can be calibrated, (b) The transmission matrix can be used to transform a multimode fiber into a widefield microscope.90 Scale bar 100μm, (c) The transmission matrix information can also be used to focus light to specific positions beyond the multimode fiber.81 Scale bar 20μm (Left: Phase of incident wavefront. Right: Obtained focus after propagation through multimode fiber) and (d) Scanning the focus can be used to realize fluorescence imaging. Comparison between confocal microscope and multimode fiber imaging in a living mouse brain.93 Scale bar 20μm. Figures (a)–(b) reprinted with permission from Ref. 90, (c) from Ref. 81, and (d) from Ref. 93.

4.2.3. Various guide stars

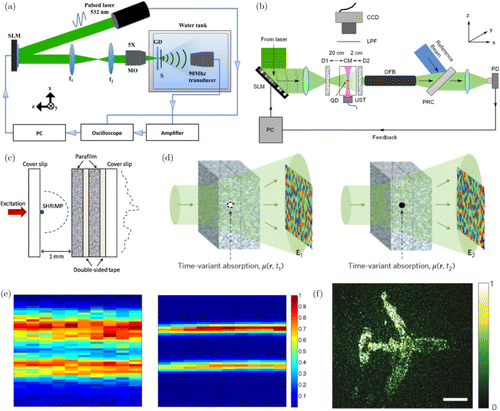

Recent developments in wavefront shaping have shown that new types of guide stars can also be generated. For example, instead of fluorescence, light absorption can be exploited: light-absorbing targets within tissue serve as guide stars, and the resulting photoacoustic signals provide the feedback (measuring sound rather than light)94,95,96 (Fig. 5(a)). In another approach, sound was used actively to generate arbitrary guide stars simply by moving the position of an ultrasonic focus97,98 (Fig. 5(b)). Since ultrasound undergoes much less aberration and scattering than light, an ultrasound focus can be generated within biological tissue relatively easily using commercial ultrasound transducers. The focused ultrasound shifts the frequency of the light passing through the focus, and this frequency-shifted light can be selectively detected using holography (measuring sound-tagged light). The ultrasound focus therefore acts as a virtual guide star that can be readily moved to different positions within biological tissue.
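How holographic detection isolates only the sound-tagged light can be illustrated with a toy time-domain model. This sketch rests on several assumptions, none of which come from the cited works: fields are written in a frame rotating at the optical carrier, so untagged light is static and tagged light oscillates at the ultrasound frequency `f_us`; the reference beam is detuned by the same `f_us` and stepped through four phases; and each camera frame averages over an exposure spanning an integer number of ultrasound periods. Under these conditions the beat between untagged light and the reference averages out, and four-step phase-shifting recovers the tagged field alone. The 2 MHz frequency, 1 ms exposure, and amplitudes are hypothetical values.

```python
import numpy as np

def tagged_field(untagged, tagged, f_us, exposure, fs, ref_amp=1.0):
    """Recover the ultrasound-tagged field with a reference detuned by f_us (4-step PSI).

    Fields are written in a frame rotating at the optical carrier, so the untagged light is
    static and the tagged light oscillates at f_us. The camera averages over `exposure`.
    """
    t = np.arange(int(round(exposure * fs))) / fs
    signal = untagged + tagged * np.exp(2j * np.pi * f_us * t)
    frames = []
    for theta in (0, np.pi / 2, np.pi, 3 * np.pi / 2):
        ref = ref_amp * np.exp(1j * (2 * np.pi * f_us * t + theta))  # frequency-shifted reference
        frames.append(np.mean(np.abs(signal + ref) ** 2))           # camera exposure
    return ((frames[0] - frames[2]) + 1j * (frames[1] - frames[3])) / (4 * ref_amp)

untagged = 1.0 * np.exp(0.7j)     # strong light that missed the ultrasound focus
tagged = 0.01 * np.exp(-1.3j)     # weak light that passed through the focus
est = tagged_field(untagged, tagged, f_us=2e6, exposure=1e-3, fs=50e6)
print(abs(est), np.angle(est))
```

The recovered field is the wavefront of light originating from the ultrasound focus, which can then be phase conjugated in the same way as a physical guide star.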

Coherent signals, rather than fluorescence, have also been shown to be efficient guide stars. For low levels of aberration, Shack–Hartmann wavefront sensors perform well and give accurate wavefront measurements using fluorescent light. However, when light from a fluorescent guide star undergoes multiple scattering, each microlens of the Shack–Hartmann sensor no longer sees a single angular tilt but a combination of multiple tilts. The wavefront measurements then become inaccurate, which has limited the use of Shack–Hartmann-based direct wavefront sensing in the multiple scattering regime. To overcome this issue, it was shown that second harmonic generation from so-called Second Harmonic Radiation Imaging Probes (SHRIMPs) can be used to generate the feedback signal99,100 (Fig. 5(c)). Since second harmonic generation is a coherent process, the signal field can be measured holographically using a reference at the same (second harmonic) wavelength, allowing the aberrated wavefront to be mapped. This approach has the additional advantage that the excitation wavelength differs from that of the second harmonic signal, so the light emitted from the guide star can be detected exclusively, without crosstalk from the illumination beam.
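Why a Shack–Hartmann lenslet fails under multiple scattering can be seen in a one-dimensional toy model. In this hypothetical sketch, the spot behind one lenslet is modeled as the squared magnitude of the zero-padded Fourier transform of the field across its subaperture. A single local tilt produces one spot whose position encodes the tilt; a field made of several tilts, as after multiple scattering, splits the energy into several spots, so neither the brightest spot nor a centroid represents a single local slope. The subaperture size, padding, and the chosen tilts are assumptions for illustration.

```python
import numpy as np

def lenslet_spot(subaperture_field, pad=8):
    """Far-field intensity behind one lenslet (1D toy): |FFT of the zero-padded subaperture|^2."""
    n = subaperture_field.size
    spot = np.abs(np.fft.fft(subaperture_field, n * pad)) ** 2
    return spot / spot.sum()

n, pad = 32, 8
x = np.arange(n)
# Single local tilt of 3 cycles across the subaperture -> one spot at FFT bin 3 * pad
single = np.exp(2j * np.pi * 3 * x / n)
# Multiply scattered light: several tilts with random phases reach the same lenslet
rng = np.random.default_rng(3)
tilts = [-6, -2, 1, 3, 7]
scattered = sum(np.exp(2j * np.pi * (k * x / n + rng.random())) for k in tilts)

for name, f in (("single tilt", single), ("scattered", scattered)):
    spot = lenslet_spot(f, pad)
    print(name, "peak bin:", int(np.argmax(spot)), "energy in brightest bin:", round(spot.max(), 3))
```

A holographic (interferometric) measurement of a coherent guide-star signal avoids this ambiguity because it records the full complex field rather than a per-lenslet tilt.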

Another recent approach demonstrated that the dynamics of absorbing particles can also be used to detect wavefront distortion101 (Fig. 5(d, f)). For example, when a light-absorbing particle is present within a turbid medium, part of the light propagating through the medium is absorbed at the position of the particle. If the particle is removed, the same incident light passes through the medium without this absorption. Therefore, by subtracting the fields transmitted beyond the turbid medium in the two cases, the field originating from the position of the absorbing particle can be isolated; that is, the aberrations imposed on light emanating from that position can be identified. By playing back the phase conjugate of the measured field, light can be focused inside the turbid medium exactly where the absorbing particle was. However, endogenous absorbing particles that move with predictable dynamics are hard to find in practical applications. To solve this problem, microbubbles that can be destroyed (popped) at arbitrary target positions using focused ultrasound were recently demonstrated.102 However, as this method relies on injecting exogenous microbubbles, it is currently restricted to the bloodstream and is also limited by the large size of the ultrasound focus.
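The subtraction-and-playback idea can be sketched with a simple two-matrix model of the medium. This is a hypothetical, first-order model rather than the published implementation: matrix `A` carries the incident light to points inside the medium, matrix `B` carries light from those points to a camera beyond the medium, and the absorber only attenuates the field at its own location (its effect on further multiple scattering is ignored). The difference of the two transmitted fields is then proportional to the column of `B` belonging to the absorber position, and by reciprocity, light sent back from the camera side reaches the inside through the transpose of `B`. All names, sizes, and the absorption fraction are assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
N_IN, N_INSIDE, N_OUT = 256, 200, 400

def cgauss(*shape):
    """Circular complex Gaussian random array with unit variance."""
    return (rng.normal(size=shape) + 1j * rng.normal(size=shape)) / np.sqrt(2)

A = cgauss(N_INSIDE, N_IN)    # hypothetical: illumination modes -> points inside the medium
B = cgauss(N_OUT, N_INSIDE)   # hypothetical: points inside -> camera pixels beyond the medium

def transmitted(e_in, absorber=None, absorption=0.5):
    """Field on the camera; the absorber attenuates the local field at one interior point."""
    inside = A @ e_in
    if absorber is not None:
        inside[absorber] *= 1 - absorption
    return B @ inside

target = 37
e_in = cgauss(N_IN)                                               # any fixed incident wavefront
delta = transmitted(e_in, absorber=target) - transmitted(e_in)    # field from the absorber
playback = np.exp(-1j * np.angle(delta))          # phase-only conjugate on the camera side
inside_intensity = np.abs(B.T @ playback) ** 2    # reciprocity: camera -> inside is B^T
print("brightest interior point:", int(np.argmax(inside_intensity)))
```

The incident wavefront drops out of the result except as an overall complex factor, which is why any fixed illumination works as long as some light reaches the absorber.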

Fig. 5. Various types of guide stars. (a) Photoacoustic signals from absorbing particles used as feedback,101 (b) Ultrasound focus used as virtual feedback to tag light passing through the ultrasound focus,98 (c) Using SHRIMPs for generation of second harmonic signals for feedback,99 (d) Principle of using time-variant absorption for wavefront measurements,102 (e) Left: Images of two capillaries filled with absorptive ink through a diffuser. Right: After using photoacoustic-based feedback for wavefront shaping101 and (f) Using time variant absorption for phase conjugation through highly scattering media.102 Figure (a) reprinted with permission from Ref. 101, Fig. (b) from Ref. 98, Fig. (c) from Ref. 99, Fig. (d) and (f) from Ref. 102, and (e) from Ref. 101.

5. Conclusions and Outlook

Although a variety of imaging technologies have been developed during the past century, optical imaging still stands out with unique advantages in spatiotemporal resolution, molecular sensitivity, and noninvasiveness. Owing to strong light-matter interactions, different subcellular species can be identified with ease using a diverse palette of contrast mechanisms, which cannot be achieved with other regions of the electromagnetic spectrum or with other types of waves in general. However, the biggest limitation of light microscopy is its limited penetration depth. Compared with imaging modalities widely used in medical settings, such as MRI, X-ray imaging, or ultrasound, its penetration depth is shallower by several orders of magnitude.103

OCT is a representative optical imaging technique that has found an important role in medical diagnosis. OCT revolutionized ophthalmology soon after its invention because it enabled fast, high resolution 3D imaging of the eye, which was previously impossible. This was possible because the eye is a special organ that has evolved to be transparent. Yet even for imaging the eye, AO–OCT was crucial for reaching single-photoreceptor resolution because of the inherent aberrations of the human eye.104 This example suggests that if the penetration depth of other optical imaging modalities can be extended, many fields of research and medicine could be revolutionized.

AO was first demonstrated in bioimaging in the late 1990s, and in 2007 wavefront shaping first showed that the principles of AO can be extended into the diffusive regime.79 Although AO and wavefront shaping in optical imaging are relatively young fields, they have already proved beneficial for practically all existing imaging modalities, including confocal, multiphoton, brightfield, OCT, lightsheet, STED, STORM, and Raman imaging, to name a few. Since time reversal applies to all waves, developments in AO and wavefront shaping can be carried over to other fields as well. In fact, many of the founding works that became the basis of optical wavefront shaping were first conducted in acoustics.105,106

As the boundary between aberrations and multiple scattering is not strictly defined, many important bioimaging applications will require innovative approaches that combine AO and wavefront shaping. For example, the optical memory effect, which originated in mesoscopic physics to describe the angular correlation of multiply scattered light, was recently extended to highly forward scattering media such as biological samples to describe translational correlations, which are more useful for imaging inside biological tissue.107,108 As another example, inspired by multi-conjugate AO in astronomy,109,110 it was recently demonstrated that conjugating the correction plane to concentrated layers of highly scattering biological tissue can also enlarge the corrected FOV.85,111

As the importance of observing the living functions of organisms in their native 3D environment becomes more evident, all bioimaging modalities will continue to aim for higher spatiotemporal resolution inside living tissue. The only fundamental limitation blocking this goal is aberrations and multiple scattering. Since AO and wavefront shaping hold the key to overcoming this barrier, we believe the field will continue to grow in step with its importance. Once the issues of wavefront measurement time and limited corrected FOV are solved, these techniques will likely become an integral part of any imaging system, similar to the correction rings that are now standard on high NA objective lenses. As achieving this goal will require advances in all of the related fields (guide star generation, wavefront measurement, and wavefront control), interdisciplinary work between biochemists, engineers, physicists, and biologists will be important in driving new and exciting developments.

Acknowledgments

This work was supported by the National Research Foundation of Korea (Nos. 2016R1C1B201530 and 2017M3C7A1044966), the Agency for Defense Development (UD170075FD), and the TJ Park Foundation.