Visualizing veins from color skin images using convolutional neural networks

Abstract

Intravenous cannulation is one of the most important procedures in medical practice. Currently, limited literature is available on the visibility of veins and the characteristics of patients associated with difficult intravenous access. In modern medical treatment, a major challenge is locating veins in patients who have difficult venous access. Several vein locator products are available on the market to improve vein access, but they need auxiliary equipment such as near infrared (NIR) illumination and a camera, which adds weight and cost to the devices and causes inconvenience in daily medical care. In this paper, a vein visualization algorithm based on deep learning is proposed. Based on a group of synchronous RGB/NIR arm images, a convolutional neural network (CNN) model was designed to implement the mapping from RGB to NIR images, in which veins can be distinguished from skin. The model has a simple structure and few parameters to optimize. A color transfer scheme was also proposed to adapt the network to images taken by smartphones in daily medical treatment. Comprehensive experiments were conducted on three datasets to evaluate the proposed method. Subjective and objective evaluations showed the effectiveness of the proposed method. These results indicate that the deep learning-based method can be used for visualizing veins in medical care applications.

1. Introduction

Intravenous cannulation is the most important procedure in many medical treatments. A cannula is inserted into the peripheral veins of the hand dorsum or forearm for injecting fluid, maintaining hydration, parenteral nutrition, administering blood, chemotherapy, and administering drugs.1 According to statistical studies, around 80% of hospitalized patients need intravenous cannulation for blood sampling or medication injection.2,3 Presently, this procedure is performed manually. To localize veins, a qualified nurse or other medical staff member inspects the targeted site on the patient’s skin and/or palpates it with the fingers. For patients with deep veins, a dark skin tone, or hair on the skin, vein localization is a challenging task. In the case of elderly or dehydrated patients, the task becomes much tougher.4 In the USA, more than 400 million intravenous cannulations are performed every day, with a success rate of about 72.5% in the first attempt.5 Failed cannulation results in regional pain, blood clotting, allergy, extravasation, or damage to veins.6,7 Medication can leak into neighboring tissues and cause redness and swelling, and can sometimes be toxic. Multiple cannulation attempts usually cause not only pain but also anxiety in patients, especially children.8,9 They also affect the self-confidence and performance of medical staff.

From December 2019, a crisis caused by a novel coronavirus pneumonia spread across China and many other countries. In January 2020, thousands of patients flocked to hospitals in Wuhan in a very short time. It was urgent to give the patients timely testing and treatment. For protection against the highly contagious virus, medical staff had to wear medical goggles and two pairs of surgical gloves, which made localizing veins for intravenous cannulation even more difficult. Many nurses had to guess the locations of veins based on their experience. Therefore, technologies which visualize veins readily and promptly can significantly benefit medical treatment, especially in emergency cases.

2. Related Work

Currently, several vein locator products are available on the market to improve vein access. Imaging devices such as positron emission tomography (PET), computed tomography (CT) and magnetic resonance imaging (MRI) scanners can localize veins, but they are not suitable for daily intravenous cannulation in clinics and hospitals because of their large size and high cost. Other commonly used devices can be divided into three groups. The first group makes use of near infrared light and a camera.10 It works on the principle that hemoglobin in blood has lower absorption in the spectral range of 740–940 nm. Based on their different reflection and absorption, skin tissues can be differentiated from veins.11 Products in this group include Veinlite (Warrior Edge, LLC), Luminetx VeinViewer (Luminetx), the Veinsite hands-free system (VueTek Scientific), and so on. These products can be applied repeatedly without any harmful effects to the subject. The second group is based on the trans-illumination technique, in which a single wavelength or a combination of wavelengths from the visible range of the electromagnetic spectrum is transmitted through skin tissues to visualize the veins beneath the skin.12,13,14,15,16 Blood usually absorbs more light than the surrounding tissues and hence appears darker. Products in this group include Venoscope® (Venoscope), Wee Sight® (Children’s Medical Ventures), and Vein Locator® (Sharn Inc.). The major drawback of this technique is that it requires contact with the skin, and burns may occur due to the high intensity of illumination. The third group, photoacoustic tomography, combines optical and ultrasound subsystems. The skin is irradiated with light; it absorbs the incident energy and its temperature rises on the order of millikelvins for a short period of time, which produces acoustic waves from the skin tissue. An ultrasound detector captures the resulting acoustic radiation and forms an image which may contain information about veins. It is safe to apply multiple times. However, it needs larger and more complex equipment, so the operating procedures are difficult and costly.17,18,19

A common problem with the aforementioned technologies is that they need auxiliary equipment, such as near infrared light and a camera or optical and ultrasound subsystems, which adds weight and cost to the devices and causes inconvenience in daily medical care. Some technologies based on image processing have recently been proposed to solve this problem. Tang et al.20 proposed a vein uncovering algorithm based on optics and skin biophysics. The inverse process of skin color formation in an image was modeled and the spatial distributions of biophysical parameters were derived from color images, where vein patterns can be observed. In Ref. 21, they took the hypodermis into consideration and further improved the optical model of skin. A more accurate model of radiation transfer, the Reichman equation based on the Schuster–Schwarzschild approximation, was also employed to replace the K-M model. A common problem with these optical methods is that they are pixel-wise algorithms, and no neighboring information is taken into consideration. Therefore, considerable noise can be observed in the results. Tang et al.22 also proposed an algorithm based on image mapping to visualize vein patterns from color images. It extracts information from a pair of synchronized color and near infrared (NIR) images, and uses a three-layered feed-forward neural network (NN) with five neurons in the hidden layer to map RGB values to NIR intensities. Since only one pair of color/NIR images was utilized, the uncovering performance was not very satisfactory.

Song et al.23 proposed a vein visualization method based on multispectral Wiener estimation. A conventional RGB camera on a commercial smartphone was used to acquire reflectance information from veins. Wiener estimation was then applied to extract the multispectral information from the veins. In this method, a color calibration was necessary for the specific illumination, which affects the performance. Watanabe et al.24 proposed a method that visualized veins by emphasizing the saturation of color information in an image. It can achieve good results on dorsal veins since the skin on the hand is usually very thin, but no experiments on skin of other body parts were reported. Ma et al.25 proposed a generative adversarial network for uncovering veins from RGB images. Inspired by dual learning and work on inter-collection translation, it let two generators learn from an RGB-NIR dataset simultaneously. However, the accuracy of the model cannot be guaranteed since its loss function lacked a constraint on vein locations.

In recent years, deep learning methods have been demonstrated to outperform traditional feature-engineering approaches significantly in image processing and pattern recognition areas. In this paper, a convolutional neural network (CNN)-based vein visualization algorithm is presented. To the best of our knowledge, it is the first time that a CNN model is utilized to uncover veins from color skin images. The rest of this paper is organized as follows. Section 3 describes the CNN frameworks we designed. Section 4 reports and discusses the experimental results. Section 5 concludes the findings.

3. Methodology

The key idea of deep learning-based image processing is a nonlinear mapping based on regression. It is this nonlinear mapping that enables the learning model to achieve an appropriate representation for the relationship between different image domains. Among all deep learning methods, CNN is the most widely used architecture in the area of image processing, since it takes spatial relationship into consideration. We propose a CNN-based algorithm for visualizing veins from color images. In this section, we will introduce the method in detail.

3.1. Patch extraction and data representation

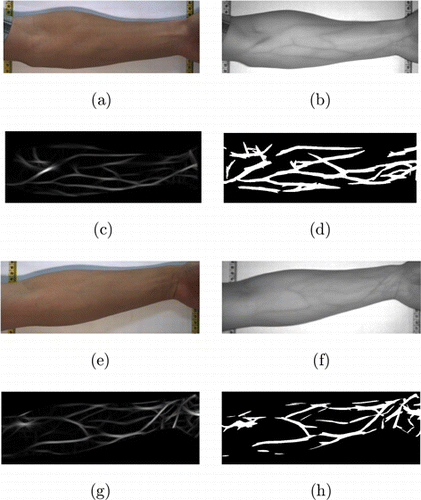

As a supervised method, a CNN model must be trained to perform a specific task. To utilize prior information to uncover veins from color images, we used a JAI AD-080-CL camera to take synchronized RGB/NIR images of arm skin from 24 subjects. It is a 2-CCD multi-spectral prism camera which provides simultaneous images of different light spectra through a single optical path. The camera splits incoming light into two separate channels: a visible color channel from 400 nm to 700 nm and a NIR channel from 750 nm to 900 nm.26 A pair of RGB/NIR arm images is shown in Figs. 1(a) and 1(b), respectively. Since hemoglobin in blood absorbs light especially strongly in the NIR spectrum, veins are visible in NIR images. Our CNN model tries to learn the nonlinear mapping between the RGB and NIR image domains.

Fig. 1. Extracting veins from NIR images. (a) and (b) are a pair of RGB/NIR arm images; (c) is the enhanced vein image obtained from the Gabor filtered result of (b); (d) is the binarized result from (c) using Otsu’s method; (e–h) are another set of examples.

To locate veins, we apply a filter bank composed of the real parts of 16 Gabor filters with different scales and orientations to the NIR images.21 Only the real parts of the Gabor filters are used because veins are dark ridges in NIR images. The real part of a Gabor filter in the spatial domain is defined as

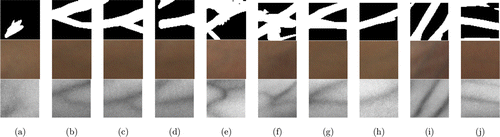

The enhanced vein images are then binarized using Otsu’s method.27 Figure 1(c) is the enhanced vein image obtained from Fig. 1(b). Figure 1(d) is the binarized result, and the white lines are the veins. Figures 1(e)–1(h) are another set of examples. In this way, we obtain 20 groups of RGB/NIR/enhanced vein images. With a pixel on a vein as the center, a 65×65 image patch can be cut from the RGB image, the NIR image, and the enhanced vein image, respectively. Sliding the center along the vein lines, a total of 430,000 patch triples are obtained and form a training dataset. Figure 2 shows some patches in the training dataset, where each column of (a)–(j) is a triple. The first row shows patches cut from the binarized vein images. The second and third rows are the corresponding RGB and NIR image patches, respectively.

Fig. 2. Extracting patches for CNN training. Each column is a triple of patches. The first row shows patches cut from the binarized vein images. The second and third rows are the corresponding RGB and NIR image patches, respectively.
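The enhancement and binarization steps described above can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: the kernel uses the generic textbook form of the real Gabor part, the split into 2 scales × 8 orientations, the `sigma` and `wavelength` values, and the sign convention (negating the response so that dark ridges become positive) are all hypothetical choices made for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gabor_real(sigma, wavelength, theta, gamma=1.0):
    """Real (cosine) part of a 2-D Gabor kernel in its textbook form (assumed)."""
    half = int(3 * sigma)
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2.0 * sigma ** 2))
    kernel = envelope * np.cos(2.0 * np.pi * x_r / wavelength)
    return kernel - kernel.mean()  # zero mean: flat skin gives no response


def build_bank(scales=((2.0, 6.0), (3.0, 9.0)), n_orientations=8):
    """16 kernels = 2 (sigma, wavelength) scales x 8 orientations (assumed split)."""
    thetas = [k * np.pi / n_orientations for k in range(n_orientations)]
    return [gabor_real(s, w, t) for (s, w) in scales for t in thetas]


def filter_same(image, kernel):
    """Same-size correlation with edge padding (kernel is point-symmetric)."""
    half = kernel.shape[0] // 2
    padded = np.pad(image, half, mode="edge")
    windows = sliding_window_view(padded, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def enhance_veins(nir, bank):
    """Strongest dark-ridge response over the bank, clipped at zero."""
    responses = np.stack([-filter_same(nir, k) for k in bank])
    return np.clip(responses.max(axis=0), 0.0, None)


def otsu_threshold(values, bins=256):
    """Otsu's method: pick the split that maximizes between-class variance."""
    hist, edges = np.histogram(values.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist).astype(float)
    w1 = w0[-1] - w0
    cum = np.cumsum(hist * centers)
    mu0 = cum / np.maximum(w0, 1.0)
    mu1 = (cum[-1] - cum) / np.maximum(w1, 1.0)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return edges[np.argmax(between) + 1]


def binarize(enhanced):
    """Boolean vein mask: True where the enhanced response passes the threshold."""
    return enhanced >= otsu_threshold(enhanced)
```

On a synthetic image with one dark vertical stripe on a bright background, `binarize(enhance_veins(nir, build_bank()))` marks the stripe and leaves the flat background empty. Real NIR images would likely need the scales tuned to the vein width in pixels.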

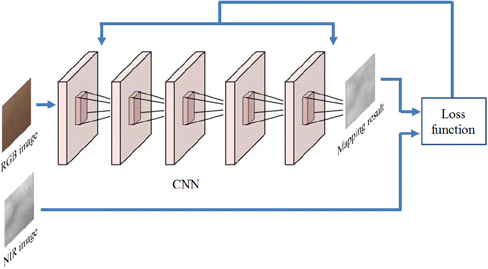
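The patch extraction of Sec. 3.1 can be illustrated with the following sketch. The function name `extract_patch_triples`, the `step` parameter, and the rule of skipping centers closer than half a patch to the image border are assumptions made for illustration; the text does not state how border pixels were handled.

```python
import numpy as np


def extract_patch_triples(rgb, nir, vein_mask, patch=65, step=1):
    """Cut (vein, RGB, NIR) patch triples centered on vein pixels.

    rgb:       H x W x 3 color image
    nir:       H x W near-infrared image
    vein_mask: H x W boolean image, True on veins
    Centers too close to the border are skipped (assumed behavior).
    """
    half = patch // 2
    height, width = vein_mask.shape
    ys, xs = np.nonzero(vein_mask)
    triples = []
    for y, x in zip(ys[::step], xs[::step]):
        if half <= y < height - half and half <= x < width - half:
            window = (slice(y - half, y + half + 1),
                      slice(x - half, x + half + 1))
            triples.append((vein_mask[window], rgb[window], nir[window]))
    return triples
```

Each triple keeps the three patches aligned on the same pixel window, which is what allows the RGB patch to serve as the network input and the NIR patch as its target.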

3.2. Network architecture and optimization

A typical CNN model is composed of three types of layers: convolutional layers, pooling layers, and fully connected layers. Pooling and fully connected layers are used for dimension reduction, so they are not included in our vein visualization model. We design a model which has five convolutional layers. The first layer is expressed as an operation F1 which convolves the input image with a set of filters:

| Name | Number of outputs | Kernel size | Pad size | Stride |
|---|---|---|---|---|
| Conv1 | 192 | 5 | 2 | 1 |
| Conv2 | 256 | 3 | 1 | 1 |
| Conv3 | 256 | 3 | 1 | 1 |
| Conv4 | 256 | 3 | 1 | 1 |
| Conv5 | 128 | 3 | 1 | 1 |
| Gen_image | 3 | 5 | 2 | 1 |

Fig. 3. Illustration of the proposed CNN model.
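As a quick check of the layer table, the sketch below walks through the listed layers and computes the spatial output size and the number of learnable parameters of each. It assumes a 3-channel RGB input, square kernels, one bias per output channel, and a 65×65 input patch as in Sec. 3.1; the names `LAYERS`, `conv_output_size` and `summarize` are illustrative only.

```python
# (name, number of outputs, kernel size, pad size, stride), copied from the table
LAYERS = [
    ("Conv1", 192, 5, 2, 1),
    ("Conv2", 256, 3, 1, 1),
    ("Conv3", 256, 3, 1, 1),
    ("Conv4", 256, 3, 1, 1),
    ("Conv5", 128, 3, 1, 1),
    ("Gen_image", 3, 5, 2, 1),
]


def conv_output_size(size, kernel, pad, stride):
    """Spatial size after a convolution with the given kernel, padding and stride."""
    return (size + 2 * pad - kernel) // stride + 1


def summarize(input_size=65, input_channels=3):
    """Return (name, output size, output channels, parameter count) per layer."""
    rows = []
    size, channels = input_size, input_channels
    for name, out_channels, kernel, pad, stride in LAYERS:
        size = conv_output_size(size, kernel, pad, stride)
        params = kernel * kernel * channels * out_channels + out_channels
        rows.append((name, size, out_channels, params))
        channels = out_channels
    return rows


if __name__ == "__main__":
    for row in summarize():
        print(row)
    print("total parameters:", sum(r[3] for r in summarize()))
```

Because every layer uses stride 1 and a pad size of (kernel size − 1)/2, the spatial size stays at 65×65 throughout, which is consistent with leaving out pooling layers. Under the stated assumptions the parameter count comes to roughly 1.9 million.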

3.3. Loss function and training

Learning the nonlinear mapping function between the RGB and NIR domains requires estimating the CNN model parameters Θ={Wi,Wv,bi,bv}. They are estimated by minimizing the loss between the visualized image F(X;Θ) and the corresponding NIR image Y, which is regarded as the ground truth of vein distribution. Given a set of synchronized RGB/NIR image pairs {Xj} and {Yj}, the mean squared error (MSE) is used as the loss function.
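For reference, a standard form of the MSE loss written in the notation above is given below. The symbol n (the number of training pairs) is an assumption introduced here, and the normalization constant may differ from the one actually used, for example by a factor of 1/2.

```latex
L(\Theta) = \frac{1}{n} \sum_{j=1}^{n} \left\lVert F(X_j;\Theta) - Y_j \right\rVert^{2}
```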

The loss function is minimized using stochastic gradient descent with standard back propagation.28 The filter weights of each layer are initialized by drawing randomly from a Gaussian distribution with zero mean and standard deviation 0.01, and the biases are initialized to 0. The initial learning rate is 10^-6, the momentum is 0.1, and the weight decay is 0.1. The learning rate is dropped in “steps” every 10,000 iterations, with a learning rate decay parameter γ of 0.1. The CNN model is trained for 50,000 iterations with a batch size of 16 images.
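The stepped learning-rate schedule can be made concrete with a short sketch. It assumes the usual "step" rule, in which the rate is multiplied by γ once per completed block of iterations; the constant and function names are illustrative, and the values are those quoted in the text.

```python
BASE_LR = 1e-6        # initial learning rate
GAMMA = 0.1           # learning rate decay parameter
STEP_SIZE = 10_000    # iterations between drops
MAX_ITER = 50_000     # total training iterations


def step_learning_rate(iteration, base_lr=BASE_LR, gamma=GAMMA, step_size=STEP_SIZE):
    """Learning rate at a given iteration under a 'step' decay policy."""
    return base_lr * gamma ** (iteration // step_size)


if __name__ == "__main__":
    for it in range(0, MAX_ITER, STEP_SIZE):
        print(it, step_learning_rate(it))
```

With these settings the rate is cut by a factor of ten four times during training, so the last 10,000 iterations run at a rate four orders of magnitude below the initial one.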

3.4. A color transfer scheme for images taken by smartphone

For real-time medical treatment, a color skin image is usually acquired via the built-in camera on a commercial smartphone. Because of different lighting conditions and camera properties, the images taken by a smartphone may be very different from those taken by the JAI camera, which provided the training samples of our CNN model. This may affect the performance of the method. To adapt the model to images taken by a smartphone, we propose a neural network to transfer the colors of skin images.

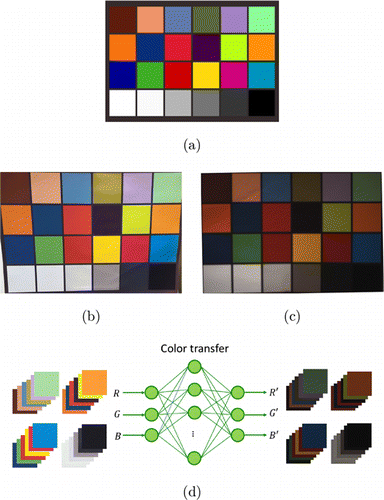

A Color Checker chart is a cardboard-framed arrangement of 24 squares of painted samples based on Munsell colors. It is widely used to manually adjust color parameters (e.g., color temperature) to achieve a desired color rendition. We used the JAI camera and a smartphone (Huawei P20) to take images of the Color Checker, respectively. Figures 4(a)–4(c) correspond to a Color Checker, the image taken by the Huawei P20, and the image taken by the JAI camera. From each of the two images, we cut a 64×64 block from each of the 24 color squares. In total, 48 color blocks are collected. We used the RGB values of the color blocks from the Huawei P20 as inputs, and those from the JAI camera as target outputs, to train a three-layered feed-forward neural network. The transfer functions in the hidden and output layers are, respectively, tan-sigmoid and linear functions. The scheme is shown in Fig. 4(d). The network is trained with the Levenberg–Marquardt back-propagation algorithm.

Fig. 4. A color transfer scheme. (a) is the Color Checker; (b) is the image of the Color Checker taken by a Huawei P20 smartphone; (c) is the image of the Color Checker taken by the JAI camera; (d) is the neural network used in the scheme.
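A minimal sketch of such a color transfer network is given below. It keeps the structure stated above (three layers, tan-sigmoid hidden units, linear outputs) but, to stay self-contained in NumPy, it swaps the Levenberg–Marquardt algorithm for plain batch gradient descent. The hidden size of 10, the learning rate, the number of epochs, and the assumption that RGB values are scaled to [0, 1] are all hypothetical choices, not values from the paper.

```python
import numpy as np


def init_params(hidden=10, seed=0):
    """Random weights for a 3 -> hidden -> 3 network (hidden size is assumed)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.5, (3, hidden)), "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 0.5, (hidden, 3)), "b2": np.zeros(3),
    }


def forward(params, rgb):
    """tanh hidden layer followed by a linear output layer."""
    hidden = np.tanh(rgb @ params["W1"] + params["b1"])
    return hidden @ params["W2"] + params["b2"], hidden


def mse(params, rgb_in, rgb_target):
    """Mean squared error between transferred and target colors."""
    pred, _ = forward(params, rgb_in)
    return float(np.mean((pred - rgb_target) ** 2))


def train(params, rgb_in, rgb_target, lr=0.05, epochs=2000):
    """Batch gradient descent on the MSE (a stand-in for Levenberg-Marquardt)."""
    n = len(rgb_in)
    for _ in range(epochs):
        pred, hidden = forward(params, rgb_in)
        grad_out = 2.0 * (pred - rgb_target) / (n * 3)       # dMSE / dpred
        grad_hidden = (grad_out @ params["W2"].T) * (1.0 - hidden ** 2)
        params["W2"] -= lr * hidden.T @ grad_out
        params["b2"] -= lr * grad_out.sum(axis=0)
        params["W1"] -= lr * rgb_in.T @ grad_hidden
        params["b1"] -= lr * grad_hidden.sum(axis=0)
    return params
```

In use, `rgb_in` would hold the smartphone colors and `rgb_target` the JAI colors of the same squares, one row per sample; after training, `forward(params, pixels)[0]` maps an N×3 array of smartphone pixels into the JAI color style.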

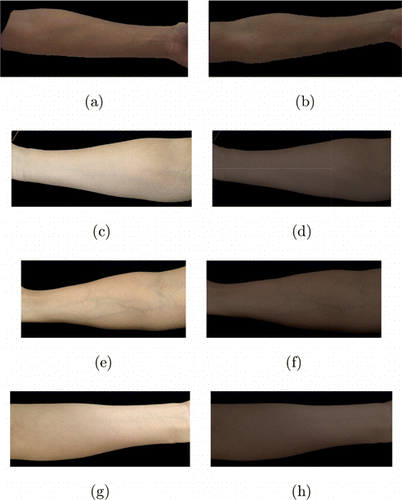

The color transfer model is applied to the skin images taken by Huawei P20. Figure 5 shows the transferred results. Figures 5(a) and 5(b) are images taken by the JAI camera. Figures 5(c), 5(e) and 5(g) are three images taken by Huawei P20. Figures 5(d), 5(f) and 5(h) are their corresponding transferred results. It can be seen that the color style has been changed to that of JAI images.

Fig. 5. Results of the color transfer scheme. (a,b) are images taken by the JAI camera; (c), (e) and (g) are three images taken by Huawei P20; (d), (f) and (h) are their corresponding transferred results.

4. Experimental Results

Three datasets were employed to evaluate the performance of the proposed method: the JAI image dataset, the DSLR image dataset, and the smartphone image dataset. The first dataset was taken by the JAI camera, i.e., with the same camera as the training samples. In addition, each RGB skin image had a synchronous NIR image. The second dataset was taken by a DSLR camera, a Canon 500D. The JAI and DSLR image datasets were constructed at the same time. Specifically, for each arm of 250 persons, a pair of synchronized RGB/NIR images was taken by the JAI camera and an RGB image was taken by the DSLR camera. The third dataset was taken by a smartphone, a Huawei P20, from the arms of 20 students randomly chosen on campus. We used this dataset to evaluate the color transfer scheme. Qualitative evaluation was carried out on all three datasets. However, since only the images in the first dataset had synchronized NIR images, quantitative evaluation was carried out only on this dataset.

We implemented our model on a workstation with an Intel Xeon E5-2690 CPU, 32 GB RAM, an NVIDIA Quadro M6000 24 GB GPU, and Ubuntu 14.04. Caffe7 was used to implement the training and testing. We compared the proposed method with four state-of-the-art methods for vein visualization29: Tang et al.’s Optical method,22 Song’s Wiener method,23 Watanabe’s method,24 and the GAN model.25

4.1. Subjective evaluation

4.1.1. JAI image dataset

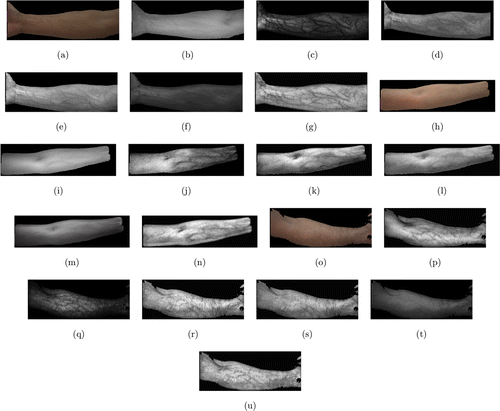

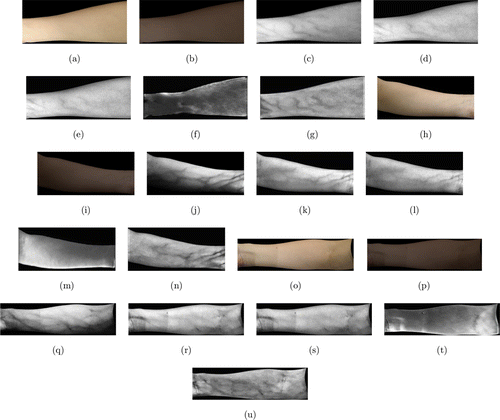

For the JAI image dataset, some experimental results are shown in Fig. 6. Figure 6(a) is a color skin image of a right inner forearm. Figure 6(b) is its corresponding NIR image. Figures 6(c)–6(f) are the visualization results from the Optical method, the Wiener method, Watanabe’s method, and the GAN model, respectively. Figure 6(g) is the result from the proposed method. It can be seen that the Optical method and the Wiener method can visualize veins, but their results are affected by the shadows on the skin. They are quite sensitive to the lighting environment. In addition, the result from the Optical method contains considerable noise. Watanabe’s method cannot obtain satisfactory results in some skin areas. The result from the GAN model is blurred and veins cannot be detected clearly. The proposed method achieves a good visualization result. Figures 6(h)–6(u) show another two sets of results. Figure 6(o) shows an inner forearm with quite a lot of hair on the skin. The proposed method still produces a better visualization result than the other four methods, as shown in Fig. 6(u).

Fig. 6. Subjective evaluation on the JAI image dataset. (a) is a color skin image of an inner forearm; (b) is its corresponding NIR image; (c)–(g) are the visualization results from the Optical method, the Wiener method, Watanabe’s method, the GAN model, and the proposed method, respectively; (h)–(u) show another two sets of results.

4.1.2. DSLR image dataset

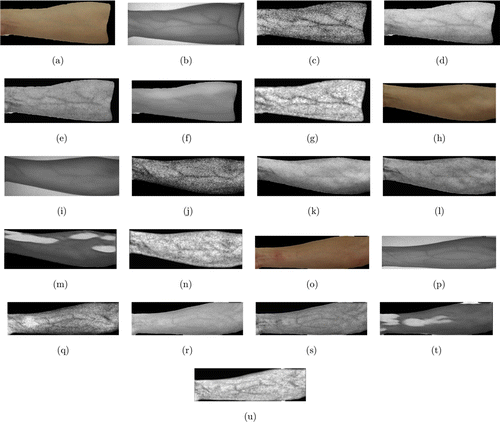

For the DSLR image dataset, some experimental results are shown in Fig. 7. Figure 7(a) is a color skin image of a right inner forearm taken by a Canon 500D camera. Since it is not a synchronous camera, the NIR image of the corresponding body part taken by the JAI camera is shown in Fig. 7(b) for performance evaluation. Figures 7(c)–7(f) are the visualization results from the Optical method, the Wiener method, Watanabe’s method, and the GAN model, respectively. Figure 7(g) is the result from the proposed method. Figures 7(h)–7(u) show another two sets of results. The images in this dataset were not taken with the same camera as the training images, so it is more difficult to visualize veins from them. It can be seen that the results from the Optical method are still very noisy. Watanabe’s method and the Wiener method can detect veins, but their performance is not as good as that of the proposed method. The GAN model gave wrong results in Figs. 7(m) and 7(f).

Fig. 7. Subjective evaluation on the DSLR image dataset. (a) is a color skin image of a right inner forearm; (b) is its corresponding NIR image; (c–g) are the visualization results from the Optical method, the Wiener method, Watanabe’s method, the GAN model, and the proposed method, respectively; (h–u) show another two sets of results.

4.1.3. Smartphone image dataset

Some typical experimental results from the smartphone image dataset are shown in Fig. 8. Figure 8(a) is a color skin image of a right inner forearm taken by a Huawei P20 smartphone camera. Since the images were captured randomly from campus, no NIR images were available. The color transfer scheme proposed in Sec. 3.4 was adopted in this experiment. Figure 8(b) is the resultant image transferred from Fig. 8(a). Figures 8(c)–8(f) are the visualization results from the Optical method, the Wiener method, Watanabe’s method, and the GAN model, respectively. Figure 8(g) is the result from the proposed method.

Fig. 8. Subjective evaluation on the smartphone image dataset. (a) is a color skin image of a left inner forearm; (b) is the color-transferred image obtained from (a); (c–g) are the visualization results from the Optical method, the Wiener method, Watanabe’s method, the GAN model, and the proposed method, respectively; (h–u) show another two sets of results.

Figures 8(h)–8(u) show another two sets of results. The arm in Fig. 8(h) has an obvious posing angle, which produces a shadow near the lower boundary of the arm. The Optical method was severely affected by the shadow, so the veins in the lower part of the arm could not be visualized. The Wiener and Watanabe’s methods were less affected, but the clarity of the visualized veins was not as good as in the result from the proposed method. Figure 8(o) also has a shadow problem. Unlike in the previous example, the shadow came from the lighting environment rather than from the posing angle. The Optical method, the Wiener method and Watanabe’s method were all affected by the problem, which can be seen in the rectangular area in the resultant images. In contrast, the proposed method is robust to this phenomenon. Veins can be clearly detected in the visualized result, as shown in Fig. 8(u).

4.2. Objective evaluation

Numerical measures were adopted to evaluate the proposed vein visualization algorithm quantitatively and compare it with the state-of-the-art methods. As mentioned in Sec. 3.1, we applied a filter bank composed of the real parts of 16 Gabor filters to the resultant images and NIR images to locate veins. The resulting maps were then enhanced and binarized using Otsu’s method, yielding the veins. An example is given in Fig. 9. Figures 9(a) and 9(b) are a pair of RGB/NIR arm images. Figure 9(c) is the vein image obtained from Fig. 9(b). Figures 9(d)–9(h) are the vein images obtained from the visualization results of the Optical method, the Wiener method, Watanabe’s method, the GAN model, and the proposed method, respectively. It can be seen that, with the NIR image as a benchmark, the veins obtained by the proposed method are more complete and less noisy.

Fig. 9. Extracting veins from NIR images. (a) and (b) are a pair of RGB/NIR arm images; (c) is the vein image obtained from (b); (d–h) are the vein images obtained from the visualization results of the Optical method, the Wiener method, Watanabe’s method, the GAN model, and the proposed method, respectively.

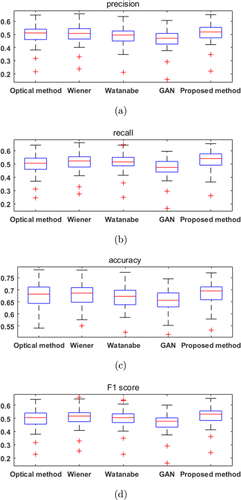

Of the three image datasets, only the JAI dataset has synchronous RGB/NIR images, so the objective evaluation was performed on this dataset. With the NIR images as ground truth, four metrics which are commonly used in the field of pattern recognition were calculated: Accuracy, Precision, Recall and F1 score. The box plots of the five methods are shown in Fig. 10. The mean values of the five methods are shown in Table 2. The highest mean values are highlighted. It can be seen that the proposed method has the highest mean values for all four metrics.

| Metrics | Optical method | Wiener method | Watanabe’s method | GAN model | Proposed method |
|---|---|---|---|---|---|
| Precision | 0.5001 | 0.5055 | 0.4903 | 0.4669 | 0.5129 |
| Recall | 0.4982 | 0.5168 | 0.5120 | 0.4673 | 0.5307 |
| Accuracy | 0.6771 | 0.6809 | 0.6706 | 0.6561 | 0.6860 |
| F1 score | 0.4987 | 0.5107 | 0.5005 | 0.4666 | 0.5207 |

Fig. 10. Boxplots of Precision (a), Recall (b), Accuracy (c), and F1 score (d).
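The four metrics can be computed from a pair of binarized vein images as in the following sketch, which uses their standard pixel-wise definitions. It assumes both images are boolean masks of equal shape with vein pixels marked True; the function name `binary_metrics` and the convention of returning 0 for an undefined ratio are illustrative choices.

```python
import numpy as np


def binary_metrics(pred, truth):
    """Pixel-wise Precision, Recall, Accuracy and F1 score of a vein mask.

    pred:  binarized veins from a visualization result
    truth: binarized veins from the NIR image (ground truth)
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))     # vein pixels correctly detected
    fp = int(np.sum(pred & ~truth))    # skin pixels marked as vein
    fn = int(np.sum(~pred & truth))    # vein pixels missed
    tn = int(np.sum(~pred & ~truth))   # skin pixels correctly rejected
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall,
            "accuracy": accuracy, "f1": f1}
```

Averaging these per-image values over a dataset gives mean values of the kind reported in the table above.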

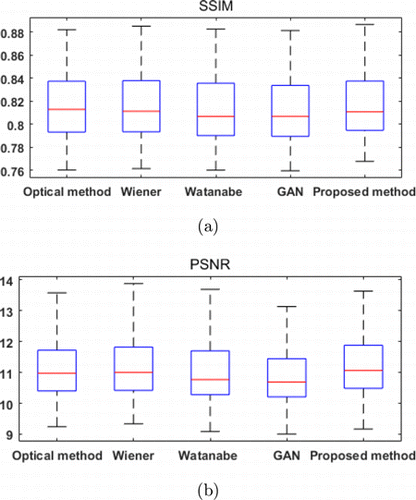

In the proposed method, the NIR images were used as targets to train the CNN, and the Euclidean distance between the NIR and resultant images was utilized as the loss function. Therefore, as an objective evaluation, we calculated the SSIM and PSNR between the NIR images and the visualization results. The box plots of the five methods are shown in Fig. 11. The mean values of the five methods are shown in Table 3. It can be seen that the proposed method has the highest mean values for both SSIM and PSNR. The experimental results show that the visualized veins produced by the proposed method are the most similar to NIR images.

| Metrics | Optical method | Wiener method | Watanabe’s method | GAN model | Proposed method |
|---|---|---|---|---|---|
| SSIM | 0.8173 | 0.8178 | 0.8143 | 0.8131 | 0.8181 |
| PSNR | 11.1271 | 11.1681 | 11.0265 | 10.8321 | 11.2327 |

Fig. 11. Boxplots of SSIM (a) and PSNR (b).
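The two similarity measures can be sketched as follows. PSNR follows its standard definition. The SSIM shown here is a simplified single-window variant computed from global image statistics, whereas the usual SSIM averages the same expression over local windows; the window settings used in the experiments are not stated, so this is an illustration under that assumption rather than a reproduction. The 8-bit peak value of 255 and the constants 0.01 and 0.03 are the conventional defaults.

```python
import numpy as np


def psnr(reference, result, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=float)
    result = np.asarray(result, dtype=float)
    mse = np.mean((reference - result) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


def global_ssim(reference, result, peak=255.0):
    """SSIM evaluated once over the whole image (simplified, no local windows)."""
    x = np.asarray(reference, dtype=float)
    y = np.asarray(result, dtype=float)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x = np.mean((x - mu_x) ** 2)
    var_y = np.mean((y - mu_y) ** 2)
    cov = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

Both functions take the NIR image as `reference` and a visualization result as `result`; higher values indicate a result closer to the NIR image.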

5. Conclusion

Deep learning has seen explosive popularity due to its great success in object recognition and image classification. It has also found many applications in image denoising and super-resolution. In this paper, we proposed a specific deep learning method to visualize veins from color skin images for intravenous cannulation. A convolutional neural network model was designed to implement the mapping from RGB images to NIR images, where veins can be observed. A color transfer scheme was proposed to adapt the method to images taken by smartphones. Objective and subjective evaluations on three image datasets show the effectiveness of the proposed method. The experiments on the smartphone image dataset further demonstrated the performance of the color transfer scheme. In the future, we will develop a smartphone app to implement our method, which will benefit medical staff in daily intravenous cannulation.

Conflict of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

Our thanks to Associate Professor Adams Kong Wai-kin, and his Ph.D. student, Xu Xingpeng, from Nanyang Technological University, Singapore for their support to the work. This work was supported by “the Fundamental Research Funds for the Central Universities, No. NS2019016”.