Automatic segmentation of foveal avascular zone based on adaptive watershed algorithm in retinal optical coherence tomography angiography images

Abstract

The size and shape of the foveal avascular zone (FAZ) have a strong positive correlation with several vision-threatening retinovascular diseases. The identification, segmentation and analysis of FAZ are of great significance to clinical diagnosis and treatment. We presented an adaptive watershed algorithm to automatically extract FAZ from retinal optical coherence tomography angiography (OCTA) images. For the traditional watershed algorithm, “over-segmentation” is the most common problem. FAZ is often incorrectly divided into multiple regions by redundant “dams”. This paper analyzed the relationship between the “dams” length and the maximum inscribed circle radius of FAZ, and proposed an adaptive watershed algorithm to solve the problem of “over-segmentation”. Here, 132 healthy retinal images and 50 diabetic retinopathy (DR) images were used to verify the accuracy and stability of the algorithm. Three ophthalmologists were invited to make quantitative and qualitative evaluations on the segmentation results of this algorithm. The quantitative evaluation results show that the correlation coefficients between the automatic and manual segmentation results are 0.945 (in healthy subjects) and 0.927 (in DR patients), respectively. For qualitative evaluation, the percentages of “perfect segmentation” (score of 3) and “good segmentation” (score of 2) are 99.4% (in healthy subjects) and 98.7% (in DR patients), respectively. This work promotes the application of watershed algorithm in FAZ segmentation, making it a useful tool for analyzing and diagnosing eye diseases.

1. Introduction

Foveal avascular zone (FAZ) is a circular capillary-free region surrounded by retinal vascular beds.1 The FAZ state reflects the condition of the macular microcapillary circulation.2 Studies have shown that the size and shape of the FAZ have a strong positive correlation with several vision-threatening retinovascular diseases, such as diabetic retinopathy (DR), retinal vein occlusion (RVO) and macular telangiectasia.3,4 Therefore, the identification, segmentation and analysis of FAZ are of great significance for clinical diagnosis and treatment.5

With the development of ophthalmic imaging technology, quantitative research on FAZ has become more and more extensive. Among these technologies, the optical coherence tomography angiography (OCTA) technology occupies an absolute advantage in FAZ detection and analysis due to its advantages such as no need for injection dyes usage, high resolution and three-dimensional (3D) imaging.6,7,8,9,10,11 Although the FAZ detection software provided by some commercial OCTA systems have achieved high repeatability,12,13 some studies have found that there are significant differences between the results from commercial software and the manually outlined ones,7,14 and the difference will become even greater when dealing with the retinal images with some retinovascular diseases. The reason is that the FAZ boundaries of the diseased retinal images are so irregular that they often lead to false or missed detection. In addition, some patients sometimes require long-term follow-up. In this process, different brands or models of test equipment may be selected. However, the analysis methods and evaluation criteria used by different commercial systems may be different, resulting in the lack of consistency in the quantitative parameters and making it difficult to compare.15,16

In recent years, machine learning methods developed rapidly and have been widely used in the field of medical image segmentation. Chan et al.17 proposed an automated method based on deep neural networks (DNNs) to quantify the FAZ parameters and perifoveal capillary density of OCTA images in healthy eyes and DR eyes. The accuracy rate is 80–83%. Al-Bander et al.18 used convolutional neural networks (CNNs) to identify the optic disc and FAZ, but it is difficult to fine-tune the optimal architecture and training hyper-parameters. Moreover, the huge amount of labeled datasets used in machine learning was hard to obtain. Relying solely on manual segmentation will make this task extremely difficult. Conventional image segmentation methods can provide a large amount of label data for machine learning under human supervision and adjustment, therefore it still has a certain practical value.

Among the conventional image segmentation methods, the region growing algorithm has been commonly used. Díaz et al. used region growing algorithm to extract the FAZ in OCTA images.5 Lu et al.20 first used the region growing algorithm to extract the initial FAZ, and then applied the morphological operator and the Snake model to obtain the final FAZ segmentation.19 However, affected by the quality of the OCTA image, the capillaries near the FAZ are often discontinuous. Some vascular gaps may appear which lead to the over-growth of the target region. Therefore, the resulting area of FAZ is often larger than it should be.

In contrast, the active contour model, also known as the Snake model, has been adopted by more scholars due to its higher accuracy and stability.20 The Snake model was proposed by Kass et al. in 1987.21 This method is an iterative algorithm, and the initial contour needs to be defined in advance. The contour shape is controlled by an energy function, and constantly deformed under the combined action of internal energy and external energy. When the energy converges to a minimum, the target edge is obtained.22 However, the early Snake model is a semi-automatic algorithm that requires manual outline of the initial contour, which is time-consuming, laborious and lacks objectivity. Additionally, the traditional Snake model can converge to an accurate position only when the initial contour is set near the target boundary. Thus, scholars successively proposed a series of optimized methods to improve the accuracy, such as gradient vector flow (GVF) Snake, generalized gradient vector flow (GGVF). In order to realize the automatic segmentation of FAZ, scholars combined the Snake model with other segmentation methods, such as morphological methods. Other methods are first used to identify the initial contour of FAZ, and then the Snake model is followed to extract the accurate FAZ.20

Watershed segmentation23 is a kind of morphological algorithm. It regards the signal strength of an image as the terrain height. If the water flows upward from the lowest point, multiple “reservoirs” will appear and merge gradually. In order to prevent the “reservoirs” from converging, “dams” are set to separate the different “reservoirs” at the location where they are about to converge. Finally, the image is divided into several regions. In view of the fact that FAZ is a uniform low-signal zone, it can be regarded as a “reservoir”. Therefore, the watershed algorithm can be applied to the FAZ segmentation.

Qureshi et al.25 extracted the FAZ using an efficient algorithm based on intensity, temporal arcade, watershed and morphological operators.24 Silva et al. used morphological methods to locate the initial region, and then used the watershed algorithm to extract the FAZ.25 However, as the most common problem of the traditional watershed algorithm, the problem of “over-segmentation” has not been well solved. For this reason, the FAZ is often incorrectly divided into multiple regions by redundant “dams”. The “regional merging” of the segmentation results is an effective method to solve over-segmentation. How to automatically determine the regions to be merged is a key issue.

In this paper, we proposed an adaptive watershed algorithm to automatically extract FAZ from the OCTA images. First, the original image is denoised, and then the region growing algorithm is used to extract the initial FAZ from the denoised image. This initial region is often much larger than the actual FAZ size. Next, the distance transformation is applied to the initial region to obtain a topographic map; the watershed algorithm is used to divide the topographic map into several regions. Finally, the length of the dam between the various reservoirs is detected. If the length of the dam is greater than a certain threshold, the reservoirs on both sides of the dam will be merged. The threshold is adjusted adaptively according to the maximum inscribed circle radius of FAZ. Quantitative and qualitative methods are used to verify the accuracy and stability of the algorithm.

2. Methods

2.1. Subjects and data acquisition

In this study, we recruited 58 subjects, consisting of 33 healthy controls and 25 DR patients. For each healthy subject, the images of the left and right eyes were collected, and for the DR patients, the images of the diseased eye on one side were collected. If both eyes meet the selected standard, the left eye data should be collected. All subjects were recruited from the First Hospital of Qinhuangdao. The study was performed in accordance with the tenets of the Declaration of Helsinki. Informed consents were obtained from all participants. Inclusion criteria were as follows: (1) Best corrected visual acuity of the participants should be 20/20 or higher and they must be over 18 years old, healthy or with type-2 DR confirmed by the diabetologist; (2) participants must have no history of ocular disease or significant media opacity, no previous eye intraocular treatment (laser, intravitreal injections, cataract surgery and vitreoretinal surgery, refractive error < 6 diopters; and (3) participants must have no ischemic heart disease or neurodegenerative disease, be without macular edema or other ocular diseases.

The retinal OCT images used in this study were obtained from a commercial SPECTRALIS OCT system (based on SPECTRALIS OCT2; Heidelberg Engineering, Heidelberg, Germany). This device operates at 85-kHz A-scan rate, with a central wavelength of 870nm and a bandwidth of 50nm, providing 3.9-μμm axial and 6-μμm lateral resolutions. The ocular light power exposure was within the American National Standards Institute safety limit. Each B-scan datum was composed of 512 A-scans, and each volume scan consists of 512 B-scans. The area covered by the entire 3D data is 3 × 3mm2.

2.2. Adaptive watershed algorithm

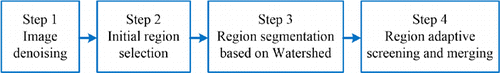

The adaptive watershed algorithm proposed in this paper is divided into four parts: (1) image denoising, (2) initial region selection, (3) region segmentation based on watershed and (4) region adaptive screening and merging, as shown in Fig. 1.

Fig. 1. Schematic of optimized watershed algorithm.

(1) Image denoising

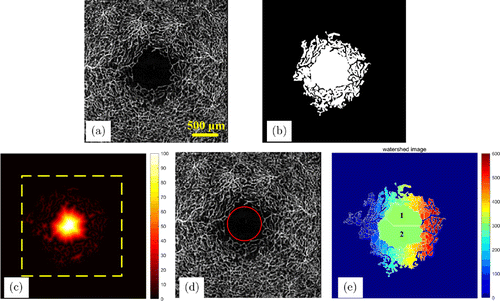

OCTA images are usually noisy, so there may be some bright spots in the FAZ that should not have any blood flow signals. In this case, there may be some loopholes in the initial region detected by the region growing algorithm. Therefore, denoising is needed before the image segmentation. This paper uses the 5 × 5 median filters to weaken the effect of speckle noise, and then uses the 5 × 5 Gaussian filter to smooth the images. The preprocessing can make the signal intensity in the FAZ to be consistent, which is convenient for subsequent processing. Figure 2(a) shows the filtered retinal blood vessel map.

Fig. 2. (a) The filtered retinal blood vessels map. (b) Initial FAZ. (c) Topographic map obtained by distance transformation. (d) Schematic diagram of the maximum inscribed circle of FAZ. Its radius is the maximum value in panel (c), and its center coordinate is the coordinate of the maximum value point. (e) Region segmentation result based on watershed.

(2) Initial region selection

The region growing algorithm is applied to obtain the initial FAZ. The algorithm operates as follows: First, one pixel in FAZ was selected automatically as the initial seed pixel. The automatic selection method is described in detail in Sec. 2.3. After the initial seed pixel was specified, a growth criterion was used between the value of a candidate pixel and the average value of the pixels in target region. In the traditional region growing algorithm, the growth criterion is described as

(3) Region segmentation based on watershed

First, a distance transformation operation is performed on Fig. 2(b). The signal intensity of each point in the image is converted into the distance from that point to the nearest blood vessel pixel, as shown in Fig. 2(c). Obviously, the distance between the pixel in the center of FAZ and the peripheral blood vessel pixels is the largest. The maximum value in FAZ is the maximum inscribed circle radius.25 Figure 2(d) shows a schematic diagram of the maximum inscribed circle. The transformed image is treated as a topographic map, and each pixel value is regarded as the height. We multiplied the entire image pixels by “−1−1”, the FAZ becomes a reservoir and then the FAZ was segmented by the watershed algorithm. The segmentation result is shown in Fig. 2(e).

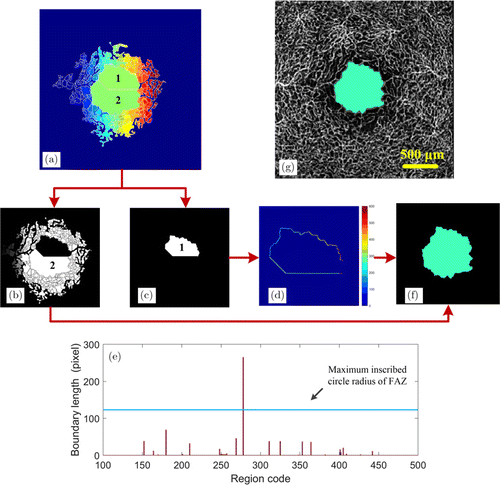

(4) Region adaptive screening and merging

As can be seen from Fig. 2(d), the watershed algorithm divides the topographic map into several regions, each region is assigned a label and the different regions are separated by “dams”. If the FAZ is dumbbell-shaped, i.e., wide on both sides and narrow in the middle, the watershed algorithm may divide it into two or more regions, as shown in Fig. 2(d). This is caused by the over-segmentation of the watershed algorithm. In order to obtain the FAZ accurately, the over-segmentation problem of the watershed algorithm must be solved.

After research, we found that when FAZ is divided into multiple regions, the length of the “dams” between these regions is much greater than that of the gaps between blood vessels. Therefore, by detecting the length of the “dams” between different regions and merging the regions whose dam length is greater than a certain threshold, the over-segmentation problem can be solved. As shown in Fig. 3(a), the FAZ is divided into two regions (regions 1 and 2). First, we define the region where the seed point is located as the target region [Fig. 3(c)], and other regions as candidate regions [Fig. 3(b)]. The “dams” between the target region and the surrounding regions are extracted, and each “dam” is assigned the same code as the corresponding candidate region. In order to make the “dam” clearly visible, we amplified the image, as shown in Fig. 3(d). The length of each “dam” is calculated and compared with a threshold. The threshold is automatically generated based on the maximum inscribed circle radius of FAZ. The maximum value point in the distance transformation image [Fig. 2(c)] has the largest distance to the peripheral blood vessel pixels, and this distance roughly reflects the maximum inscribed circle radius of FAZ. Figure 3(e) shows the relationship between the length of the dam around the target region and the radius of the maximum inscribed circle of FAZ. The abscissa represents the coding of the candidate region, and the ordinate represents the length (number of pixels) of the dam between the candidate region and the target region. Blue horizontal line indicates the maximum inscribed circle radius of FAZ. The regions where the length of the dam is greater than the maximum inscribed circle radius will be merged. Then, the “dam” length around the new target region is checked again until there are no eligible regions that can be merged. Finally, the obtained FAZ [Fig. 3(f)] is superimposed on the blood vessel map, as shown in Fig. 3(g).

Fig. 3. Region adaptive screening and merging. (a) Region segmentation result based on watershed. (b) The candidate regions. (c) The target region where the initial seed point is located. (d) “Dams” between the target region and the surrounding regions. (e) The relationship between the length of the dam around the target region and the maximum inscribed circle radius of FAZ. Blue horizontal line indicates the maximum inscribed circle radius of FAZ. (f) The final FAZ. (g) Fusion of FAZ and blood vessel images.

2.3. Automatic selection of seed point

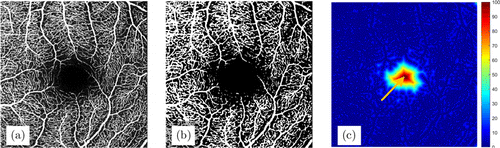

In order to realize the fully automatic operation of the algorithm, we used an automatic seed point selection method in the region growing section (Fig. 4). First, the Otsu algorithm was used to binarize the original image. The OCTA image is roughly divided into two parts, the blood vessel and the background, and the FAZ belongs to the background part. Perform distance transformation on the binary image. Obviously, the pixel in the center of the FAZ has the farthest distance from the blood vessel pixel. Therefore, this point is selected as the seed point for region growth.

Fig. 4. Automatic selection of seed point. (a) Original OCTA image. (b) The binary vascular image obtained by Otsu algorithm. (c) The distance image obtained by distance transformation. The orange arrow refers to the maximum point in the distance image, which will be selected as the seed point.

As can be seen from Fig. 4(b), the blood vessels extracted using the Otsu algorithm are not accurate. Part of the capillaries around FAZ are not extracted due to the low signal value. Therefore, this method can only be used for the selection of seed point, and cannot be used for the extraction of FAZ.

2.4. Algorithm running speed improvement

On the other hand, in order to increase the running speed, we changed the termination conditions of the region growing algorithm. This is because the initial region obtained by the region growing algorithm only needs to contain the complete FAZ, there is no need for detecting all the candidate pixels. Therefore, the termination condition of the region growing algorithm is set as the number of candidate pixels grown at each step being less than 50. Of course, the algorithm is allowed to run for 10 steps so that the number is greater than 50. This will not affect the final extraction accuracy of FAZ but can greatly improve the operating speed.

3. Results

3.1. FAZ segmentation results

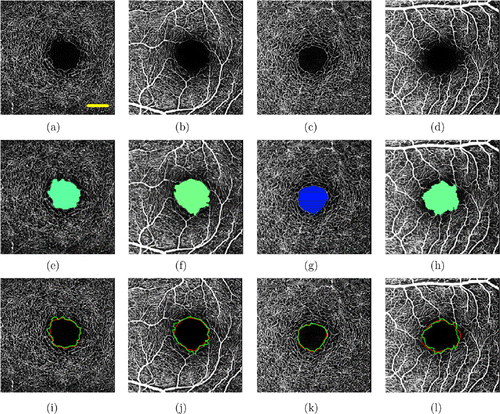

The retina contains two main capillary plexuses: superficial capillary plexus resides in the nerve fiber layer or ganglion cell layer; and deep capillary plexus is located in the inner nuclear layer. We reconstructed two images of the superficial capillary and the deep capillary from each group of fundus OCT data. Therefore, we have a total of 132 healthy retinal vascular images and 50 DR retinal vascular images. Among the healthy retinal images, 22 low-quality images were screened into another group. Figure 5 shows the retinal blood vessels and the FAZ segmentation results in healthy subjects. Figures 5(a) and 5(c) denote deep vascular plexus; Figs. 5(b) and 5(d) denote superficial vascular plexus. The yellow solid line is a scale, the length represents 0.5mm. Figures 5(e)–5(h) are the corresponding FAZ segmentation results. Three ophthalmologists were invited to manually segment all images using MATLAB software, and the segmentation results were compared with the automatic segmentation results. Figures 5(i)–5(l) show the comparison results in healthy subjects. The green curves indicate the automatic segmentation results, and the red curves are the results of manual segmentation (one of the three ophthalmologists).

Fig. 5. The retinal blood vessels and the FAZ segmentation results in healthy subjects. Panels (a) and (c) denote deep vascular plexus, while panels (b) and (d) denote superficial vascular plexus. Panels (e)–(h) are the corresponding FAZ segmentation results. Panels (i)–(l) show the comparison of results between automatic and manual segmentation methods in healthy subjects. The green curves are the automatic segmentation results, and the red curves are the manual segmentation results.

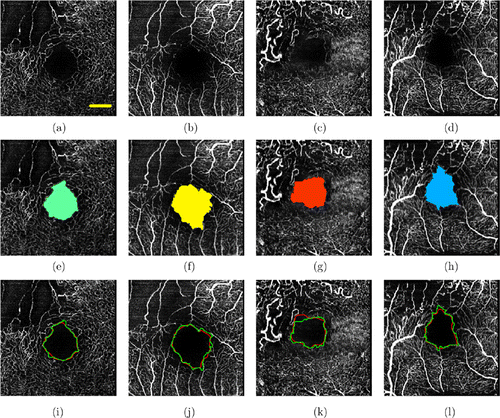

Figure 6 shows the retinal vascular plexus [panels (a)–(d)] and the corresponding FAZ segmentation results [panels (e)–(h)] in DR patients. It can be seen that compared with healthy retinal blood vessel images, the FAZ is significantly enlarged, the shape becomes irregular and the border blood vessels are severely missing. The automatic segmentation result and the manual segmentation result are compared, and the comparison results are shown in Figs. 6(i)–6(l).

Fig. 6. Retinal vascular network and the corresponding FAZ segmentation results in DR patients. Panels (a)–(d) show the original OCTA images. Panels (e)–(h) are the corresponding FAZ segmentation results. Panels (i)–(l) show the comparison of results between automatic and manual segmentation methods in DR patients.

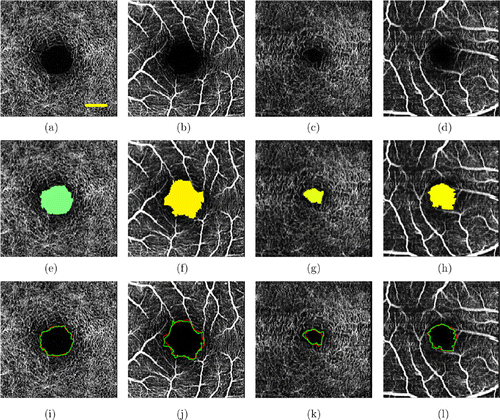

Since the imaging principle of OCTA is based on motion contrast, minor eye movements will have a great impact on the OCTA results. In addition, the opacity of the vitreous body is also the reason for the poor image quality of OCTA. In order to verify the anti-interference ability of the proposed algorithm, we specifically screened a set of low-quality retinal blood vessel images (n = 22) from healthy retina images for testing. Low-quality images are defined as vascular blurred images due to eye motion or vitreous turbidity. Figure 7 shows the FAZ segmentation results of low-quality retinal blood vessel images and the comparison results of automatic and manual segmentation methods on low-quality retinal images.

Fig. 7. FAZ segmentation results of low-quality retinal vascular images. Panels (a)–(d) show the original OCTA images. Panels (e)–(h) are the corresponding FAZ segmentation results. Panels (i)–(l) show the comparison of results between automatic and manual segmentation methods on low-quality retinal vascular images.

3.2. Quantitative evaluation

Quantitative evaluation is a common method to verify the accuracy of an algorithm. In this paper, we invited three experienced ophthalmologists to manually outline the FAZ. The correlation coefficient between the manual segmentation result and the result of the proposed algorithm is calculated and counted. The statistical results are expressed as mean ± standard deviation (SD). All the above specialists were senior ophthalmologists from the First Hospital of Qinhuangdao City.

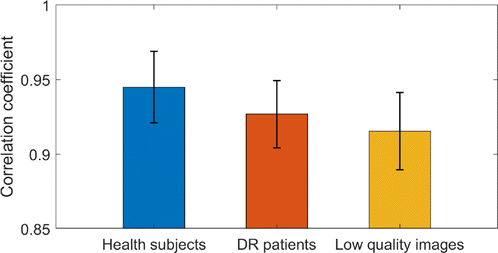

Table 1 shows the correlation coefficient statistics between the results of the proposed algorithm and those of manual segmentation by the three experienced ophthalmologists, including the retinal vascular images of healthy subjects and DR patients as well as the low-quality retinal vascular images. Figure 8 shows a histogram of the average correlation coefficients and standard deviations of the three types of blood vessel images.

| Subject | Specialist 1 | Specialist 2 | Specialist 3 |

|---|---|---|---|

| Healthy retinal images | 0.948 ± 0.017 | 0.946 ± 0.024 | 0.941 ± 0.033 |

| DR retinal images | 0.930 ± 0.024 | 0.926 ± 0.026 | 0.925 ± 0.021 |

| Low-quality images | 0.904 ± 0.037 | 0.920 ± 0.031 | 0.925 ± 0.022 |

Fig. 8. Histogram of the average correlation coefficients and standard deviations of the three types of blood vessel images.

From the statistical results, it can be seen that the method proposed in this paper maintains high segmentation accuracy for any kind of blood vessel images, and the average correlation coefficient for the artificial segmentation results is above 0.9. Whereas, the average correlation coefficients for healthy subjects and DR patients are 0.945 and 0.927, respectively. The average correlation coefficients for healthy subjects and DR patients obtained in the literature5 are 9.3 and 0.88, respectively. It can be seen that the performance of the proposed algorithm is higher than that in literature5 for both healthy subjects and DR patients. On the other hand, the average correlation coefficient for low-quality images is 0.915. There are no other reports on the FAZ extraction for low-quality images.

3.3. Qualitative evaluation

The correlation coefficient between automatic segmentation and manual segmentation can only evaluate the difference between the two methods, and cannot truly reflect which method is more accurate, because manual segmentation is not a gold standard either. So, the qualitative evaluation can be used as a supplement to determine whether an algorithm is accurate.

The qualitative evaluation is achieved by inviting specialists (n = 3) to score the automatic segmentation results, with a score ranging from 0 point to 3 points. A score of 3 means a perfect or nearly perfect segmentation; a score of 2 represents a good segmentation, possibly needing minor corrections; a score of 1 indicates the segmentation results have major problems; and a score of 0 shows the segmentation result is totally unacceptable. All specialists who received the invitation were senior ophthalmologists from the First Hospital of Qinhuangdao City. Table 2 shows the scores of three specialists on different types of image segmentation results. The values in the table indicate the numbers of images with different scores. Among them, the healthy retinal images, DR retinal images and low-quality images that received 3 points were 308, 135 and 55, accounting for 93.3%, 90% and 83.3%, respectively. The numbers of images that scored 2 points were 20, 13 and 8, accounting for 6.1%, 8.7% and 12.1%, respectively. The numbers of images that scored 1 point were 2, 2 and 3, and the proportions were 0.6%, 1.3% and 4.5%, respectively. There are no images with 0 point.

| Numbers of images with different scores | ||||

|---|---|---|---|---|

| Subject | Score 3 | Score 2 | Score 1 | Score 0 |

| Healthy retinal images(110 images × three specialists) | 308 | 20 | 2 | 0 |

| DR retinal images(50 images × three specialists) | 135 | 13 | 2 | 0 |

| Low-quality images(22 images × three specialists) | 55 | 8 | 3 | 0 |

4. Discussion

The watershed algorithm is widely used in the field of image processing because of its ability to obtain continuously close target boundaries, fast calculation speed and accurate positioning. Watershed segmentation algorithms mainly include the following categories: (1) distance transformation-based watershed algorithm, which is suitable for region segmentation. (2) Markers-based watershed segmentation algorithm. This method starts from the marked point to submerge, which can effectively limit the number of regions. But the markers are difficult to obtain automatically. (3) Gradient-based watershed segmentation algorithm, which is suitable for edge detection. (4) Deep learning-based watershed segmentation algorithm. This method uses machine learning to filter the required segmentation lines in the watershed algorithm to obtain more effective boundary lines and segmentation results. However, a large number of training samples are difficult to obtain in a short time. The method in this paper is an improved watershed algorithm based on distance transformation.

FAZ is a uniform low-signal area and can be seen as a reservoir. Therefore, the watershed algorithm is very suitable for FAZ segmentation. The main reason why it has not been widely reported is that the over-segmentation problem has not been well resolved. The watershed segmentation algorithm is very sensitive to image noise, texture features, external interference, etc., and incorrect local minima may be generated in the flat zone of the image, resulting in an over-segmentation phenomenon.

Extinction value-based watershed algorithm is a hierarchical watershed approach used for solving over-segmentation problems.26 The extinction value corresponds to a geometric measurement of the smallest lake and is used to evaluate the relevance of the merging. After the flooding process is completed, the extinction of small lakes is allowed (the merging is performed), whereas the big lakes are preserved (the merging is not performed).27 In this paper, FAZ is often divided into several lakes with different sizes; it is difficult to judge which lakes need to be merged by the extinction value. Therefore, whether extinction value-based watershed method is suitable for FAZ segmentation remains to be studied.

The size of FAZ varies greatly among healthy subjects or patients. If a fixed “dam” length threshold is used, it is difficult to achieve accurate segmentation of all images. Therefore, we adopted an adaptive threshold. The maximum point of the distance-transformed image reflects the distance from the point to the peripheral blood vessel pixels, which is approximately equal to the maximum inscribed circle radius of the FAZ. If the FAZ is divided into multiple regions, the “dam” often spans the entire FAZ, i.e., its value is approximately equal to the diameter of the FAZ, or between the radius and the diameter of the FAZ. Therefore, we use the maximum value of the distance-transformed image as the dam length threshold, which just meets the demand.

In some DR patients, the blood vessel density of the retina is severely reduced, and even a cavity larger than the area of the FAZ is formed. In this case, the seed point may be positioned incorrectly. Therefore, in the process of automatic seed point positioning, regional restrictions need to be added. By restricting the search area of the seed point to approximately within 1mm2 in the center of the image, accurate results can be obtained.

Although the accuracy of the algorithm in this paper is slightly reduced due to the image quality, it still maintains a very high accuracy overall. It shows that the method in this paper is very resistant to interference. Another advantage of the algorithm in this paper is the fast running speed. As the termination condition of the region growing algorithm has changed, the time consumption reduced significantly. The described method was implemented using MATLAB’s (The MathWorks, Inc.) M-file code. The program runs on a personal computer (64-bit OS, Intel Core i7 CPU at 3.6GHz and 8-GB RAM). It only takes 3s to complete the FAZ segmentation of a blood vessel image (900 × 900). The method in this paper can also be used in combination with other methods, such as the Snake model. Taking the result obtained by the method as the initial region, the Snake model is used for fine segmentation after the contour is extracted. The initial contour obtained by the watershed algorithm segmentation is very close to the real target edge, which can effectively reduce the number of iterations of the Snake model and improve the segmentation accuracy.

In summary, FAZ may be used as a biomarker for retinovascular diseases. The adaptive watershed algorithm proposed in this paper can accurately and stably segment the FAZ from the OCTA retinal blood vessel image, and it is expected to become a useful tool for clinical diagnosis of ophthalmological diseases.

Conflict of Interest

The authors declare no potential conflicts of interest with respect to the research, authorship and/or publication of this paper.

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China (61771119, 61901100 and 62075037) and the Natural Science Foundation of Hebei Province (H2019501010, F2019501132, E2020501029 and F2020501040).