Improving Arabic Sentiment Analysis Using LSTM Based on Word Embedding Models

Abstract

In recent times, the rapid growth of social networks has led online users to freely express their sentiments about many aspects of life. Sentiment Analysis (SA) is one of the main fields of Natural Language Processing (NLP), thanks to its important role in identifying sentiment polarities and supporting decisions based on public opinion. Arabic is one of the most challenging languages for SA because of its many dialects and its morphological and syntactic complexity. Deep Learning (DL) models have shown significant capabilities, especially in SA. In particular, Long Short-Term Memory (LSTM) networks have proven well suited to learning from sequential data. This paper presents a comparative study of the Word2Vec and FastText word embedding models, which are used to build two LSTM-based Arabic SA (ASA) approaches. The experimental results confirm that the LSTM model with FastText can significantly improve Arabic classification accuracy.

1. Introduction

Social networks such as Facebook, Twitter, and Instagram now attract a large share of internet users, who use them to communicate globally and cooperate interactively. On these networks, users can freely express and share their sentiments through various kinds of social data. An enormous amount of data is produced on social networks every day, and it reflects the tendencies of public opinion on trending topics in many fields (such as social subjects, economics, politics, and business).

Social data are fast-evolving, informal, and unstructured. As a result, analyzing and processing them with traditional analysis methods is resource-intensive and time-consuming.

Sentiment Analysis (SA), or opinion mining, attempts to identify the sentiment polarity of a social network user with respect to a certain event or topic. It can be carried out at different levels: “word level”, “sentence level”, “document level”, and “topic level”. In this research work, we rely on sentence-level Arabic SA (ASA) to determine whether the sentiment polarity of users’ tweets is “positive” or “negative”. The complex structure of the Arabic language, with its rich morphological system, varying dialects, scarce resources, and ambiguity, poses various challenges for ASA research.

In recent times, Deep Learning (DL) techniques1 have shown remarkable results and good improvements in the ASA domain.2 DL relies on Neural Network techniques and approaches.3 In general, the multiple processing layers in DL make it possible to process massive amounts of data more effectively.

Our work in this paper complements a series of deep comparative studies and evaluations at several levels:

In the first comparative analysis,4 we discussed the issue of the most useful models of word embedding that are very significant in the ASA domain. We discovered through this evaluation study that “FastText” is among the models that give very good results.

In the second comparative analysis,3 we compared and treated the most valuable Neural Network models that gain very good results in this domain: “Artificial Neural Network (ANN)”, “Convolutional Neural Network (CNN)”, “Recurrent Neural Network (RNN)”, and “Long Short-Term Memory (LSTM)” particularly. We found through this deep evaluation that the Neural Network models, “CNN” and “LSTM” (a kind of RNN), have various advantages in the ASA field.

Based on the previous deep evaluations and studies, we chose the elements that hold significant results in the domain of ASA. That is why the major contribution of our research work is to propose two ASA LSTM-based approaches relying on the Word2Vec and FastText word embedding models.

The points that distinguish our contribution from similar research studies are as follows:

Based on our previous evaluation results confirming that LSTM achieves remarkable results in the domain of ASA, we create two ASA LSTM-based approaches based on Word2Vec and FastText.

The experimental results show that the LSTM with FastText approach can significantly improve the accuracy of Arabic text classification. This confirms the result of our previous comparative evaluation, in which FastText outperformed Word2Vec.

The remainder of the paper is organized as follows: Sec. 2 presents an overview of related work. Section 3 describes the proposed approach and architecture. Section 4 reports the experimental results at two levels: classification using LSTM with Word2Vec and classification using LSTM with FastText. The results are discussed in detail in Sec. 5, and Sec. 6 concludes the paper.

2. Related Work

This section gives an overview of research works directly related to this paper.

The research work6 implements and compares the “GloVe” and “FastText” word embedding models with LSTM in “single-layer”, “double-layer”, and “triple-layer” architectures. The accuracy was compared on an Arabic dataset for SA: the suggested algorithm was evaluated on the “ASAD” dataset of 55,000 annotated tweets in three classes. The dataset was augmented to obtain the same percentage of positive, neutral, and negative classes. In this evaluation, the triple-layer LSTM with FastText word embedding attained the highest testing accuracy: 90.9%.

The approaches proposed in study9 are evaluated on a reference dataset of Arabic hotel reviews. The results confirm that they outperform baseline research on the two tasks, with an improvement of 39% for aspect-OTE extraction and 6% for aspect sentiment polarity classification. The first proposed approach, “Bi-LSTM-CRF”, attained F1 = 66.32% with Word2Vec word embeddings and F1 = 69.98% with FastText character embeddings on the same task. The second approach, “AB-LSTM-PC”, obtained an accuracy of 82.6%.

The authors of Ref. 10 fine-tuned the parameters of Arabic BERT (AraBERT) and applied it to three merged datasets to transfer its knowledge to ASA. In the word embedding step, they compared the AraBERT model with “AraVec” and “FastText” (static pre-trained word embedding methods). In the classification step, they compared the hybrid model with “LSTM”, “Bidirectional LSTM (Bi-LSTM)”, “CNN”, and “GRU”, which are widely used in the SA field. The results confirm that the fine-tuned AraBERT model, integrated with the hybrid network, attained peak performance with up to 94% accuracy.

The goal of study11 is to propose a new methodology for ASA that employs hybrid embedding models to enhance the available pre-trained embedding features. The hybrid embedding models rely on “Part of Speech tagging” and “word2position” vectors over FastText, with various combinations of vectors attached to the pre-trained embedding vectors. The resulting hybrid embedding is fed to their ensemble “Convolutional RNN (CRNN)”. The authors tested the methodology with various ensemble DL models and a standard sentiment dataset, reaching an accuracy value of 90.21 on the “Movie Review (MVR) Dataset V2”. The results confirm that the methodology is effective for SA and can incorporate further linguistic knowledge-based techniques to improve SA results.

The researchers in Ref. 12 propose a combined CNN-LSTM deep Neural Network for performing SA on Twitter datasets. The performance of the proposed model is compared with machine learning classifiers, namely “Random Forest (RF)”, “Support Vector Machine (SVM)”, “Stochastic Gradient Descent (SGD)”, “logistic regression”, and “a Voting Classifier (VC) of RF and SGD”, and with state-of-the-art classifier models. Moreover, Word2Vec and term frequency–inverse document frequency methods are examined to determine their effect on prediction accuracy. Three datasets, “hate speech”, “the reviews of women’s e-commerce clothing”, and “US airline sentiments”, are used to assess the proposed model. The experimental results show that the “CNN-LSTM” ensemble achieves the best accuracy among the compared classifiers.

The major aim of Ref. 7 is to propose a novel “Arabic sentiment analysis based on deep learning” model with a one-layer CNN architecture to extract local features and a two-layer LSTM architecture to maintain “long-term dependencies”. The “feature maps” learned by the CNN and LSTM are passed to an SVM classifier to generate the final classification. This model relies on the FastText word embedding model. The experimental results, obtained on a multi-domain corpus, confirm good classification with an accuracy of 90.75%. Moreover, the model is validated with various classifiers and embedding models. The experimental results confirm that FastText and skip-gram word embedding models with the SVM classifier are the more useful options for ASA.

The research8 adopts deep Neural Network architectures together with word embedding approaches to perform sentiment classification of Arabic texts. The models employed are the bidirectional multi-layer LSTM, the RNN, and the FastText word embedding model. The researchers used several hyperparameters to train each model. Moreover, the Bi-LSTM, a multi-layer Neural Network trained on top of the GloVe word embedding model with 1.5 million words and 1.75 billion tokens, outperformed the other methods.

The model suggested in Ref. 13 performs SA in the Arabic language using a DL approach. It relies on an LSTM, with a word embedding as the first hidden layer for feature extraction. There are numerous strategies for learning word embeddings, including GloVe, Word2Vec, FastText, and the Embedding layer (by Keras). The evaluation results show an accuracy of about 82% with the DL method.

Compared to other approaches in the literature, our work has three goals:

The creation of two ASA LSTM-based approaches based on Word2Vec and FastText.

The comparative evaluation of these two ASA LSTM-based approaches based on Word2Vec and FastText.

Identifying the most significant, easiest to implement, and least costly approach, the “Arabic Sentiment Analysis LSTM-based approach using the FastText word embedding model”, which significantly improves the accuracy of Arabic text classification.

3. Proposed Approach and Architecture

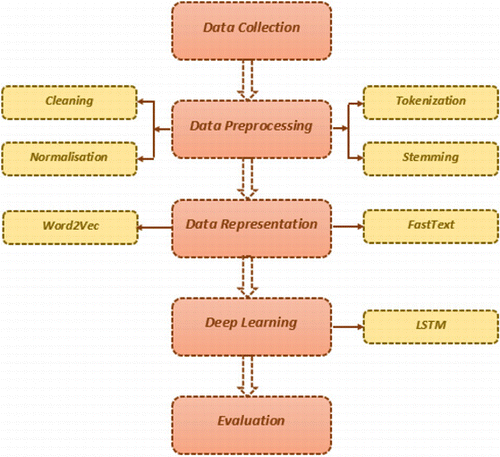

Our proposed methodology and architecture contain five steps for classifying Arabic text relying on the LSTM deep Neural Network algorithm with Word2Vec and FastText word embedding models. The steps to be followed are presented in Fig. 1.

Fig. 1. The proposed methodology and architecture.

Source: Self-made.

3.1. Data collection

This step of SA is considered the most significant: if the collected data is insufficient in quantity or quality, the model performance may suffer. Nowadays, internet users express their opinions on various social media platforms (such as “micro-blogs”, “blogs”, “Twitter”, and “Facebook”). In Arabic, sentiments are often expressed in various dialects rather than in “MSA” (Modern Standard Arabic). Opinions are therefore expressed in many ways, with diverse vocabulary and writing contexts. All these challenges produce disorganized data that is very hard to process. Programming languages (like “Python”, “Java”, and “R”) and various techniques are applied to analyze, understand, and process the collected data.

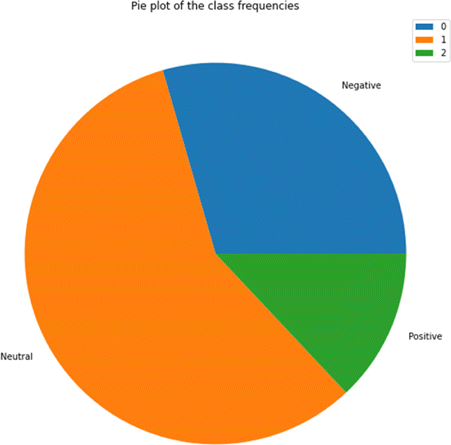

The dataset used to create and test our approaches is an open-access dataset14 gathered for Arabic dialect SA of social media posts. It contains around “52,155” tweets, which fall into three main classes: “Positive”, “Neutral”, and “Negative” (most tweets were labelled with a neutral sentiment).

Fig. 2. Pie plot of the class frequencies.

Source: Self-made.

3.2. Data preprocessing and cleaning

This phase filters the extracted data before analysis. It identifies and removes content that is “non-textual” or “irrelevant” to the research domain.

Several steps prepare the data for the classifier. The suggested architecture and methodology pre-process the collected data through the following operations: “Cleaning”, “Normalization”, “Tokenization”, and “Stemming”.

(1) Cleaning process: The cleaning treatment aims to correct wrong or missing records and remove “Extra white spaces”, “Null values”, and “Foreign characters (like non-Arabic digits, and punctuation marks)”.

(2) Normalization process: This step attempts to convert the Arabic text to its basic form. It is used to manipulate the explicit Arabic text format in order to avoid difficulties in the data training phase. The normalization step aims to

(3) Tokenization process: It is the operation of splitting a sentence into a series of “tokens”. The goal is to identify word boundaries, which are mostly white space characters. In other words, this phase relies mainly on “the white spaces” between words to retrieve the “tokens”.

(4) Stemming process: This step attempts to get the root of each word. In other words, it removes all “prefixes” and “suffixes” from the word and reduces it to its original form.

The NLTK library was employed. It is a free toolkit for Natural Language Processing (NLP) that supports Arabic and many other languages.
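The four preprocessing operations can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the normalization rules (unifying alef variants, mapping alef maqsura and ta marbuta, stripping diacritics and tatweel) are common conventions assumed here, and NLTK's `ISRIStemmer` is only one Arabic stemmer available in NLTK, since the text does not say which stemmer was used. The function names are hypothetical.

```python
import re

try:
    # NLTK is optional in this sketch; the stemmer choice is an assumption.
    from nltk.stem.isri import ISRIStemmer
    _stemmer = ISRIStemmer()
except ImportError:
    _stemmer = None

DIACRITICS = re.compile(r"[\u064B-\u0652\u0640]")  # short vowels, tanween, tatweel
NON_ARABIC = re.compile(r"[^\u0621-\u064A\s]")     # anything but Arabic letters/whitespace


def clean(text):
    """Remove diacritics, foreign characters, digits, punctuation, extra spaces."""
    text = DIACRITICS.sub("", text)      # delete marks without splitting words
    text = NON_ARABIC.sub(" ", text)     # also drops digits of any script
    return re.sub(r"\s+", " ", text).strip()


def normalize(text):
    """Map letter variants to one basic form (assumed rules, lossy by design)."""
    text = re.sub("[إأآ]", "ا", text)    # alef variants -> bare alef
    text = text.replace("ى", "ي")        # alef maqsura -> ya
    return text.replace("ة", "ه")        # ta marbuta -> ha


def tokenize(text):
    """Split on white space, as described in the tokenization step."""
    return text.split()


def stem(tokens):
    """Stem each token when NLTK is installed; otherwise return tokens as-is."""
    if _stemmer is None:
        return tokens
    return [_stemmer.stem(token) for token in tokens]


def preprocess(text, use_stemming=True):
    tokens = tokenize(normalize(clean(text)))
    return stem(tokens) if use_stemming else tokens
```

Calling `preprocess(tweet)` returns a list of tokens ready for the data representation step; passing `use_stemming=False` keeps the surface forms, which can be preferable when the pre-trained embeddings were built on unstemmed text.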

3.3. Data representation

Word embedding techniques give significant results for ASA compared with traditional techniques in the data representation step. These techniques map a word into a vector space and allow the network to learn about the meaning of words. Moreover, word embeddings learned by DL carry contextual semantic and syntactic information, which is beneficial for the ASA task. That is why we chose the FastText and Word2Vec word embedding models for the data representation step in our model.

3.3.1. Word2Vec word embedding model

Word2Vec is considered one of the most useful tools for learning word embeddings with shallow Neural Networks.15 It was developed at Google in 2013 by Tomas Mikolov. The embeddings can be learned with either the Continuous Bag of Words (CBOW) model or the skip-gram model, both of which involve Neural Networks.

In this step of our research study, we used the skip-gram word embedding model.
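To make the skip-gram idea concrete, the sketch below generates the (center word, context word) pairs that a skip-gram model learns to predict. It is a simplified illustration: the fixed `window` parameter and the function name are assumptions, and practical Word2Vec implementations add refinements such as randomly shrunk windows, subsampling of frequent words, and negative sampling.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every token and its neighbours."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


# Each pair is one training example: predict the context from the center word.
print(skipgram_pairs(["a", "b", "c"], window=1))
```

CBOW reverses the direction of prediction: the surrounding context words are used together to predict the center word.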

The model used was built with the Gensim Python library. To load and use this model, we followed these steps:

(1) Install Gensim.

(2) Download the pre-trained model. For this, we used a World Wide Web pages model, “web_sg_300”, which has the following characteristics:

(3) Extract the compressed model files to a directory [e.g. web_sg].

(4) Keep the .npy files. You are going to load the file with no extension.

(5) Load and use the model.
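The loading step could look like the sketch below. `Word2Vec.load` and the `.wv` attribute are part of Gensim; the path `web_sg/web_sg_300` is an assumption that combines the example directory and the model name given in the steps, and the helper names are hypothetical.

```python
def load_word2vec(model_path):
    """Load a saved Gensim Word2Vec model and return its word vectors."""
    # Imported lazily so the helper below works without Gensim installed.
    from gensim.models import Word2Vec
    return Word2Vec.load(model_path).wv


def vector_or_none(vectors, word):
    """Return the vector of `word`, or None when it is out of vocabulary."""
    return vectors[word] if word in vectors else None


# Hypothetical usage, assuming the archive was extracted to ./web_sg and the
# .npy files sit next to the extension-less model file:
# wv = load_word2vec("web_sg/web_sg_300")
# print(vector_or_none(wv, "سعيد"))
```

Returning `None` for unknown words makes the out-of-vocabulary case explicit, which matters for dialectal tweets whose spellings may not appear in a model trained on web pages.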

3.3.2. FastText word embedding model

FastText is an extension of the Word2Vec word embedding model.4 In contrast to Word2Vec, FastText accounts for sub-word information: it trains embeddings for character sequences of length n (n-grams). It therefore handles “rare words” well. Word2Vec cannot provide any vector representation for words that do not occur in its vocabulary, whereas FastText can compose one from the n-grams of the word. This is a powerful benefit of the FastText word embedding model.
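The sub-word idea can be illustrated with a short sketch that extracts the character n-grams of a word. The word is wrapped in the boundary markers `<` and `>`, as in the FastText formulation; the default range of 3 to 6 characters is the one commonly used by FastText, and the function name is hypothetical.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Return all character n-grams of `word`, including boundary markers."""
    wrapped = f"<{word}>"
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    return grams


# Trigrams only, for readability.
print(char_ngrams("where", n_min=3, n_max=3))
```

In FastText, the vector of a word is built from the vectors of its n-grams (together with a vector for the whole word when it is in the vocabulary), so two words that share many n-grams, such as inflected forms of the same stem, end up with related representations.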

In order to use FastText word embedding model, we followed the next steps:

(1) Download the pre-trained model in order to work with FastText. For this, we used a model of pre-trained Arabic word vectors, “cc.ar.300.vec”, which has the following characteristics:

(2) Load the pre-trained model into word vectors, using the word vector format provided by FastText.

(3) Use the Embedding Matrix in the embedding layer to supply the weight of every word in the training data. This is done by
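Steps (2) and (3) can be sketched as follows. The parser relies on the text format of fastText `.vec` files (a header line with the vocabulary size and dimension, then one word and its values per line). The construction of the embedding matrix is an assumption about a typical setup, not the authors' exact procedure: `word_index` is a hypothetical 1-based word-to-index mapping such as the one produced by a tokenizer, row 0 is reserved for padding, and words absent from the file keep an all-zero row.

```python
import io

import numpy as np


def load_vec(handle):
    """Parse fastText text-format vectors from an open file handle."""
    count, dim = map(int, handle.readline().split())  # header: "<count> <dim>"
    vectors = {}
    for line in handle:
        parts = line.rstrip().split(" ")
        vectors[parts[0]] = np.asarray(parts[1:], dtype="float32")
    return vectors, dim


def build_embedding_matrix(word_index, vectors, dim):
    """Row i holds the vector of the word whose index is i."""
    matrix = np.zeros((len(word_index) + 1, dim), dtype="float32")
    for word, i in word_index.items():
        vector = vectors.get(word)
        if vector is not None:  # unknown words stay all-zero
            matrix[i] = vector
    return matrix


# Toy stand-in for open("cc.ar.300.vec", encoding="utf-8").
toy_file = io.StringIO("2 3\ngood 0.1 0.2 0.3\nbad -0.1 -0.2 -0.3\n")
vectors, dim = load_vec(toy_file)
matrix = build_embedding_matrix({"good": 1, "bad": 2, "unseen": 3}, vectors, dim)
print(matrix.shape)
```

The resulting matrix can be passed as the initial weights of an embedding layer, so that each token index in a padded input sequence is replaced by its pre-trained vector.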

3.4. Classification

This operation assigns a specific class to an Arabic text based on its content. This step can be carried out with DL techniques. In our research work, we focused on a very popular technique: “LSTM”. The two classification models (Word2Vec with LSTM and FastText with LSTM) are tuned to find the best model with the most significant weight values.

LSTM: It is an ANN architecture employed in the fields of Artificial Intelligence (AI) and DL.11,16 A common “LSTM” unit comprises a cell, an “input gate”, an “output gate”, and a “forget gate”. The cell remembers values over arbitrary time intervals, and the three gates regulate the flow of information into and out of the cell. Unlike standard “feedforward neural networks”, “LSTM” has feedback connections.17

“LSTM” is well suited to classifying, processing, and making predictions based on “time series” data, since there can be lags of unknown duration between significant events in a “time series”. “LSTMs” were developed to deal with the “vanishing gradient” problem that can be encountered when training traditional “RNNs”. An advantage of “LSTM” over “RNNs”, hidden Markov models, and other sequence learning methods in several applications is its “relative insensitivity” to gap length.
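The role of the cell and the three gates can be made explicit with a single LSTM time step written in NumPy. This is a sketch of the standard LSTM formulation, not of the model trained in this work: the stacking order of the gates in the weight matrices and all names are assumptions chosen for the illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x: input vector (D,); h_prev, c_prev: previous hidden and cell state (H,).
    W: (4H, D), U: (4H, H), b: (4H,), with the four blocks stacked in the
    order input gate, forget gate, candidate, output gate (assumed layout).
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old cell state to keep
    g = np.tanh(z[2 * H:3 * H])  # candidate cell content
    o = sigmoid(z[3 * H:4 * H])  # output gate: how much of the cell to expose
    c = f * c_prev + i * g       # new cell state (the long-term memory)
    h = o * np.tanh(c)           # new hidden state (the feedback connection)
    return h, c


# Toy dimensions: 3 input features, 2 hidden units, all-zero parameters.
D, H = 3, 2
h, c = lstm_step(np.ones(D), np.zeros(H), np.ones(H),
                 np.zeros((4 * H, D)), np.zeros((4 * H, H)), np.zeros(4 * H))
```

The additive update `c = f * c_prev + i * g` is what lets information and gradients persist across many time steps, which is the mechanism behind the reduced sensitivity to gap length.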

3.5. Evaluation

The results generated by the classifier are assessed with the accuracy measure, in order to understand the quality of the classifier’s performance:

Accuracy measure: It is the ratio of correctly classified entities to the total number of entities in the dataset.

$$\text{Accuracy} = \frac{\text{Correct Predictions}}{\text{Total Number}}. \tag{1}$$
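Equation (1) translates directly into code. The sketch below is a plain-Python illustration with a hypothetical function name; the labels in the usage line are made up for the example.

```python
def accuracy(y_true, y_pred):
    """Ratio of correct predictions to the total number of samples."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy of an empty set")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Three of the four predictions match the reference labels.
print(accuracy(["pos", "neg", "neu", "pos"], ["pos", "neg", "pos", "pos"]))
```

Because most tweets in the dataset are neutral, accuracy alone can look favourable for a classifier biased toward the majority class, so it is worth reading it alongside the class frequencies.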

4. Experimental Results

This experimental step highlights the classification results after setting the LSTM parameters on our dataset. To set the parameters, a training set (80%, representing 41,724 comments) and a testing set (20%, representing 10,431 comments) are employed. Running the classifier with different combinations of LSTM parameters can vary the results significantly. The major parameters applied are as follows:
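The 80/20 split sizes can be reproduced with a few lines of Python. The shuffling, the fixed seed, and the function name are assumptions made for this sketch; the text does not state how the split was drawn.

```python
import random


def train_test_split_indices(n_samples, test_ratio=0.2, seed=42):
    """Shuffle sample indices and split them into train and test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_test = round(n_samples * test_ratio)
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = train_test_split_indices(52155)
print(len(train_idx), len(test_idx))
```

With 52,155 tweets this yields 41,724 training and 10,431 testing samples, matching the figures reported above.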

Epoch: It is the number of complete passes over the training set.

Batch Size: It is the number of “samples per gradient update”.

Loss Function: It is a method applied for measuring the discrepancy between “predicted” and “real” values.

Optimizer: It is a method for adjusting “the weights” so as to reduce the loss.

Classification Accuracy: It is the metric employed for evaluating the results.

For this reason, several runs were executed, and the results obtained are logged in Table 1. The very popular optimizer Adam is used with different values of the batch size (100, 250, 500) and of the number of epochs (10, 20, 30, 40).
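The twelve runs correspond to a grid over the epoch and batch size values. The sketch below enumerates that grid; `train_and_evaluate` is a hypothetical callback standing in for the actual training code, which is not given in the text, and the dummy function only demonstrates the expected interface.

```python
from itertools import product

EPOCHS = (10, 20, 30, 40)
BATCH_SIZES = (100, 250, 500)


def run_grid(train_and_evaluate):
    """Call `train_and_evaluate` once per (epochs, batch_size) combination."""
    results = []
    for epochs, batch_size in product(EPOCHS, BATCH_SIZES):
        metrics = train_and_evaluate(epochs=epochs, batch_size=batch_size)
        results.append({"epochs": epochs, "batch_size": batch_size, **metrics})
    return results


def dummy_train_and_evaluate(epochs, batch_size):
    # Placeholder: a real implementation would train the LSTM and return
    # its measured accuracy, loss, and running time.
    return {"test_accuracy": None}


results = run_grid(dummy_train_and_evaluate)
print(len(results))
```

Keeping each run as a dictionary makes it straightforward to log the results as a table with one column per configuration.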

| Epoch | 10 | 10 | 10 | 20 | 20 | 20 | 30 | 30 | 30 | 40 | 40 | 40 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Batch size | 100 | 250 | 500 | 100 | 250 | 500 | 100 | 250 | 500 | 100 | 250 | 500 |
| Training accuracy (%) | 83.37 | 84.16 | 83.06 | 82.21 | 83.54 | 83.37 | 82.21 | 81.92 | 83.88 | 84.11 | 84.21 | 83.09 |
| Testing accuracy (%) | 82.0 | 82.0 | 81.57 | 81.79 | 81.57 | 81.34 | 81.70 | 81.66 | 81.69 | 82.14 | 81.92 | 81.64 |
| Training loss (%) | 42.29 | 40.31 | 43.04 | 45.10 | 41.64 | 41.87 | 45.10 | 46.02 | 41.03 | 40.29 | 40.12 | 42.89 |
| Testing loss (%) | 47.58 | 47.80 | 47.61 | 47.37 | 47.49 | 48.0 | 47.49 | 47.52 | 47.14 | 48.08 | 47.29 | 47.97 |
| Time (s) | 1881.6 | 1708.4 | 1781 | 3992.8 | 3343.9 | 3532.4 | 5788.9 | 4972.6 | 5147.1 | 7821.9 | 6645.6 | 6869.4 |

4.1. Classification using LSTM with Word2Vec

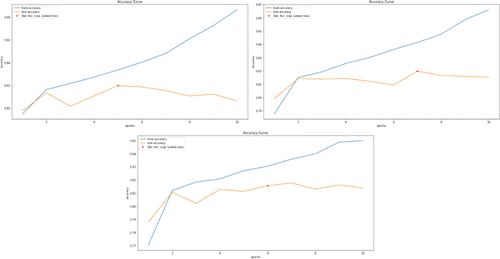

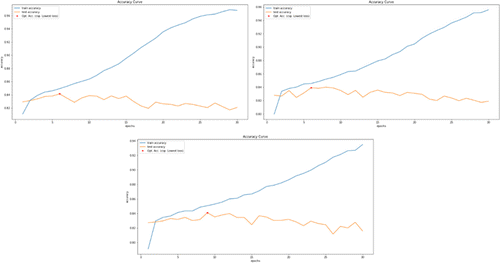

To select the parameter values that attain the best accuracy, we rely on the results presented in Table 1 and Figs. 3–6.

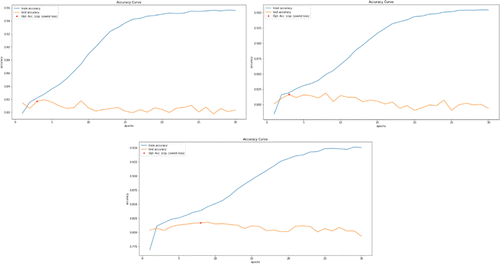

Fig. 3. The training and testing accuracy values of the classification of LSTM with Word2Vec according to epoch 10 (batch size: 100 on the left, 250 on the right, and 500 on the bottom).

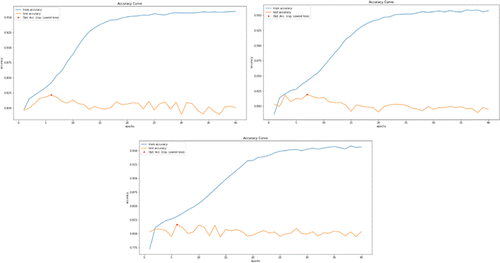

Fig. 4. The training and testing accuracy values of the classification of LSTM with Word2Vec according to epoch 20 (batch size: 100 on the left, 250 on the right, and 500 on the bottom).

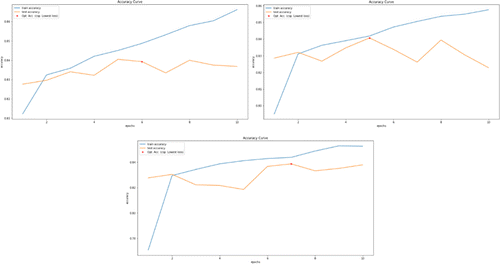

Fig. 5. The training and testing accuracy values of the classification of LSTM with Word2Vec according to epoch 30 (batch size: 100 on the left, 250 on the right, and 500 on the bottom).

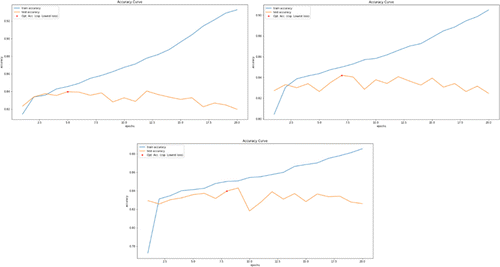

Fig. 6. The training and testing accuracy values of the classification of LSTM with Word2Vec according to epoch 40 (batch size: 100 on the left, 250 on the right, and 500 on the bottom).

In Fig. 3, the training accuracy for the optimizer “Adam” is presented for three batch sizes (batch size: 100 on the left, 250 on the right, and 500 on the bottom) over an increasing number of epochs. Batch sizes 100 and 250 started with their lowest accuracy values, 79.5% and 78.7%, respectively, and improved steadily as the number of epochs increased (at epoch 10 the accuracy values are 88.6% and 86.7%, respectively). Batch size 500 started with a low accuracy of 77%, and its results stabilize at epoch 9 with an accuracy value of 84.8%.

In Fig. 4, the training accuracy of the Adam optimizer is shown for the three batch sizes (batch size: 100 on the left, 250 on the right, and 500 on the bottom) over an increasing number of epochs. Batch size 100 started with its lowest accuracy, 79.5%, and its results stabilize at epoch 19 with an accuracy value of 95.1%. Batch sizes 250 and 500 started with their lowest accuracy values, 78.7% and 77.6%, respectively, and improved steadily as the number of epochs increased (at epoch 20 the accuracy values are 94.5% and 93.3%, respectively).

Figure 5 presents the training accuracy for the optimizer Adam for three batch sizes (batch size: 100 on the left, 250 on the right, and 500 on the bottom) over an increasing number of epochs. Batch sizes 100, 250, and 500 started with their lowest accuracy values, 79.8%, 78.5%, and 76.7%, respectively, and improved steadily as the number of epochs increased. The results stabilize at epochs 24 (with an accuracy value of 95.6%), 26 (with an accuracy value of 95.2%), and 29 (with an accuracy value of 95%), respectively, for the three batch sizes.

Figure 6 illustrates the training accuracy for the optimizer Adam for the same batch sizes (batch size: 100 on the left, 250 on the right, and 500 on the bottom) over an increasing number of epochs. Batch sizes 100, 250, and 500 started with their lowest accuracy values, 79.7%, 78.5%, and 77%, respectively, and improved steadily as the number of epochs increased. The results stabilize at epochs 28 (with an accuracy value of 96%), 28 (with an accuracy value of 95.8%), and 31 (with an accuracy value of 95.4%), respectively, for the three batch sizes.

4.2. Classification using LSTM with FastText

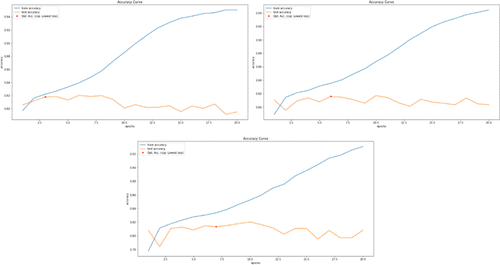

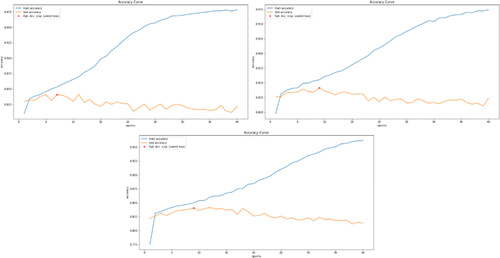

To select the parameter values that attain the best accuracy, we rely on the results presented in Table 2 and Figs. 7–10.

Fig. 7. The training and testing accuracy values of the classification of LSTM with FastText according to epoch 10 (batch size: 100 on the left, 250 on the right, and 500 on the bottom).

Fig. 8. The training and testing accuracy values of the classification of LSTM with FastText according to epoch 20 (batch size: 100 on the left, 250 on the right, and 500 on the bottom).

Fig. 9. The training and testing accuracy values of the classification of LSTM with FastText according to epoch 30 (batch size: 100 on the left, 250 on the right, and 500 on the bottom).

Fig. 10. The training and testing accuracy values of the classification of LSTM with FastText according to epoch 40 (batch size: 100 on the left, 250 on the right, and 500 on the bottom).

| Epoch | 10 | 10 | 10 | 20 | 20 | 20 | 30 | 30 | 30 | 40 | 40 | 40 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Batch size | 100 | 250 | 500 | 100 | 250 | 500 | 100 | 250 | 500 | 100 | 250 | 500 |
| Training accuracy (%) | 84.87 | 84.18 | 84.38 | 84.56 | 85 | 85.02 | 84.94 | 84.57 | 85.06 | 85.3 | 85.51 | 84.99 |
| Testing accuracy (%) | 83.93 | 84.07 | 83.37 | 83.97 | 84.20 | 83.98 | 84.14 | 83.92 | 84.1 | 84.07 | 84.14 | 84.04 |
| Training loss (%) | 38.11 | 40.15 | 39.35 | 38.99 | 38.05 | 38.23 | 38.28 | 38.95 | 37.55 | 37.2 | 36.7 | 37.91 |
| Testing loss (%) | 42.09 | 42.23 | 42.43 | 42.19 | 41.81 | 42.19 | 41.61 | 41.8 | 42.77 | 41.99 | 42.83 | 42.41 |
| Time (s) | 1971.6 | 1690.5 | 1916.8 | 3908.2 | 3335.5 | 3447.9 | 6158.9 | 5055.3 | 5420.5 | 8124.3 | 6719.7 | 6930.2 |
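The testing accuracies of the two results tables can be compared with a short script. The values below are copied from the tables; the sketch assumes, as the section structure suggests, that the first table reports LSTM with Word2Vec and the second LSTM with FastText. The helper names are hypothetical.

```python
EPOCHS = (10, 20, 30, 40)
BATCH_SIZES = (100, 250, 500)
# Same column order as the tables: epochs outer, batch sizes inner.
CONFIGS = [(e, b) for e in EPOCHS for b in BATCH_SIZES]

WORD2VEC_TEST_ACC = [82.0, 82.0, 81.57, 81.79, 81.57, 81.34,
                     81.70, 81.66, 81.69, 82.14, 81.92, 81.64]
FASTTEXT_TEST_ACC = [83.93, 84.07, 83.37, 83.97, 84.20, 83.98,
                     84.14, 83.92, 84.1, 84.07, 84.14, 84.04]


def best_config(accuracies):
    """Return ((epochs, batch_size), accuracy) of the best-scoring column."""
    idx = max(range(len(accuracies)), key=accuracies.__getitem__)
    return CONFIGS[idx], accuracies[idx]


def mean(values):
    return sum(values) / len(values)


w2v_best = best_config(WORD2VEC_TEST_ACC)
ft_best = best_config(FASTTEXT_TEST_ACC)
mean_gain = mean(FASTTEXT_TEST_ACC) - mean(WORD2VEC_TEST_ACC)
print(w2v_best, ft_best, round(mean_gain, 2))
```

On these figures, FastText gives a higher testing accuracy than Word2Vec in every one of the twelve configurations, with an average gain of roughly 2.2 percentage points.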

In Fig. 7, the training accuracy for the optimizer Adam is presented for three batch sizes (batch size: 100 on the left, 250 on the right, and 500 on the bottom) over an increasing number of epochs. Batch sizes 100 and 250 started with their lowest accuracy values, 81.2% and 79.6%, respectively, and improved steadily as the number of epochs increased (at epoch 10 the accuracy values are 86.7% and 85.8%, respectively). Batch size 500 started with a low accuracy of 77.2%, and its results stabilize at epoch 9 with an accuracy value of 85.5%.

In Fig. 8, the training accuracy for the Adam optimizer is shown for the three batch sizes (batch size: 100 on the left, 250 on the right, and 500 on the bottom) over an increasing number of epochs. Batch sizes 100, 250, and 500 started with their lowest accuracy values, 81.3%, 80.6%, and 77.4%, respectively, and improved steadily as the number of epochs increased (at epoch 20 the accuracy values are 93.3%, 90.6%, and 88.8%, respectively).

In Fig. 9, the training accuracy for the Adam optimizer is presented for three batch sizes (batch size: 100 on the left, 250 on the right, and 500 on the bottom) over an increasing number of epochs. Batch size 100 started with its lowest accuracy, 81.2%, and its results stabilize at epoch 29 with an accuracy value of 96.9%. Batch sizes 250 and 500 started with their lowest accuracy values, 80% and 79%, respectively, and improved steadily as the number of epochs increased (at epoch 30 the accuracy values are 95.5% and 93.6%, respectively).

Figure 10 shows the training accuracy for the Adam optimizer for the three batch sizes (batch size: 100 on the left, 250 on the right, and 500 on the bottom) over an increasing number of epochs. Batch size 100 started with its lowest accuracy, 81%, and its results stabilize at epoch 36 with an accuracy value of 98%. Batch sizes 250 and 500 started with their lowest accuracy values, 79.7% and 77.5%, respectively, and improved steadily as the number of epochs increased (at epoch 40 the accuracy values are 97.5% and 96.2%, respectively).

5. Discussion

Various Neural Network models could be very useful in the field of ASA. However, this evaluation study relies on LSTM because of its very high performance and its numerous advantages in the ASA domain compared to other models.

In our comparative study, we focused on the Word2Vec and FastText word embedding models. Every word embedding model has its own advantages and characteristics for ASA, and each was created to address specific needs in the use of Neural Network models in the domain of ASA.

In the experiment step, we described two architectures: “LSTM with Word2Vec” and “LSTM with FastText”. We compared and assessed these architectures using the popular optimizer Adam with various parameters: batch sizes (“100”, “250”, “500”), epochs (“10”, “20”, “30”, “40”), Loss Function, and Classification Accuracy.

This section analyzes and discusses the experimental results of these two architectures. The figures and tables highlight the training and testing accuracy results for several batch sizes and epochs using the Adam optimizer. The tables show that the training and testing accuracy values change only slightly across batch sizes at every epoch setting, while the running time goes up as the number of epochs rises.

To sum up the results of the experiment phase, which relied on setting the LSTM parameters using the Adam optimizer together with the Word2Vec and FastText word embedding models, we conclude that the LSTM model based on FastText can successfully improve the accuracy of text classification.

6. Conclusion

In this in-depth comparative evaluation, we described a very popular Neural Network algorithm, “LSTM”, together with two word embedding models, “Word2Vec” and “FastText”, that are deemed very useful in the domain of ASA.

In the experiment phase, the performance of the LSTM model using Word2Vec is compared with that of the LSTM model using FastText. The results of our experimental phase confirm that the LSTM model with FastText can successfully improve text classification accuracy.