Deep learning algorithms to segment and quantify the choroidal thickness and vasculature in swept-source optical coherence tomography images

Abstract

Accurate segmentation of the choroid and quantification of choroidal thickness (CT) and vasculature are important for analyzing and understanding choroid-related ocular diseases. In this paper, we propose and implement a novel and practical method based on a deep learning algorithm, the residual U-Net, to segment and quantify the CT and vasculature automatically. With limited training and validation data, the residual U-Net identified the choroidal boundaries as precisely as manual segmentation by an experienced operator. The trained network was then applied to 217 images, and six choroid-related parameters were extracted. The intraclass correlation coefficients (ICC) between the manual and automatic segmentation methods were 0.964 or higher. The automatic method also achieved good reproducibility, with ICC of 0.913 or higher, indicating good consistency of the automatic segmentation method. Our results suggest that deep learning algorithms can segment the choroidal boundaries accurately and efficiently, which will be helpful for quantifying the CT and vasculature.

1. Introduction

The choroid is a complex vascular layer lying between the retina and the sclera. It supports photoreceptor metabolism and supplies oxygen and blood flow to the outer retinal layers, especially the avascular area of the macula. Structural and vascular abnormalities of the choroid are associated with many ocular diseases, such as central serous retinopathy,1 polypoidal choroidal vasculopathy,2 diabetic retinopathy,3 age-related macular degeneration,4 and retinal vein occlusion.5 Recently, the relationship between choroidal thickness (CT) and choroidal vasculature has attracted increasing attention in multiple ocular diseases.6,7 For example, CT has been found to correlate positively with the density of large choroidal vessels in eyes with nonexudative age-related macular degeneration.6 Accurate measurement of CT and of changes in vascular structure is therefore of great importance and will facilitate the study of the choroid and its vasculature in different ocular diseases.

Because light is scattered by the retinal pigment epithelium (RPE) layer, the posterior boundary of the choroid has low intensity contrast and is difficult to visualize. Owing to its deeper penetration through the RPE layer, swept-source optical coherence tomography (SS-OCT) allows better visualization of the choroid, including the choroid-sclera junction. In our previous studies, we showed that semi-automatic algorithms based on gradient information and shortest-path search had high repeatability in segmenting the choroid, with manual correction carried out whenever segmentation errors were found.8,9,10,11,12 However, this method and the current manual approaches are not suitable for clinical work because outlining the choroidal boundary manually is time-consuming and tedious. Manual measurement also lacks objectivity and can introduce operator bias and inter-observer variability. A more effective and precise automatic approach to segmenting and quantifying the choroid is therefore needed in clinical practice. The purpose of this study was to develop and validate a fully automatic method capable of segmenting and quantifying the CT and vasculature in optical coherence tomography images using deep learning algorithms.

2. Methods

This study adhered to the tenets of the Declaration of Helsinki and was approved by the Ethics Committee of Wenzhou Medical University. Written informed consent was obtained from all recruited subjects before the examination.

2.1. Study subjects

Twenty-three healthy subjects (nine males and 14 females) were recruited from the staff of the Eye Hospital of Wenzhou Medical University. Their average age was 26.27±2.65 years, with an average axial length of 24.94±1.17mm and an average refraction of −3.01±2.15 diopters. All subjects had a best corrected visual acuity of 20/20 or better in both eyes. The subjects were examined by an experienced ophthalmologist (YJ) using a slit-lamp biomicroscope, and all subjects were imaged during working hours (from 9:00 AM to 5:00 PM). Subjects with a refractive error between −6.00D and +3.00D were recruited. The exclusion criteria included a history of ocular surgery or trauma, systemic diseases, and relevant ocular treatment. Subjects with hypertension, diabetes, age-related macular degeneration, or other retinopathies that affect retinal morphology were also excluded.

2.2. Image acquisition and manual annotation of choroid

All subjects were imaged with an SS-OCT system (VG200S, SVision Imaging, Henan, China) using an 18-line radial scan pattern. The system has a central wavelength of 1050nm, a scan depth of 3mm in tissue, an axial resolution of less than 6.3μm, and a lateral resolution of less than 20μm. Each image is an average of 64 overlapped consecutive scans centered on the fovea and has a resolution of 2048×1561 pixels, representing an actual area of 12mm×3mm. All subjects were imaged by the same experienced operator (YJ). Each subject was instructed to stare at the internal fixation target, and two images were acquired at the same location; between the two acquisitions, the subject was instructed to move their head away, rest for 30 seconds, and reposition their head in front of the scanner. The signal strength represents the overall intensity of the imaged feature: the linear OCT signal intensity is defined as (linear OCT signal − background)², and the mean linear intensity is displayed in the user interface. Images with an OCT signal strength of less than 60 were excluded from this study. In total, 450 images from 12 healthy subjects were selected for manual annotation as the ground truth and divided into training and validation sets at a ratio of 4:1. A semi-automatic algorithm based on gradient information and shortest-path search was used to segment the choroid; where the segmentation was wrong, it was corrected manually as described in our previous studies.8,9,10,11,12 The images were annotated by an experienced clinician (SC). For each image, two lines were annotated: the upper boundary was marked at the hyper-reflective line corresponding to the junction of the RPE layer and Bruch's membrane, and the lower boundary at the light pixels at the outer border of the choroidal stroma.13
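As a quick arithmetic check on the acquisition and dataset figures above, the following sketch derives the physical pixel spacing and the training and validation counts. It assumes that the 2048-pixel dimension spans the 12mm lateral extent and the 1561-pixel dimension spans the 3mm depth; the function names are illustrative and are not part of the original pipeline.

```python
def pixel_spacing_um(extent_mm: float, n_pixels: int) -> float:
    """Physical size of one pixel in micrometres."""
    return extent_mm * 1000.0 / n_pixels


def split_counts(n_images: int, train_parts: int = 4, val_parts: int = 1):
    """Split n_images into (train, validation) counts at train_parts:val_parts."""
    n_val = n_images * val_parts // (train_parts + val_parts)
    return n_images - n_val, n_val


# Assumption: 2048 px cover 12 mm laterally, 1561 px cover 3 mm in depth.
lateral_um = pixel_spacing_um(12.0, 2048)  # about 5.86 um per pixel
axial_um = pixel_spacing_um(3.0, 1561)     # about 1.92 um per pixel

# 450 annotated images split 4:1 into training and validation sets.
n_train, n_val = split_counts(450)
print(lateral_um, axial_um, n_train, n_val)
```

Under these assumptions, a 0.02mm boundary error corresponds to roughly ten pixels in depth at the native image resolution.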

2.3. Data augmentation

We used data augmentation during the training phase to increase data variability. Augmentation was performed with the Keras ImageDataGenerator using the following settings: rotation range 0.2, width shift range 0.05, height shift range 0.05, shear range 0.05, zoom range 0.05, and fill mode "nearest". All images were also randomly flipped horizontally. After data augmentation, the total number of training images increased to 1436.
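The augmentation settings listed above can be written down as keyword arguments. The sketch below is a minimal illustration, assuming each setting maps directly onto the ImageDataGenerator argument of the same name; the constant and function names are hypothetical, and TensorFlow is imported lazily so that the settings can be inspected without it.

```python
# Settings copied from the text. The keys are the ImageDataGenerator
# keyword arguments they are assumed to correspond to.
AUGMENTATION_KWARGS = {
    "rotation_range": 0.2,       # Keras interprets this value in degrees
    "width_shift_range": 0.05,   # fraction of image width
    "height_shift_range": 0.05,  # fraction of image height
    "shear_range": 0.05,
    "zoom_range": 0.05,
    "fill_mode": "nearest",
    "horizontal_flip": True,     # random horizontal flipping
}


def build_generator():
    """Create the generator (requires TensorFlow to be installed)."""
    from tensorflow.keras.preprocessing.image import ImageDataGenerator

    return ImageDataGenerator(**AUGMENTATION_KWARGS)
```

For segmentation, the image and its annotation have to receive the same random transform. A common way to do this, not confirmed by the text, is to run two such generators with an identical random seed.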

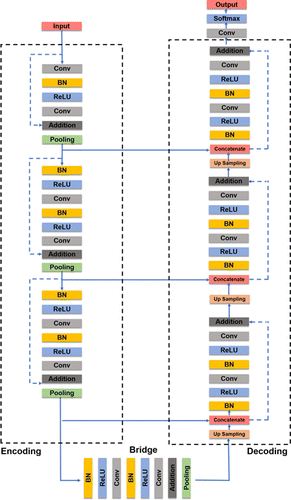

2.4. Deep learning approach to identify choroid boundary

The neural networks were implemented using the Keras library with the TensorFlow backend. The choroidal boundaries were segmented with a modified residual U-Net architecture, as shown in Fig. 1.14 The main difference between the residual U-Net and the traditional U-Net is that the conventional convolution units in the deep network are replaced by residual units. The residual U-Net can achieve better performance with fewer parameters than traditional approaches. The current network had 43,588,832 trainable parameters. The SS-OCT images were resized from 2048×1561 pixels to 512×512 pixels and used as the input images. The batch size was set to 1 and the number of training epochs to 800. Training stopped early when the learning rate had not decreased over the 10 most recent epochs. Training was run on two NVIDIA TITAN RTX graphics cards.
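The stopping rule described above can be read as a patience-based criterion. The sketch below is a minimal, framework-free illustration rather than the training code used in this study. It assumes that one monitored value per epoch is checked (for example a validation loss, which is an assumption here) and that training stops after 10 consecutive checks without a decrease, up to the 800-epoch limit. The class and function names are hypothetical.

```python
class PatienceStopper:
    """Signal a stop after `patience` consecutive checks without a decrease."""

    def __init__(self, patience: int = 10):
        self.patience = patience
        self.best = float("inf")
        self.stale = 0

    def update(self, value: float) -> bool:
        """Record one new value; return True when training should stop."""
        if value < self.best:
            self.best = value
            self.stale = 0
        else:
            self.stale += 1
        return self.stale >= self.patience


def epochs_run(values, patience: int = 10, max_epochs: int = 800) -> int:
    """Number of epochs executed before stopping, given one value per epoch."""
    stopper = PatienceStopper(patience)
    history = list(values)[:max_epochs]
    for epoch, value in enumerate(history, start=1):
        if stopper.update(value):
            return epoch
    return len(history)
```

In Keras, the same behaviour is usually obtained with a callback instead of a hand-written loop.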

Fig. 1. Architecture of the residual U-Net for the localization of choroidal boundaries.

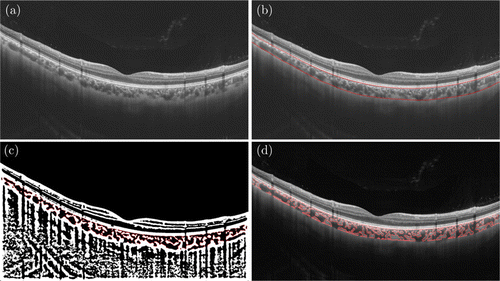

2.5. Choroidal parameters

After segmenting the choroidal boundaries, we automatically obtained the corresponding parameters: choroidal vascularity index (CVI), choroidal stromal index (CSI), luminal area (LA), stromal area (SA), total choroidal area (TCA), and CT. Similar approaches have been applied in previous studies15,16,17,18,19,20,21 (Fig. 2). In brief, the images were converted to red, green, blue (RGB) color to select the dark pixels. Subsequently, the LA and SA were demarcated using Niblack's auto local threshold algorithm in MATLAB 2017a. In the binarized images, LA and SA were represented by 0 and 1, respectively, and the boundary between LA and SA was marked with a red dotted line. The total subfoveal circumscribed choroidal area and the area of dark pixels were then calculated. The LA was defined as the area of dark pixels, and the SA was obtained by subtracting the LA from the TCA. The vascularity status of the choroid (CVI) was calculated by dividing LA by TCA.
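The binarization and area calculations described above can be sketched in a few lines. This is a minimal pure-Python illustration, not the MATLAB implementation used in the study. The window radius, the value of k, and the function names are assumptions, and CSI is taken to be SA divided by TCA, which the text does not state explicitly.

```python
from statistics import mean, pstdev


def niblack_binarize(img, radius=1, k=-0.2):
    """Niblack local threshold: T = local mean + k * local standard deviation.

    Returns 1 for bright (stromal) pixels and 0 for dark (luminal) pixels.
    `radius` and `k` are illustrative values, not taken from the paper.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            threshold = mean(window) + k * pstdev(window)
            out[y][x] = 1 if img[y][x] > threshold else 0
    return out


def choroid_indices(binary, mask, pixel_area_mm2):
    """LA, SA, TCA, CVI and CSI inside the choroid mask (1 = inside)."""
    total = sum(m for row in mask for m in row)
    luminal = sum(1 for brow, mrow in zip(binary, mask)
                  for b, m in zip(brow, mrow) if m and b == 0)
    tca = total * pixel_area_mm2
    la = luminal * pixel_area_mm2
    sa = tca - la                      # SA = TCA - LA, as in the text
    return {"LA": la, "SA": sa, "TCA": tca,
            "CVI": la / tca,           # CVI = LA / TCA, as in the text
            "CSI": sa / tca}           # assumed definition of CSI
```

With this definition CVI and CSI sum to 1, so they carry the same information with opposite sign.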

Fig. 2. Example of a raw SS-OCT image and the procedure of automatic identification. (a) Raw SS-OCT image; (b) automatic identification of the upper and lower choroidal boundaries; (c) binarized image of the choroid and (d) demarcation of luminal area (LA) and stromal area (SA) with a red dotted line.

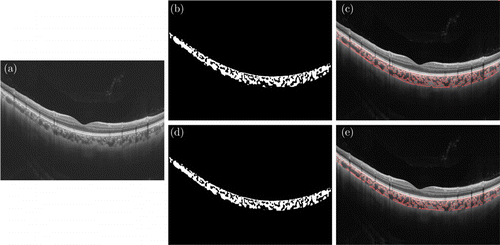

2.6. Application of residual U-Net in SS-OCT images

Another 217 SS-OCT images from 11 subjects were selected separately as the test set and analyzed by both the manual and automatic methods to test the segmentation results, as shown in Fig. 3. To test the repeatability and reproducibility of the automatic segmentation system based on the deep learning algorithm, 129 repeated SS-OCT scans at the same location from eight subjects (258 images in total), acquired by the same operator (YJ), were used.

Fig. 3. Illustration of the difference between manual and automatic methods. (a) Raw SS-OCT image; (b, c) upper row: automatic results based on deep learning and (d, e) lower row: manual results based on our previous methods.

2.7. Statistical analysis

Statistical analysis was performed with SPSS 22.0 (SPSS Inc, Chicago, IL, USA). All data are presented as mean±standard deviation (SD). A paired t-test was used to compare the automated and manual results. P-values less than 0.05 were considered statistically significant. The coefficient of variability (CoV) was defined as the within-subject SD divided by the within-subject mean. Intraclass correlation coefficients (ICC) were calculated on the basis of mixed-model analysis for each condition. An ICC value close to 1 indicates high repeatability and reproducibility. The 95% limits of agreement (LOA) for repeatability and reproducibility were defined as the mean difference ±1.96 SD between the two measurements.
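The agreement statistics defined above can be illustrated with a short sketch for paired measurements. It is not the SPSS procedure used in this study. The ICC form shown is the two-way mixed, single-measure, consistency form, often written ICC(3,1), which is an assumption because the text does not name the exact model. The CoV is expressed in percent and uses the usual paired-data estimate of the within-subject SD, which is also an assumption.

```python
from math import sqrt
from statistics import mean, stdev


def bland_altman(first, second):
    """Mean difference (second minus first), its SD, and the 95% LOA."""
    diffs = [b - a for a, b in zip(first, second)]
    md, sd = mean(diffs), stdev(diffs)
    return md, sd, (md - 1.96 * sd, md + 1.96 * sd)


def within_subject_cov(first, second):
    """Within-subject SD divided by the overall mean, in percent."""
    n = len(first)
    ws_var = sum((a - b) ** 2 for a, b in zip(first, second)) / (2 * n)
    grand_mean = mean(list(first) + list(second))
    return 100.0 * sqrt(ws_var) / grand_mean


def icc_consistency(first, second):
    """ICC(3,1): two-way mixed model, single measure, consistency."""
    n, k = len(first), 2
    rows = list(zip(first, second))
    grand = mean(list(first) + list(second))
    ss_rows = k * sum((mean(r) - grand) ** 2 for r in rows)
    ss_cols = n * sum((mean(c) - grand) ** 2 for c in (first, second))
    ss_total = sum((v - grand) ** 2 for r in rows for v in r)
    ms_rows = ss_rows / (n - 1)
    ms_err = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
```

A constant offset between the two measurement series leaves this consistency ICC at 1 while shifting the Bland–Altman mean difference, which is why both statistics are reported.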

3. Results

3.1. Efficiency of the residual U-Net

We measured the location of the choroidal boundaries with the deep learning algorithm and compared the results with the ground truth to assess the efficiency of the residual U-Net. The segmentation error was graded quantitatively with failure ratios ranging from 0 to 3. Failure ratio 0 indicated that the distance between the automatic segmentation and the ground truth was less than 0.02mm; failure ratio 1, a distance between 0.02mm and 0.05mm; failure ratio 2, a distance between 0.05mm and 0.08mm; and failure ratio 3, a distance of more than 0.08mm. Table 1 shows the failure ratios between the manual and automatic segmentation methods on the upper and lower choroidal boundaries.

| | Failure ratio 0 (%) | Failure ratio 1 (%) | Failure ratio 2 (%) | Failure ratio 3 (%) |
|---|---|---|---|---|
| Upper boundary | 91.92 | 3.54 | 0.22 | 4.32 |
| Lower boundary | 45.76 | 31.58 | 13.23 | 9.43 |
| Total | 68.84 | 17.56 | 6.72 | 6.88 |
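The failure-ratio grading used for the table above can be sketched as a simple binning of boundary distances. This is an illustrative sketch only. The text does not state how values exactly on a threshold are graded or how the distance per image is computed, so the boundary handling and the function names here are assumptions.

```python
def failure_ratio(distance_mm: float) -> int:
    """Grade a boundary distance (mm) into failure ratio 0, 1, 2 or 3."""
    d = abs(distance_mm)
    if d < 0.02:
        return 0
    if d < 0.05:
        return 1
    if d <= 0.08:   # assumption: exactly 0.08 mm still counts as grade 2
        return 2
    return 3


def grade_percentages(distances_mm):
    """Percentage of distances falling into each failure ratio (0 to 3)."""
    counts = [0, 0, 0, 0]
    for d in distances_mm:
        counts[failure_ratio(d)] += 1
    n = len(distances_mm)
    return [100.0 * c / n for c in counts]
```

For example, `grade_percentages` applied to one distance per image for a given boundary would produce one row of such a table.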

3.2. Comparison between manual and automatic segmentation

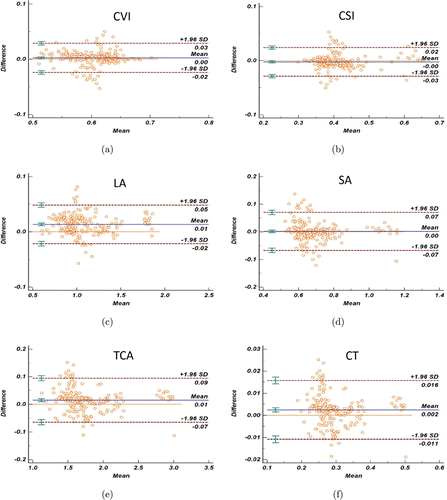

For the comparison between the manual and automatic segmentation methods, Table 2 shows the differences between the two methods (automatic results minus manual results) for six choroid-related parameters. The agreement between automatic and manual segmentation of the same SS-OCT images was high. The Bland–Altman plots (Fig. 4) showed that almost 95% of the points, each representing the relative difference between the automatic and manual values, lay within the LOA. For the CT, the ICC value was 0.994 with a CoV of 2.284. For the other five choroidal parameters (CVI, CSI, LA, SA, and TCA), the ICC values were 0.966, 0.977, 0.977, 0.964, and 0.994, with CoV values of 2.230, 3.277, 1.653, 5.024, and 2.284, respectively.

Fig. 4. Difference assessment between automatic and manual segmentation in 217 SS-OCT images on various parameters (Bland–Altman plots): (a) choroidal vascularity index (CVI); (b) choroidal stromal index (CSI); (c) luminal area (LA); (d) stromal area (SA); (e) the total choroidal area (TCA); (f) choroidal thickness (CT). Blue lines represent the mean relative difference of segmentations (automatic results minus manual results), and red dashed lines represent the 95% LOA. The 95% confidence intervals of the mean relative difference and of the 95% LOA are added on the corresponding dashed lines for all choroidal parameters.

| Parameters | CVI | CSI | LA (mm²) | SA (mm²) | TCA (mm²) | CT (mm) |
|---|---|---|---|---|---|---|
| Mean±SD | 0.002±0.013 | −0.002±0.013 | 0.013±0.018 | 0.001±0.035 | 0.015±0.041 | 0.002±0.007 |
| 95% LOA | [−0.024, 0.029] | [−0.029, 0.024] | [−0.022, 0.048] | [−0.068, 0.070] | [−0.065, 0.094] | [−0.011, 0.016] |
| ICC | 0.966 | 0.977 | 0.997 | 0.964 | 0.994 | 0.994 |
| CoV | 2.230 | 3.277 | 1.653 | 5.024 | 2.284 | 2.284 |

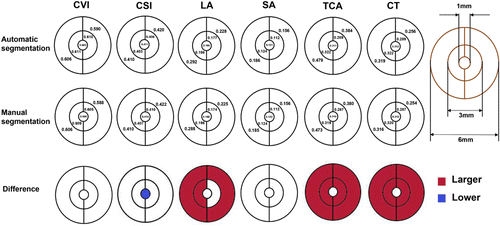

We further divided the 6mm×6mm macula-centered analysis area into five regions: a 1mm-diameter circle centered on the fovea, surrounded by an inner concentric ring 1–3mm from the fovea and an outer concentric ring 3–6mm from the fovea, with the inner and outer rings each divided vertically into two equal regions (Fig. 5). Briefly, the values of CVI, CSI, and SA were essentially equal between automatic and manual segmentation, whereas the automatic values of LA, TCA, and CT were significantly higher than the manual values in most regions.
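The five-region layout described above can be expressed as a small lookup on the lateral position along a scan line. The sketch below is an assumption-laden illustration. It treats the 1mm, 3mm, and 6mm figures as diameters (radii of 0.5mm, 1.5mm, and 3.0mm), takes a signed lateral offset from the fovea as input, and uses the hypothetical labels "left" and "right" for the two halves of each ring.

```python
def macular_region(offset_mm: float) -> str:
    """Map a signed lateral offset from the fovea (mm) to one of five regions."""
    r = abs(offset_mm)
    if r <= 0.5:                       # 1 mm diameter central circle
        return "central"
    side = "left" if offset_mm < 0 else "right"
    if r <= 1.5:                       # inner ring, 1-3 mm diameter
        return f"inner-{side}"
    if r <= 3.0:                       # outer ring, 3-6 mm diameter
        return f"outer-{side}"
    return "outside"                   # beyond the 6 mm analysis area


regions = [macular_region(x) for x in (-2.5, -1.0, 0.2, 1.0, 2.5)]
print(regions)
```

Each parameter can then be averaged over the columns of the segmented image that fall into the same region.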

Fig. 5. Difference assessment between automatic and manual segmentation in 217 SS-OCT images in five subregions (automatic results minus manual results). Red indicates that the automatic segmentation value is significantly higher than the manual segmentation value; blue indicates the opposite.

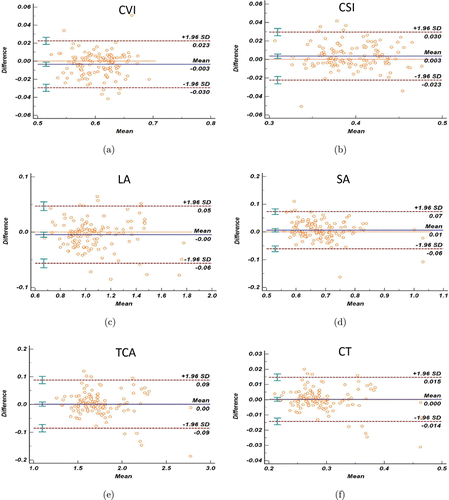

3.3. Comparison between repeated automatic segmentation

For the repeatability and reproducibility of automatic segmentation based on the deep learning algorithm, the Bland–Altman plots (Fig. 6) showed that the differences between the repeated automatic results (the second automatic results minus the first automatic results) were relatively small. As shown in Table 3, agreement between the repeated automatic segmentations was high for the various choroidal parameters. For example, for choroidal structure parameters such as CT, the CoV was 2.526 with an ICC of 0.987. For choroidal vasculature parameters such as CVI, the CoV was 2.187 with an ICC of 0.923.

Fig. 6. Difference assessment between the automatic segmentation in 129 repeated SS-OCT scans at the same location (258 images in total) on various parameters (Bland–Altman plots): (a) choroidal vascularity index (CVI); (b) choroidal stromal index (CSI); (c) luminal area (LA); (d) stromal area (SA); (e) the total choroidal area (TCA); (f) choroidal thickness (CT). Blue lines represent the mean relative difference of segmentations (the second automatic results minus the first automatic results), and red dashed lines represent the 95% LOA. The 95% confidence intervals of the mean relative difference and of the 95% LOA are added on the corresponding dashed lines for all choroidal parameters.

| Parameters | CVI | CSI | LA (mm²) | SA (mm²) | TCA (mm²) | CT (mm) |
|---|---|---|---|---|---|---|
| Mean±SD | −0.003±0.013 | 0.003±0.013 | −0.005±0.026 | 0.006±0.034 | 0.001±0.044 | 0.000±0.007 |
| 95% LOA | [−0.030, 0.023] | [−0.023, 0.030] | [−0.056, 0.047] | [−0.061, 0.073] | [−0.085, 0.088] | [−0.014, 0.015] |
| ICC | 0.923 | 0.923 | 0.992 | 0.913 | 0.987 | 0.987 |
| CoV | 2.187 | 3.350 | 2.475 | 4.982 | 2.526 | 2.526 |

4. Discussion

The results of this study showed the high repeatability and reproducibility of the proposed fully automatic method based on deep learning algorithms, which was capable of segmenting and quantifying the choroidal structure and vasculature in SS-OCT images.

In SS-OCT images, the choroidal boundaries were identified accurately owing to good image quality and the high intensity contrast of the choroid-sclera interface. The results in Table 1 indicate that the residual U-Net proposed in this study was highly efficient. For the upper choroidal boundary, 95.68% of images had a difference within 0.08mm between the automatically measured location and the ground truth (4.32% exceeded 0.08mm), while for the lower choroidal boundary 90.57% of images had a difference within 0.08mm (9.43% exceeded 0.08mm). This indicates that the proposed residual U-Net achieved good segmentation performance, closely matching the ground truth. Moreover, the Bland–Altman plots and the ICC results, both for manual versus automatic segmentation and for repeated automatic segmentation, showed the outstanding reproducibility of the deep learning algorithm.

Accurate segmentation of the choroid is important for investigating choroid-related diseases. Some manual and semi-automatic methods can segment the choroid accurately,8,12,22,23 but they are not suitable for clinical application. A fully automatic segmentation method is therefore a more suitable and promising approach. For instance, Zhou et al.24 proposed an attenuation correction method to improve the segmentation of choroidal boundaries, whereas Zhang et al.25 proposed a soft-constraint graph search with a shape model to help segment the choroidal boundaries. Other methods, such as multi-scale adaptive thresholding and a level set method based on the choroidal boundaries, have been proposed to segment choroidal vessels.26,27,28 Recently, owing to their outstanding ability to recognize image features hierarchically, deep learning methods have become a strong alternative for image segmentation. For example, Masood et al.29 segmented the choroidal layer with convolutional neural networks, with Bruch's membrane segmented out by morphological operations. However, this patch-based method trained on the patches independently, which may lead to a lack of spatial consistency between adjacent pixels. To overcome these limitations, Sui et al.30 used deep convolutional neural networks for choroidal segmentation, and Liu et al.31 introduced RefineNet, an end-to-end fully convolutional network variant, to help segment choroidal boundaries.

In this study, we proposed a novel method modified from the residual U-Net, which combines the advantages of deep residual learning and U-Net, allowing us to obtain a network with less training but strong performance.14 The proposed residual U-Net, combined with an automated vasculature segmentation algorithm, can segment and quantify the choroidal structure and vasculature automatically, and may be a good choice for providing richer choroidal information. CT is one of the most commonly used parameters for showing changes in the choroid. However, CT is readily affected by multiple factors such as age, axial length, and intraocular pressure. In contrast, other parameters such as CVI, CSI, LA, SA, and TCA are regarded as more robust indexes that exhibit lower variability than CT.15,32 In this study, the choroidal parameters obtained by the automatic and manual methods in the 6mm×6mm macula-centered images were comparable, except in some subregions for LA, TCA, and CT. Nevertheless, other researchers have found that the differences in these parameters are much larger in most choroid-related ocular diseases.32,33,34,35 For example, Agrawal et al.32 found that TCA and LA in patients with central serous chorioretinopathy differed from controls by 0.08mm² and 0.14mm², respectively. Demirel et al.33 similarly found that LA and SA in patients with central serous chorioretinopathy differed from controls by 0.14mm² and 0.05mm², respectively. For patients with branch retinal vein occlusion, Coban-Karatas et al.34 and Kim et al.35 reported CT differences of 0.016mm and 0.065mm, respectively, compared with controls. Moreover, Querques et al.36 and Chen et al.8 reported CT differences between controls and patients with diabetic retinopathy that were much larger than 0.007mm, even in diabetic patients without apparent retinopathy. In brief, the accuracy of the fully automatic segmentation is sufficient for identifying changes caused by choroid-related ocular diseases, suggesting high value for clinical practice.

There are some limitations in this study. First, the sample size used for the deep learning algorithm was relatively small; a larger sample is needed to further verify the repeatability and reproducibility of this novel automatic method. Second, the subjects in this study were healthy and relatively young adults; evaluation in elderly subjects and in patients with disease will be the subject of future research.

In summary, the proposed automatic method based on the residual U-Net enabled accurate automatic segmentation of the choroid and performed well in segmenting and quantifying the choroid. The application of the deep learning algorithm to choroid segmentation may therefore provide a powerful tool for measuring and quantifying CT and vasculature, supporting further studies of choroid-related ocular diseases.

Conflict of Interest

The authors declare that there are no conflicts of interest relevant to this article.

Acknowledgments

This study was supported by the National Key Project of Research and Development Program of Zhejiang Province (Grant No. 2019C03045), Wenzhou Municipal Science and Technology Bureau (Grant No. 2018ZY016), and the National Natural Science Foundation of China (61675134). Gu Zheng and Yanfeng Jiang are co-first authors.

Authors’ Contributions

Study design and supervision (FL, MS); data collection (GZ, YJ, SC); establishment of neural network (XY, CS); analysis and interpretation of data (GZ, YJ, CS, HM, YW, SC, ZL, WW); paper preparation and review (GZ, MS). All the authors have read and approved the final paper.

Ethics Approval and Consent to Participate

This study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee Board of the Wenzhou Medical University (KYK 2017 [41]). All subjects recruited were informed about the study and signed a written informed consent.

Availability of Data and Materials

Available from the corresponding author on reasonable request.