Artificial intelligence-assisted light control and computational imaging through scattering media

Abstract

Coherent optical control within or through scattering media via wavefront shaping has seen broad applications since its invention around 2007. Wavefront shaping aims to overcome strong scattering, which manifests as random interference, namely speckle patterns. This randomness arises from the refractive index inhomogeneity in complex media such as biological tissue or from the modal dispersion in multimode fiber, yet it is actually deterministic and can potentially be time-reversed or precompensated. Various wavefront shaping approaches, such as optical phase conjugation, iterative optimization, and transmission matrix measurement, have been developed to generate tight and intense optical delivery or high-resolution images of an optical object behind or within a scattering medium. The performance of these modulations, however, is far from satisfactory. Most recently, artificial intelligence has brought new inspiration to this field, offering hope of tackling these challenges by mapping the input and output optical patterns and building a neural network that inherently links them. In this paper, we survey the developments to date on this topic and briefly discuss our views on how to harness machine learning (deep learning in particular) for further advancements in the field.

1. Introduction

When coherent light propagates within complex media, the optical wavefront is distorted either by multiple scattering due to an inhomogeneous refractive index profile or by modal dispersion in multimode fiber (MMF). The strong distortion leads to random interference with randomly distributed bright and dark spots, namely optical speckle patterns. Such a process of light propagation, albeit seemingly random, is actually deterministic within a certain temporal window1,2 and can be analytically described by a transmission matrix.3 This makes it feasible to probe the complex medium by shaping the wavefront incident on it, so that the medium can be learned from the induced speckle patterns. The information from the probed speckle patterns can be used for wavefront control and for imaging objects through the medium.

Optical wavefront shaping (WFS)1,2,4,5,6 is developed to compensate for the scattering-induced phase distortions, where a spatial light modulator (SLM) with discrete elements is typically employed to resolve the continuous wavefront.7 By controlling millions of modes to shape the incident light, not only diffraction-limited optical focusing2,4,8,9 but also arbitrary intensity profile (e.g., images) transmission10,11,12 can be achieved through or within highly scattering media. Depending on how we optimize the wavefront displayed on the SLM, the current implementations can be categorized into two groups: time-reversed WFS and iterative WFS.13 The former is generally enabled through optical phase conjugation (OPC),14,15,16,17,18,19,20 where the distorted wavefront is directly measured by an interferometry configuration. A phase-conjugated copy of the wavefront distortion is then generated to time-reverse the light propagation and form a focus inside or through the complex medium. This process can be extremely fast, thus is suitable for real-time or even in vivo applications.21,22,23 In the latter category, the best modulation is achieved by iterative optimization5,6,24,25 or transmission matrix (TM) measurement.3,12,26 In iterative optimization, an algorithm is used to update the SLM pattern so that the feedback signal is enlarged step by step.24,28 In the TM method, a linear transformation of a static medium is analyzed to determine how the incident wavefronts are discretely weighted to the outputs.3 The static assumption allows a set of incident wavefronts of the orthogonal basis to probe the response from the medium (i.e., the output wavefronts). 
Note that the performance of modulation (e.g., focusing) obtained in this way is somewhat limited, whereas the iterative method modifies the wavefront in real time.29 Nevertheless, the TM physically describes the interaction between the medium and the random optical process, from which features such as polarization,29 spectrum,30,31 and eigenchannels32,33,34,35,36 of the transmission can be extracted. Furthermore, the measured TM can be used to derive the desired modulation for raster scanning37 and image transmission.38 Such capabilities, however, depend strongly on the stability of the medium; small perturbations can result in a new matrix.4,39 Besides, it is assumed that the optical processes are linear and can be modeled with a single matrix, an assumption that does not hold well in noisy environments. Lastly, the performance and complexity of both iterative optimization and TM measurement increase in proportion to the number of resolved modulating elements $N$, leading to a time-consuming optimization procedure when $N$ is up to millions for high-quality control.2,4
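As an illustration of the iterative category described above, the following is a minimal sketch of a stepwise sequential optimization, assuming a simulated medium rather than a real experiment. The medium is modeled as one row of a random complex transmission matrix, and the names `t_row`, `target_intensity`, `n_modes`, and `phase_steps` are hypothetical choices made for this example only.

```python
import numpy as np

rng = np.random.default_rng(0)
n_modes = 64          # number of SLM segments (hypothetical, kept small)

# Hypothetical medium: one row of a random complex transmission matrix,
# mapping the SLM segments to a single target speckle grain.
t_row = (rng.normal(size=n_modes) + 1j * rng.normal(size=n_modes)) / np.sqrt(2 * n_modes)

def target_intensity(phases):
    """Feedback signal: intensity at the target for a phase-only SLM pattern."""
    field = np.sum(t_row * np.exp(1j * phases))
    return np.abs(field) ** 2

phase_steps = np.linspace(0, 2 * np.pi, 8, endpoint=False)
phases = np.zeros(n_modes)
initial = target_intensity(phases)

# Stepwise sequential optimization: tune one segment at a time and keep
# the phase value that maximizes the feedback signal.
for k in range(n_modes):
    best_phase, best_val = phases[k], target_intensity(phases)
    for p in phase_steps:
        trial = phases.copy()
        trial[k] = p
        val = target_intensity(trial)
        if val > best_val:
            best_phase, best_val = p, val
    phases[k] = best_phase

final = target_intensity(phases)
print(f"intensity before: {initial:.4f}, after: {final:.4f}")
```

Each segment requires several feedback measurements, so the number of measurements in this sketch grows linearly with the number of segments, which is why such procedures become slow when the number of controlled modes is large.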

Adaptive optics (AO) is a twin of WFS that shares similar goals and challenges, although it works in regimes of weaker scattering where a residual but distorted optical focus exists. AO was initially invented to correct the optical wavefront distortion induced by the turbulent atmosphere in astronomical observation.40 Recently, this technique has been adopted in biomedicine,41 mainly in microscopic42,43 and retinal imaging,44,45 to compensate for aberrations induced by the specimen or the human eye. Conceptually, an AO system includes a wavefront sensor for measuring the distorted wavefront directly and a dynamic optical element (e.g., deformable mirror, DM46) in a feedback loop to correct the distortion.

Another topic of interest is to image or computationally recover hidden objects from the speckle patterns. In this application, the TM of the scattering medium is usually measured in advance,3,12 and then inverted to focus light47,48,49 or descramble transmitted images12,50 through a complex medium, such as diffusers3,49 or MMFs.48,50,51 It should be noted, however, that the TM approach is usually complex and susceptible to perturbations. There are also other phase retrieval approaches, such as holography,52 the transport of intensity equation,53 and iterative algorithms (e.g., the Gerchberg–Saxton–Fienup algorithm).54,55

While promising, the performance of wavefront control and computational imaging has thus far been limited, mainly because a large number of independent modulating elements are usually needed and the modulation process is time-consuming. This is especially critical when living biological tissues are involved, where physiological motions, such as breathing, heartbeat, and blood flow, result in optical speckle patterns that decorrelate fast.2,4 In the past few years, rapidly developing artificial intelligence (AI) techniques, dominated by the machine learning subfield,56 have been introduced to this field and brought new hope, with deep learning as a major workhorse. For example, deep learning can help build a computational architecture that serves as a generic function approximating the light propagation process across a complex medium. That is, the transformation from the input wavefronts to the output speckle patterns can be learned and linked through a complex yet data-driven neural network that is implicit with respect to the actual physical states. This opens up possibilities to directly determine the optimum wavefront compensation for any desired output pattern,28,57,58 or to reconstruct objects from the recorded speckles, namely computational imaging.59,60,61

In this paper, we survey these recent developments of AI-assisted light control and computational imaging through scattering media, and the rest of this article is organized as follows: In Sec. 2, we discuss how AI techniques can be utilized to assist coherent light wavefront control within or through complex media of different degrees of scattering; In Sec. 3, we discuss how AI can assist AO bioimaging, computational imaging reconstruction, as well as MMF-based image transmission; In Sec. 4, we briefly summarize the developments thus far, compare different networks employed for wavefront control and image recovery, and outline existing challenges and potentials for further advancement in this exciting field.

2. AI for Coherent Optical Wavefront Control through Scattering Media

2.1. Integration of AI with WFS

In the transmission matrix model, $E_{\mathrm{in}}$ and $E_{\mathrm{out}}$ usually denote the input and output complex fields, and they are linked by $E_{\mathrm{out}} = T E_{\mathrm{in}}$, where $T$ is the TM of the complex medium. Since regular photodetectors can only detect the intensity of light, the phase information is lost. Therefore, phase-shifting3,37,62,63 or interferometric47 methods have been used to measure the TM. In machine learning, the input–output transformation is extended and generalized; the propagation of light in scattering media, albeit complex, is mathematically simplified as $y = F(x)$, where $F$ represents the forward propagation operator. The inverse scattering propagation can thus be expressed by $x = F^{-1}(y)$, with $F^{-1}$ indicating the inverse operator. Unlike in the TM method, the output $y$ here can be directly defined as the intensity profile of the output wavefront, and the transformation can be generalized by learning $F^{-1}$. Note that nonlinear effects associated with the propagation process can be included here, and by training on input–output pairs, the forward and inverse operators of the medium can be statistically modeled, which determines the relationship between $x$ and $y$. Now, let us consider how machine learning functions in optical wavefront control. In this context, the desired or target output profile $y_{\mathrm{target}}$ is given or known. Thus, the optimized input $x_{\mathrm{opt}}$ can be determined from the learned $F^{-1}$ and the target through $x_{\mathrm{opt}} = F^{-1}(y_{\mathrm{target}})$. By doing so, when $x_{\mathrm{opt}}$ is displayed on the SLM, a desired output identical or similar to $y_{\mathrm{target}}$ will be produced at the targeted position.
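The idea of learning an inverse operator from input–output pairs and then using it to predict the input for a target output can be sketched in a few lines. This is a toy illustration only: it assumes a purely linear, real-valued forward operator with small additive noise (far simpler than a real scattering experiment with intensity detection), and it fits the inverse map by ordinary least squares rather than by any of the networks discussed in this review. The names `A`, `forward`, `W`, `x_pred`, and all sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n_in, n_out, n_train = 16, 32, 500   # hypothetical sizes

# Hypothetical forward operator: a fixed random real matrix plus small noise.
A = rng.normal(size=(n_out, n_in))

def forward(x):
    """Simulated medium: y = A x + noise (stand-in for the real experiment)."""
    return A @ x + 0.01 * rng.normal(size=n_out)

# Training pairs (x_i, y_i) gathered by probing the medium with random inputs.
X = rng.normal(size=(n_train, n_in))
Y = np.stack([forward(x) for x in X])

# Learn the inverse operator as a linear map W such that x is close to y @ W,
# fitted by least squares on the training pairs.
W, *_ = np.linalg.lstsq(Y, X, rcond=None)

# Wavefront control: pick a reachable target, predict the input, replay it.
x_true = rng.normal(size=n_in)
y_target = A @ x_true
x_pred = y_target @ W
y_produced = forward(x_pred)

rel_err = np.linalg.norm(y_produced - y_target) / np.linalg.norm(y_target)
print(f"relative error of produced output: {rel_err:.3f}")
```

In this sketch the medium is never characterized explicitly; only the training pairs are used, which mirrors the data-driven viewpoint of the text. A nonlinear regressor would take the place of `W` when the linear assumption fails.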

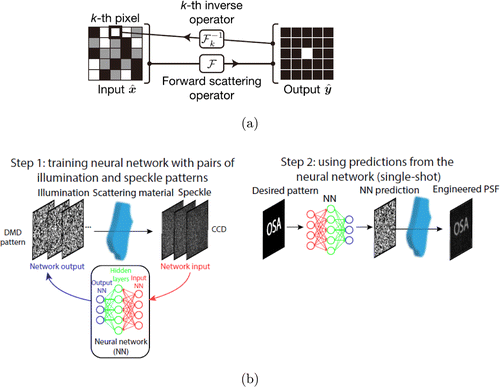

This idea was first demonstrated by Horisaki et al. in 2017,28 who achieved single-shot light focusing through a scattering medium by utilizing the trained inverse scattering operator (Fig. 1(a)). Based on a number of training pairs of input and output patterns, they used Support Vector Regression (SVR) to calculate the pixel-wise inverse scattering operator. The input pattern was stepwise optimized with the trained inverse scattering operator, and the target output pattern, which could be a single focus or multiple foci behind the complex medium, was successfully reproduced. However, the study has limitations in terms of field of view, signal-to-noise ratio, and training duration (97 min).

Shortly afterwards, Turpin et al.57 proposed a two-step neural network (NN) approach for light scattering control (Fig. 1(b)). First, pairs of illumination patterns displayed on a digital micromirror device (DMD) and the corresponding speckle patterns recorded with a CCD camera were generated and used to train an NN, with the goal of inferring the relationship between the pairs. Second, the desired or arbitrary target pattern was fed into the trained network to generate a prediction (of the input modulation), which was then displayed on the DMD to produce the desired pattern through the scattering material. Good agreement was achieved between the desired target pattern fed into the network and the final produced pattern, indicating that the trained network essentially functions as the inverse transmission matrix of the medium.

In their research, the authors demonstrated that NNs can be used to efficiently shape light through various scattering media. Single-layer NNs (SLNNs) were shown to exploit the linearity of light scattering to focus through several scattering media or even generate arbitrary desired light distributions through a glass diffuser. Although easy to implement and train, SLNNs are still constrained by the underlying linearity. The authors then employed a three-layer convolutional neural network (CNN) for light scattering control. The CNN can efficiently lower the number of parameters required for training, which is advantageous for nonlinear situations. As seen, the integration of deep learning and WFS enables coherent light control in transmission through scattering media.

Fig. 1. Illustration of AI-assisted coherent light control through scattering media. (a) Light focusing through a scattering medium assisted by machine learning. The input pattern was stepwise optimized with the trained inverse scattering operator, and the target output pattern can be reproduced through the scattering medium. (b) Two-step NN-assisted WFS for controllable light delivery through scattering media. The illumination and the corresponding speckle patterns are formed into pairs to train the NN, with the speckle patterns as the input and the DMD patterns as the output. The trained network is then used to predict a DMD pattern that can generate a desired pattern after the medium. The prediction is subsequently displayed on the DMD, resulting in a pattern identical or very similar to the desired one through the scattering medium. Reproduced from Refs. 28 and 57.

2.2. Integration of AI with AO

If the medium is not very thick or diffusive, fewer scattering events are involved, and a residual optical focus, rather than random speckle patterns, can be observed at the region of interest. Optical wavefront control in such a scenario is usually referred to as AO rather than WFS. AO typically works in an imaging system to improve imaging quality through wavefront modulation. AI techniques have been applied in AO systems for aberration measurement and wavefront reconstruction in astronomical observation.

Artificial neural networks (ANNs) have been applied in this field to estimate the wavefront distortions in AO systems.64,65,66 These works, however, will not be discussed here; instead, we focus on recent developments related to direct wavefront control. In AO implementations, the distorted wavefronts are typically measured by a Shack–Hartmann (SH) wavefront sensor, with which the slopes at each SH lenslet are recovered. The recovered slopes are then applied to the DM via a command matrix to impose phase correction on the incoming light. Such implementations, however, are inherently limited by the number of lenslets in the system and prone to certain error modes.67 The concepts of AI, especially ANN, have not been used for AO for a long time,68,69,70 but this area has recently revived, bringing new hope of overcoming the aforementioned limitations. For example, in 2014 Osborn et al.71 reported a multi-layer perceptron with a single hidden layer to infer the Zernike polynomials from the SH slopes for wavefront reconstruction; in 2018 Swanson et al.67 employed a U-Net architecture to learn a mapping from the SH slopes to the true wavefront slope data, based on which the latent atmospheric wavefront was reconstructed, and a convolutional LSTM network was employed to predict future wavefronts from only five previous data samples; also in 2018, Suarez et al.72 proposed a CNN-based reconstructor relying on the full measurement images of the SH wavefront sensor; in 2019 Ma et al.73 used a CNN to extract features from the two intensity images of wavefront distortions and obtained the corresponding Zernike coefficients, based on which satisfactory AO compensation was achieved.
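For context, the conventional mapping from SH slopes to Zernike coefficients, which the learned reconstructors above aim to improve upon, can be sketched as a linear least-squares fit. This is not the method of any cited work; it is a minimal baseline under stated assumptions: a hypothetical 8 × 8 lenslet grid, three unnormalized low-order modes (x-tilt, y-tilt, defocus), and simulated noisy slopes. The names `G`, `c_true`, and `c_est` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical lenslet centres on a regular grid inside the unit pupil.
g = np.linspace(-0.9, 0.9, 8)
xx, yy = np.meshgrid(g, g)
inside = xx**2 + yy**2 <= 1.0
x, y = xx[inside], yy[inside]

# Analytic x- and y-derivatives of three low-order (unnormalized) modes:
# x-tilt Z = x, y-tilt Z = y, defocus Z = 2(x^2 + y^2) - 1.
dZdx = np.stack([np.ones_like(x), np.zeros_like(x), 4 * x], axis=1)
dZdy = np.stack([np.zeros_like(y), np.ones_like(y), 4 * y], axis=1)
G = np.vstack([dZdx, dZdy])          # slope response matrix, shape (2M, 3)

# Simulated measurement: slopes produced by known coefficients plus noise.
c_true = np.array([0.3, -0.2, 0.5])
slopes = G @ c_true + 0.01 * rng.normal(size=G.shape[0])

# Least-squares modal reconstruction of the coefficients from the slopes.
c_est, *_ = np.linalg.lstsq(G, slopes, rcond=None)
print("true:", c_true, "estimated:", np.round(c_est, 3))
```

The number of modes that can be fitted reliably in this way is bounded by the number of lenslets, which is one of the limitations noted in the text.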

To the best of our knowledge, wavefront reconstruction algorithms in current astronomical AO imaging systems have been dominated by the Zernike polynomial model.74,75 Deep learning has thus been utilized mainly to infer Zernike coefficients for wavefront correction, or to map the measured SH slope maps to the true wavefront data. Since there has been no systematic study on the integration of AI and AO bioimaging to date, more effort on deep learning techniques to boost AO bioimaging is highly desired.

3. AI for Microscopy and Computational Imaging Through Scattering Media

AI techniques can be employed for image post-processing to deal with residual aberrations that degrade imaging quality in AO bioimaging system, and by extension, in general microscopic system. Here, we also report recent advances about AI techniques in imaging through complex scattering media.

3.1. AI-assisted AO bioimaging post-processing

AO has been introduced to bioimaging41 (mainly optical microscopy42,44 and retinal imaging44,45) from the field of astronomy, providing correction for aberrations induced by the specimens and the imaging system. The value of AO microscopy can be seen in many applications where focusing deep into specimens is essential. In neuroscience, for example, adaptive correction in two-photon microscopy76,77 and confocal microscopy78 deep in mouse brain tissue has been demonstrated with enhanced performance. Another example is the compensation for ocular aberrations79 to achieve near diffraction-limited retinal imaging in modalities such as confocal scanning ophthalmoscopy and optical coherence tomography.

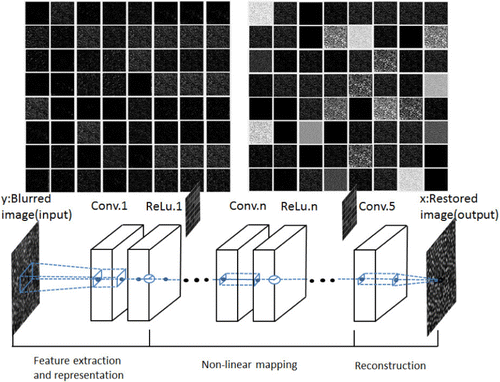

With imperfect wavefront correction, residual aberrations inevitably exist in AO bioimaging and degrade the imaging quality,80 making appropriate image post-processing indispensable. This can be achieved with image restoration techniques, such as nonblind deconvolution based on PSF measurement81 or more generalized blind deconvolution algorithms,82,83 which allow simultaneous recovery of the blurred images and the PSF distributions. Many machine learning-based approaches have also been developed. For example, to deblur AO retinal images, a method combining deconvolution with Random Forest was proposed in 2017 to learn the mapping of retinal images onto the space of blur kernels.84 The reconstruction performance of this method, however, is limited because it depends on a system-specific nonblind deconvolution algorithm. More recently, deep learning has been proposed to restore degraded AO retinal images80 (illustrated in Fig. 2). In this method, a CNN was trained on a synthetically generated dataset of 500,000 image pairs; 100 ideal retinal images were first created, and then 5000 PSFs were generated for each of them to produce the training dataset. Note that this method does not need to predict the PSF of the imaging system and directly learns an end-to-end mapping between the blurred and restored images. With both synthetic and real images, the trained CNN was validated to effectively restore clean and sharp retinal images from corrupted ones.
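To make the nonblind deconvolution baseline mentioned above concrete, here is a minimal sketch of Wiener deconvolution with a known PSF. It is an illustration under assumptions, not the procedure of any cited work: the object is synthetic, the PSF is an assumed Gaussian, convolution is circular (FFT-based), and the constant `K` is a hand-picked noise-to-signal term.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 64

# Hypothetical test object: a few bright rectangles on a dark background.
obj = np.zeros((n, n))
obj[10:20, 12:22] = 1.0
obj[35:50, 30:38] = 0.6
obj[25:30, 45:60] = 0.8

# Assumed known PSF: an isotropic Gaussian, centred at pixel (0, 0) so that
# FFT-based (circular) convolution introduces no shift.
coords = np.fft.fftfreq(n) * n            # 0, 1, ..., -2, -1
rr2 = coords[:, None] ** 2 + coords[None, :] ** 2
psf = np.exp(-rr2 / (2 * 2.0**2))
psf /= psf.sum()

H = np.fft.fft2(psf)
blurred = np.real(np.fft.ifft2(np.fft.fft2(obj) * H))
blurred += 1e-3 * rng.normal(size=obj.shape)

# Wiener filter with a constant noise-to-signal term K (a tuning assumption).
K = 1e-3
wiener = np.conj(H) / (np.abs(H) ** 2 + K)
restored = np.real(np.fft.ifft2(np.fft.fft2(blurred) * wiener))

err_blurred = np.sqrt(np.mean((blurred - obj) ** 2))
err_restored = np.sqrt(np.mean((restored - obj) ** 2))
print(f"RMSE blurred: {err_blurred:.4f}, restored: {err_restored:.4f}")
```

The filter needs the PSF explicitly, which is exactly the requirement that end-to-end learned restoration avoids.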

Fig. 2. The schematic diagram of the CNN used for AO retinal image deblurring. The network comprises five layers that combine convolutions with element-wise rectified linear units (ReLU). A blurred image goes through the layers and is transformed into a restored one, and some sample feature maps are drawn for visualization. Reproduced from Ref. 80.

In addition to AO bioimaging, deep learning has also been widely applied to other microscopy tasks in biomedicine, such as resolution improvement,85,86,87,88,89 depth-of-field extension,88,90 and aberration correction.66,91,92 On this topic, researchers from UCLA first proposed in 2017 a deep learning-based microscopy approach that works with a single image captured using a standard optical microscope.88 They trained a CNN framework by providing low-resolution “input” images (lung tissue) and high-resolution “training labels”. Once training is complete, for any low-resolution input image, a higher-resolution version with improved field of view and depth of field can be quickly (0.69 s/image) generated by the network. Furthermore, they reported that with appropriate training, the entire framework (and derivatives thereof) might be effectively applied to other optical microscopy and imaging modalities, and even assist in designing computational imagers. We expect these techniques to provide inspiration and open up new avenues for learning-based aberration correction in AO microscopy.

3.2. AI-assisted computational imaging through scattering media

Imaging through complex scattering media is a pervasive challenge in many scenarios, of which deep-tissue optical imaging93 is of particular interest. Optical scattering scrambles the wavefront phases and impedes the delivery of information, so that no clear image of the object is produced or observed, only random speckles emerging from the complex media. To address this challenge, approaches based on digital holography,94,95,96 WFS,3,6,95 and the memory effect97,98 have been developed, although complex optical setups are usually required. As for computational imaging in particular, the TM-based method has been exploited widely to descramble distorted images.3,12 However, as discussed earlier, the characterization of the “one-to-one” input–output relationship is experimentally and computationally complex and is, furthermore, susceptible to external perturbations. Moreover, the TM method inherently assumes that the optical propagation and interaction with the medium are linear and can thus be mathematically modeled as a single matrix. Such assumptions, however, are probably invalid under noisy or inadequate experimental conditions.28

In this part, we report recent developments in learning-assisted computational imaging through scattering media, which has proven to be simpler and more robust. The key to such an inverse scattering process is to recover the target objects from the recorded speckles by “learning” to approximate solutions to the inverse problems in computational imaging. Let us denote $f$ as the unknown target object, $g$ as the recorded speckle pattern, and $H$ as the forward imaging operator. With an additional regularization term $\Phi(f)$, we can model the inverse problem through the Tikhonov–Wiener optimization function: $\hat{f} = \arg\min_{f} \left\{ \lVert Hf - g \rVert^{2} + \alpha \Phi(f) \right\}$, where $\alpha$ is the regularization parameter, and $\hat{f}$ is the estimate of the target.60 To solve this ill-posed reconstruction problem, $H$ and $\Phi$ must be known explicitly or parametrically. Although there are several approaches99,100,101 to determine these prior representations, the process is often complicated and prone to errors. Recent years have seen AI techniques applied to this inverse problem by learning these operators implicitly through training on target examples. In 2016, Horisaki et al.102 first demonstrated such an idea by proposing an SVR method to learn the scattering operator, where light is scattered by three sequential scattering plates and modulated by two SLMs. After the operator is learned, faces imaged through random media can be reconstructed from the speckle patterns. The authors also showed that the SVR generalizes well. Such a machine learning-based approach to computational imaging through scattering media has had a positive impact on related works, especially the successful introduction of deep learning-based techniques.
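When the forward operator is known and the regularizer is chosen as the squared norm of the object, the regularized inverse problem above has a closed-form solution via the normal equations. The sketch below illustrates this special case only. The notation (object `f`, measurement `g`, forward operator `H`, regularization weight `alpha`) and the random test problem are assumptions made for this example, and the quadratic regularizer is a simplification rather than the prior used in any cited work.

```python
import numpy as np

rng = np.random.default_rng(4)
n_obj, n_meas = 20, 30                # hypothetical problem sizes

H = rng.normal(size=(n_meas, n_obj))  # assumed known forward operator
f_true = rng.normal(size=n_obj)
g = H @ f_true + 0.05 * rng.normal(size=n_meas)

def tikhonov(H, g, alpha):
    """Minimize ||H f - g||^2 + alpha * ||f||^2 via the normal equations."""
    n = H.shape[1]
    return np.linalg.solve(H.T @ H + alpha * np.eye(n), H.T @ g)

f_hat = tikhonov(H, g, alpha=0.1)
rel_err = np.linalg.norm(f_hat - f_true) / np.linalg.norm(f_true)
print("relative error:", rel_err)
```

The solver needs `H` explicitly. The learning-based approaches discussed in this section replace both the operator and the prior with a mapping fitted from example pairs.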

A deep neural network (DNN) can be trained to learn $H$ and $\Phi$ implicitly and to act as the inverse operator that recovers the target object from a recorded speckle, described mathematically as $\hat{f} = \mathrm{DNN}(g)$. This learning approach requires no prior knowledge and is expected to be a more robust approximator to nonlinear operators, but a large training dataset of known image pairs of the target objects and their corresponding speckle patterns must be available. In 2017, Lyu et al.60 used a DNN model with two reshaping layers, four hidden layers, and one output layer to retrieve handwritten digit images from the Mixed National Institute of Standards and Technology (MNIST) database behind a 3 mm thick white polystyrene slab. In this method, objects located on one side of the scattering medium were mapped to the corresponding speckle patterns observed on the other side, a simple scheme that opens up avenues to explore optical imaging through more general complex systems. Also in 2017, Sinha et al.61 experimentally built and tested a lensless imaging system, where a “U-net”-based DNN was trained to recover phase objects created by an SLM; the corresponding intensity diffraction patterns were used as the input. Note that the network learned a model of the underlying physics of the imaging system. Even though it was trained exclusively on images from the ImageNet database, it was still able to accurately reconstruct images of completely different classes from the Faces-LFW database. In 2018, the same group further proposed a network architecture called IDiffNet, a U-net with densely connected CNN blocks, and introduced the negative Pearson correlation coefficient (NPCC) as the loss function for network training.59 The IDiffNets trained on different databases all seem to learn automatically the physical characteristics of the scattering media, including the degree of shift invariance and the priors restricting the objects. 
However, the type of object that can be reconstructed is restricted to the types of objects (e.g., face, scene, or digit) in the training dataset. This U-net based architecture was shown to achieve a higher space-bandwidth product of reconstructed images than previously reported, and to exhibit robustness toward the choice of priors. In addition, the team implemented IDiffNets to achieve phase retrieval in a wide-field microscope shortly afterwards,103 where the network was trained using data generated by a transmissive SLM and tested with images captured from a microscope. The reconstruction results of a phase target showed the value of IDiffNets for building a quantitative phase microscope.
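The negative Pearson correlation coefficient mentioned above can be written compactly. The following is a minimal NumPy sketch of the quantity itself, not the training code of the cited work; the function name `npcc` and the small stabilizing constant `eps` are choices made for this example.

```python
import numpy as np

def npcc(pred, truth, eps=1e-12):
    """Negative Pearson correlation coefficient between two images."""
    p = pred - pred.mean()
    t = truth - truth.mean()
    return -np.sum(p * t) / (np.sqrt(np.sum(p**2) * np.sum(t**2)) + eps)

rng = np.random.default_rng(5)
img = rng.random((32, 32))

print(npcc(img, img))              # close to -1: perfect correlation
print(npcc(2 * img + 3, img))      # also close to -1: invariant to gain/offset
print(npcc(1 - img, img))          # close to +1: perfect anti-correlation
```

Because the value is unchanged when the prediction is rescaled or shifted by a constant, minimizing it rewards structural agreement with the ground truth rather than matching absolute intensity levels.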

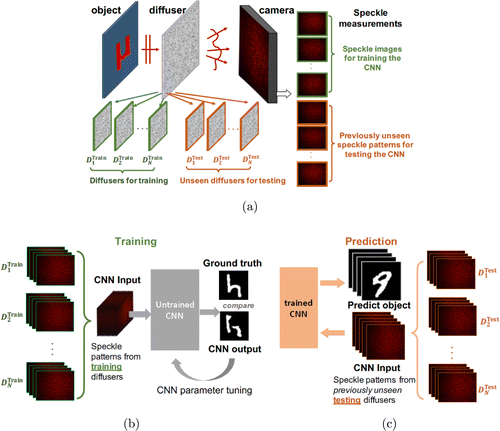

To address the susceptibility to speckle decorrelation in the deterministic TM method (one-to-one mapping), in 2018, Li et al.39 proposed a “one-to-all” deep learning scheme (see Fig. 3), where a CNN, similar to the model used in Ref. 60, was built to learn the statistical information about the speckle intensity patterns measured on a series of diffusers with different microstructures yet the same macroscopic parameters. The trained CNN can “invert” speckles captured from different diffusers to the corresponding target object accurately. This can significantly improve the scalability of imaging by training one model to fit various scattering media of the same class.

Fig. 3. Diagram of deep learning-based scalable imaging through scattering media. (a) Speckle measurements are repeated on multiple diffusers. (b) During the training stage, only speckle patterns collected through the training diffusers are used. (c) During the testing stage, objects are predicted from speckle patterns collected through previously unseen testing diffusers, demonstrating the superior scalability of this deep learning-based approach. Reproduced from Ref. 39.

3.3. AI-assisted MMF-based image transmission

A typical MMF can support tens of thousands of optical modes and is capable of delivering spatial information. However, MMFs behave like scattering media due to modal dispersion, resulting in seemingly random speckle patterns when coherent light is used. Researchers began exploring the feasibility of image transmission across MMFs as early as 1967.104 The development of optical WFS has opened a new era. In recent years, methods based on phase conjugation,11,105 iterative optimization,10,106 and transmission matrix measurement48,50 have been proposed to compensate for the effects of modal dispersion. They either focus light at the distal end of the fiber or transmit desired images through the MMF. Although WFS techniques are indeed indispensable for controlling coherent light propagation across MMFs, some challenges remain with these studies, as discussed earlier.

Being simpler and more robust, deep learning techniques have been introduced for imaging or image transmission through MMFs. Here, we report some recent examples that recover object images from the intensity measurements collected at the output side of MMFs, transforming MMFs into useful imaging fibers, which is especially promising for endoscopic applications with ultra-slim fibers.50 In practice, most learning-based methods train a neural network with the resultant speckle patterns as the network inputs and the input images to the fiber as the training labels, in order to classify or reconstruct the input image from a newly provided speckle pattern. There are early demonstrations107,108 of using two-layer networks to learn and recognize a few images across a 10 m long step-index fiber. In 2017, Takagi et al.109 demonstrated binary classification of face and nonface targets based on their corresponding speckle patterns transmitted through an MMF. Three supervised learning methods (support vector machine, adaptive boosting, and neural network) were used, and all of them achieved high classification accuracy rates of 90%. Later on, Rahmani et al.110 used both VGG-net and Res-net to perform a nonlinear inversion mapping between the amplitude of the speckle patterns and the phase or the amplitude at the input of a 0.75 m long MMF. The authors showed that the networks can be trained to reconstruct images that belong to another class not used for training/testing. Borhani et al.111 trained a “U-net” to reconstruct the SLM input images from the recorded speckle (intensity) patterns from the MMF, and a VGG to classify the distal speckle images and reconstructed SLM input images. The authors further showed that such capability was observed for MMFs of up to 1 km in length by training the DNNs with a database of 16,000 handwritten digits. 
Most recently, Fan et al.112 showed that a CNN can be trained under multiple MMF transmission states to accurately predict the input patterns at the proximal side of the MMF at any of these states, exhibiting a significant generalization capacity for different MMF states. The aforementioned recent studies are summarized in Table 1.

| Reference | Network structure | MMF states | Database | Features |
|---|---|---|---|---|
| Takagi et al. (2017)109 | One hidden layer | Static situation | Caltech computer vision database | Object classification |
| Rahmani et al. (2018)110 | VGG-net, Res-net | 0.75 m long | Handwritten Latin alphabets | ① Amplitude-to-amplitude and amplitude-to-phase mapping ② Transfer learning |
| Borhani et al. (2018)111 | VGG, U-net | 0.1 m, 10 m and 1000 m | Handwritten digits | ① Classifier and reconstruction ② Large dataset for robustness |
| Fan et al. (2018)112 | CNN | Dynamic shape variations | MNIST database | ① Generalization capability ② Robustness to variability and randomness of MMF |

4. Discussion

In this paper, we have reviewed recent progress on the applications of AI to assist WFS and AO to achieve controllable light delivery, imaging, and image recovery through scattering media. Most of these studies rely on machine learning-based approaches, deep learning in particular, and have enabled powerful and robust performance. In terms of different NNs deployed for various applications in wavefront control and image recovery, we here make a brief comparison and reveal the key points in network model design.

Deep learning for different WFS applications often requires a careful search for the optimal architecture to match the data complexity and specific task demands. First, for coherent light control through scattering media, simple network architectures are usually sufficient. In Ref. 57, SLNNs proved adequate for cases where light scattering is linear within simple scattering materials. As SLNNs are easy to implement and train, they are suited to shaping light to correct for scattering in media (such as MMFs) with slow dynamics (on the order of a few tens of seconds). For nonlinear situations, simply stacking multiple densely connected NN layers may make the network challenging to train due to the increased number of parameters. Such multi-layer NNs need to be specifically designed (e.g., using a CNN) and trained (usually a larger training set is required compared to SLNNs) to generate the desired type of light distribution through scattering media.

For AO wavefront reconstruction, many recent works, whether inferring Zernike coefficients for wavefront correction73 or mapping SH slopes to true wavefront data,67 have adopted the CNN architecture for its strength in extracting image features. Beyond that, these networks have no particularly distinctive design features. For image restoration in computational imaging (i.e., image enhancement for microscopy or image reconstruction from speckle patterns), the network configurations are usually more complex in order to achieve good results. A notable feature of most current reconstruction networks39,59,61 is the wide use of the “U-net” architecture with skip connections. These skip connections across the encoder–decoder paths pass the high-frequency information learned in the initial layers toward the output reconstructions. What is more, Ref. 61 uses residual blocks113 to ensure that new features are learned, and Refs. 39 and 59 use dense blocks114 to increase the receptive field of the convolution filters and accelerate convergence.
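The role of a skip connection can be illustrated with a toy, weight-free sketch. It is not any of the cited networks: it assumes a one-level encoder-decoder in which the encoder is 2×2 average pooling and the decoder is nearest-neighbour upsampling, and the helper names `downsample` and `upsample` are hypothetical. The test image contains a checkerboard (pure high-frequency content) that the pooled path cannot carry, whereas concatenating the encoder features onto the decoder input keeps it available to later layers.

```python
import numpy as np


def downsample(x):
    """Encoder step (assumed): 2x2 average pooling."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def upsample(x):
    """Decoder step (assumed): nearest-neighbour 2x upsampling."""
    return x.repeat(2, axis=0).repeat(2, axis=1)


# Toy image: checkerboard (high frequency) plus a smooth horizontal ramp (low frequency).
h = w = 8
yy, xx = np.indices((h, w))
image = ((yy + xx) % 2).astype(float) + xx / w

# Path through the bottleneck only: the checkerboard averages out to a constant.
bottleneck_path = upsample(downsample(image))
lost_without_skip = np.abs(image - bottleneck_path).max()

# Skip connection: concatenate encoder features with decoder features along a
# channel axis, so subsequent layers still see the fine detail.
decoder_input = np.stack([bottleneck_path, image], axis=0)

print("max detail lost without skip:", lost_without_skip)
print("decoder input shape with skip:", decoder_input.shape)
```

In a real U-net both paths carry learned convolutional features rather than raw pixels, but the mechanism is the same: the concatenated channel bypasses the resolution bottleneck.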

To help advance this integrative field with AI techniques, we also offer some views here, including existing challenges and potential directions, for further discussion. First, more advanced statistical machine learning methods, especially deep learning models, are highly desired. For AI-assisted WFS-based light control, take Ref. 28 as an example: SVR and the kernel method were chosen to calculate the pixel-wise inverse scattering operator. Note that such a statistical process is quite time-consuming (97 min) and not suitable for focusing with a low signal-to-noise ratio. Hence, more effective regression algorithms need to be explored. For computational imaging and microscopy, take Ref. 80 as an example. Apart from modeling a more complex AO retinal imaging process for training the network, more effective regularization techniques for the deep neural network, such as dropout and batch normalization, can improve its performance in deblurring AO retinal images. There are also many examples67,110,111 where popular networks from the field of computer vision are employed to learn from data for wavefront reconstruction, image enhancement, and restoration.
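For readers less familiar with the two regularization techniques named above, here is a minimal NumPy sketch of their training-mode forward passes. It is illustrative only and is not taken from Ref. 80: the function names, the batch of random activations, and the dropout rate of 0.5 are all assumptions, and the batch normalization shown omits the running statistics used at inference time.

```python
import numpy as np


def batch_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each feature over the batch axis, then scale and shift."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


rng = np.random.default_rng(0)
# Hypothetical activations: batch of 64 samples with 16 features each.
activations = rng.normal(loc=3.0, scale=2.0, size=(64, 16))

normalized = batch_norm(activations)
dropped = dropout(normalized, rate=0.5, rng=rng)
unchanged = dropout(normalized, rate=0.5, rng=rng, training=False)

print("per-feature mean after batch norm:", np.round(normalized.mean(axis=0)[:3], 6))
print("fraction of units zeroed by dropout:", np.mean(dropped == 0.0))
```

Rescaling by `1 / (1 - rate)` during training keeps the expected activation unchanged, so no extra scaling is needed when dropout is switched off at inference.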

Second, deep learning techniques can be introduced for wavefront reconstruction in AO bioimaging. This has not yet been achieved because aberration measurement and control in adaptive optical microscopy and retinal imaging remain intractable. This may be attributed to the fact that current deep learning-based approaches rely heavily on measured SH slope data, while direct wavefront sensing is challenging inside the specimens of adaptive optical microscopes. There have already been quite a few reports on learning-based wavefront sensing and reconstruction for astronomical AO systems, from which inspiration might be drawn. For adaptive optical bioimaging systems, with a proper design of the DNN input and output, deep learning could perhaps be adapted to handle phase corrections indirectly, based on the collected sequence of distorted wavefront images.

Last but not least, deep learning is especially promising for enabling light control or imaging through dynamic biological tissue. Inspired by Ref. 40, a CNN model can be designed to capture sufficient statistical variations to interpret speckle patterns under different conditions. By adopting such a statistical “one-to-all” deep learning method, the trained network may be made compatible with biological samples with live macroscopic parameters or in motion. Of course, further investigations are needed to explore how to access the in situ light fields through or within biological tissues, for which internal guidestars such as ultrasonic modulation,23 photoacoustic signals,9 and magnetically controlled absorbers19 may be considered.

Moving forward, we envision that, with further development in WFS, computational imaging, and AI, their integration has the potential to enable controllable optical delivery and image transmission or recovery through or within dynamic scattering media, such as in vivo biological tissue and freely moving multimode fibers. It is not hard to imagine that such a capability could benefit many optical applications, such as imaging, sensing, control, stimulation, and communication.

Acknowledgments

Shengfu Cheng and Huanhao Li contributed equally to the work. The work has been supported by the National Natural Science Foundation of China (Nos. 81671726 and 81627805), the Hong Kong Research Grant Council (No. 25204416), the Shenzhen Science and Technology Innovation Commission (No. JCYJ20170818104421564), and the Hong Kong Innovation and Technology Commission (No. ITS/022/18).