Recent advancement of chemical imaging in pharmaceutical quality control: From final product testing to industrial utilization

Abstract

Chemical imaging (CI) is a powerful tool for pharmaceutical analysis. Its strength lies in integrating traditional spectroscopy (one dimension) with imaging (two dimensions) to generate three-dimensional data hypercubes. Data pre-processing and processing methods are used to analyze these large data matrices and thereby meet different research objectives. This review summarizes pharmaceutical quality-control applications from the past few years in two groups: final product testing and industrial utilization. The scope of “quality control” here includes traditional analytical use, process understanding and manufacturing control. Finally, two major challenges for prospective commercial or industrial application, undesirable sample geometry and lengthy acquisition time, are discussed.

1. Introduction

Chemical imaging (CI) is a burgeoning technology that integrates spectroscopic measurement with scanning imaging. It generates three-dimensional hypercubes D(X×Y×λ), where X and Y represent the two spatial dimensions and λ denotes the spectral wavelength. The hypercube D(X×Y×λ) is unfolded into D′(XY×λ) so that classical spectral processing can be applied, as in conventional spectroscopy. Compared with traditional spectroscopy, the inherent advantage of CI is the additional spatial information, which makes it suitable for a broader range of pharmaceutical scenarios, especially component visualization and the assessment of distribution homogeneity. A stand-alone spectroscopic technique, by contrast, can only determine concentration or analyze polymorphs. In addition, this plane-based technology allows multiple planes at different depths to be measured, which makes it possible to explore the inner structure of a pharmaceutical dosage form.1
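The unfolding of D(X×Y×λ) into D′(XY×λ) can be illustrated with a minimal NumPy sketch. The array sizes and values are synthetic assumptions chosen only for illustration; they do not come from any instrument discussed here.

```python
import numpy as np

# Hypothetical hypercube: 4 x 5 spatial pixels, 6 wavebands (synthetic values).
nx, ny, n_bands = 4, 5, 6
D = np.arange(nx * ny * n_bands, dtype=float).reshape(nx, ny, n_bands)

# Unfold D(X x Y x lambda) into D'(XY x lambda): one spectrum per row.
D_unfolded = D.reshape(nx * ny, n_bands)

# Any per-pixel result (here the mean intensity) can be refolded into an image.
mean_intensity = D_unfolded.mean(axis=1)
image = mean_intensity.reshape(nx, ny)
```

Row `i * ny + j` of `D_unfolded` holds the spectrum of pixel (i, j), so spatial information is only set aside during spectral processing and can be restored afterwards by reshaping.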

“Hyperspectral imaging (HSI)” is often used as a synonym for CI but, strictly speaking, the two are not the same concept. CI has a broader scope: according to spectral resolution (i.e., the number of wavebands), it can be divided into three categories, namely multispectral imaging (MSI), HSI and ultraspectral imaging (USI). The term “hyperspectral imaging” is said to apply when more than 10 wavebands are recorded. Almost all of the CI discussed in this paper is therefore HSI, and the two terms are used interchangeably.

CI comprises several subclasses, depending on the spectroscopy it is coupled with: near-infrared (NIR) imaging, Raman imaging, terahertz pulsed imaging (TPI), ultraviolet (UV) imaging and Fourier transform infrared (FT-IR) imaging.2,3,4,5,6 NIR and Raman imaging seem to be the most widely used because their spectra are well understood and the instruments are relatively inexpensive, but each imaging technique has its own strengths. Data fusion of different spectroscopic sources has therefore been proposed, such as the combination of NIR and Raman, or of visible and NIR (435–1042 nm) and short-wave infrared (898–1751 nm).7,8 The inherent complementarity of the different spectroscopic techniques makes these combinations successful.

CI originated in remote sensing and was given a major boost by the medical field.9 In pharmaceutical research, CI is still a new technique but is receiving increasing interest. Applications in quality control have accumulated over several decades of development, ranging from final product testing to industrial manufacturing. The scope of “quality control” here goes beyond traditional analytical use and includes process understanding and manufacturing control. This means that researchers can set processing parameters on a scientific basis (e.g., determine the blending endpoint) and gain insights that can be transferred to scale-up or used to implement a real-time release testing (RTRT) strategy. These prospects are very attractive to researchers.

The purpose of this paper is to introduce the emerging CI technique, with particular attention to its inherent advantage of additional spatial information. The instrument configuration, imaging principle and parameter settings are presented first to give readers a basic knowledge of CI. The main data pre-processing and processing methods are presented next, since they are of great help in handling large data hypercubes. The subsequent part covers typical pharmaceutical applications, grouped into final product testing and industrial application. The industrial application part follows the manufacturing workflow of blending, granulation, tableting and coating. At the end of the paper, two major challenges, undesirable sample morphology and lengthy acquisition time, are discussed.

2. Image Acquisition

2.1. CI system composition

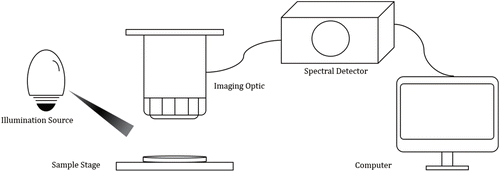

CI instrumentation varies with the scanning pattern, imaging optics and excitation light used, but a CI system typically consists of an illumination source, imaging optics, a spectral detector and a computer for data storage and processing. These components are shown schematically in Fig. 1.

Fig. 1. The composition of a typical CI system.

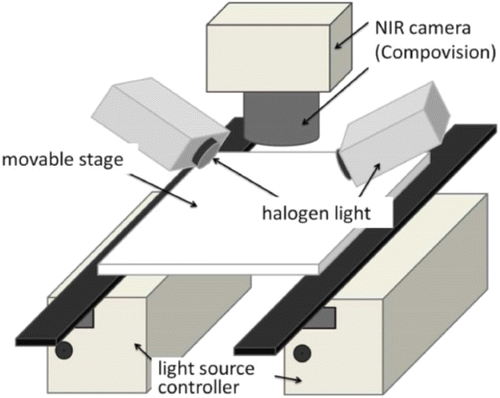

A detailed description of the imaging system set-up is beyond the scope of this paper. However, because a robust and reliable chemical image depends on it, the illumination configuration deserves special attention. Owing to the difference in refractive indices, about 5–15% of the photons may undergo specular reflection.9 These photons never penetrate the sample and therefore provide no useful information, so it is important to choose the illumination angle so as to avoid specular reflection. Figure 2 shows a schematic of an illumination configuration in which two halogen lights are mounted at an angle of 45° and the detector camera is placed perpendicular to the sample stage.10 A similar set-up with three illumination sources at 45° has been reported in another paper.11 However, according to a study in 2012, such a 45R0 configuration (illuminate at 45°, detect at 0°) only reduced specular reflection, and shadows still existed at the sample boundary.9 That study suggested the 0R0 configuration (diffuse light source, detect at 0°) to achieve total diffuse reflectance. This configuration was applied to monitoring the API content of tablets in 2017, where an Ulbricht sphere was used to generate homogeneous illumination.12

Fig. 2. A schematic of illumination configuration. Reproduced from Ref. 10.

2.2. Imaging principle

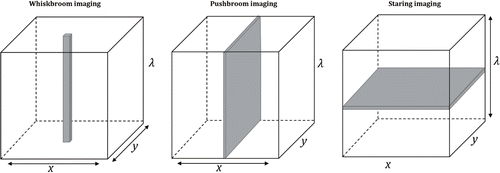

A chemical image is made up of hundreds of adjacent wavebands for each spatial pixel, forming a hypercube (X×Y×λ) with two spatial dimensions (X and Y) and one wavelength dimension (λ). It is currently not feasible to acquire data in all three dimensions simultaneously. According to the way the hypercube is constructed, CI can be grouped into three categories, as illustrated in Fig. 3.

Fig. 3. Hypercubes constructed with three different patterns.

(1) Whiskbroom imaging (Fig. 3 left). This pattern scans the sample point by point in the x-y plane (sample area), measuring one spectrum at each point until the whole plane has been covered.

(2) Pushbroom imaging (Fig. 3 middle). This line-scan pattern moves in the x-direction, and the spectra of a whole line along the y-axis of the sample area are recorded simultaneously.

(3) Staring imaging (Fig. 3 right). The whole x-y plane is imaged at a single wavelength, and the wavelength is then changed until the whole spectral range has been covered. This pattern is also known as “wide-field imaging” or “global imaging”.
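The three patterns fill the same hypercube in a different order, which the following sketch illustrates. The array `truth` is a hypothetical stand-in for the sample, and the frame counts ignore real detector geometry and timing.

```python
import numpy as np

rng = np.random.default_rng(0)
nx, ny, n_bands = 3, 4, 5
truth = rng.random((nx, ny, n_bands))   # hypothetical "true" hypercube of the sample

# Whiskbroom: one spectrum per (x, y) point.
whisk = np.empty_like(truth)
for i in range(nx):
    for j in range(ny):
        whisk[i, j, :] = truth[i, j, :]

# Pushbroom: one (y, lambda) frame per x position.
push = np.stack([truth[i, :, :] for i in range(nx)], axis=0)

# Staring: one (x, y) plane per waveband.
stare = np.stack([truth[:, :, k] for k in range(n_bands)], axis=-1)

# Number of separate acquisitions each pattern needs for the same cube.
frames_needed = {"whiskbroom": nx * ny, "pushbroom": nx, "staring": n_bands}
```

All three arrays are identical at the end; what differs is the number of acquisitions, which is one reason the patterns trade acquisition time against spatial resolution differently.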

The biggest distinction among the three patterns is spatial resolution. High spatial resolution requires a long acquisition time but suits demanding scenarios. Ranked from the highest resolution to the lowest, the patterns are whiskbroom, pushbroom and staring imaging. Whiskbroom imaging allows micrometer-scale measurement and is often found in microscopic imaging devices. Pushbroom imaging balances spatial resolution and acquisition speed; combined with a conveyor or moving stage, it is well suited to inline application.12,13 Staring imaging, with its poorer spatial resolution, is favored for wide-field measurements that do not need finely resolved images.14,15

2.3. Imaging parameter setting

High-quality chemical images are the foundation of efficient CI analysis: only a good measurement can provide unbiased information from which meaningful inferences can be drawn. This section therefore discusses how to set appropriate measurement conditions, taking into account common measurement parameters such as spatial pixel size, objective magnification (only for microscale devices), sample area and acquisition time. One study examined how such parameters affect the imaging quality of pharmaceutical tablets.16 The effects of focus level (top, middle, valley), measurement mode (HR, SL) and accumulation time (1.0s/2.0s for SL, 0.1s/0.5s for SL) were investigated. Measurement mode proved to be the crucial factor according to the results of soft independent modeling of class analogy (SIMCA), in which the groups from the two measurement modes showed the largest distance and the greatest dissimilarity. Note that the HR mode had a minimum step size of 0.1 μm, whereas the SL mode had one of 1.4 mm. In other words, it was the spatial resolution that influenced spectral quality most.

Appropriate spatial resolution therefore deserves great attention. In particular, for low-content or small-sized pharmaceutical substances, poor spatial resolution carries a high risk of masking by the matrix. It was reported that CI with a spatial resolution of 70 μm could not quantify magnesium stearate (MgSt) particles, which were less than 5 μm in size and made up only 5% w/w of the composition.17 With a similar instrument configuration and chemometric method but a higher spatial resolution of 25 μm, however, another article achieved quantitative analysis of the same substance.18 This comparison concerns NIR imaging, and a similar situation was found in Raman imaging. Three objectives (×10, ×50 and ×100) were used to analyze the same extrudates but gave inconsistent results for the solid state.19 The low spatial resolution setting (×10 objective, 30 μm pixel) depicted a predominantly amorphous drug, whereas the high spatial resolution setting (×100 objective, 0.5 μm pixel) detected crystalline drug particles larger than 0.5 μm.

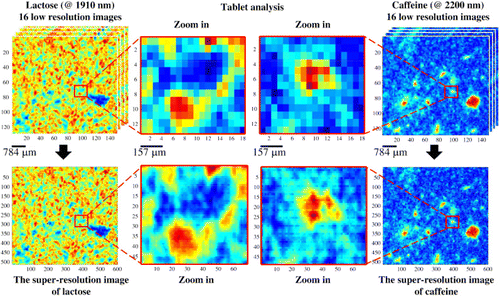

It should be noted, however, that high spatial resolution is not always the optimum solution, as it is prohibitively time consuming and comes at the cost of a smaller sampled area. A lengthy acquisition time limits routine use in industrial manufacturing, and a confined sampling area reduces the representativeness of the sample studied. The trade-off among spatial resolution, sample representativeness and reasonable experiment time arises in every CI application. One promising approach to balancing the three factors is to combine a staring imaging system with the chemometric methodology of “super-resolution”.20 The global imaging pattern provides a large sample area and fast acquisition, while the super-resolution method generates high-resolution images by fusing several low-resolution images. NIR imaging of pharmaceutical tablets has demonstrated the benefit of this approach at the macroscopic scale (Fig. 4). Sixteen original images were combined, and the processed images of lactose at 1910 nm and caffeine at 2200 nm achieved higher pixel densities and showed more chemical detail.

Fig. 4. Super-resolution for tablet evaluation. Reproduced from Ref. 20.

3. Image Data Analysis

3.1. Data pre-processing

Pre-processing aims to correct the physical perturbations that arise during CI measurement. Its purpose is to remove variations that are not related to the sample constituents and to prepare the data for the next step of data processing. Eliminating the influence of irregular morphology, rough sample texture and detector artifacts all falls within pre-processing. Many methods are available, and only a small selection is covered here. A brief overview of the main pre-processing techniques is given in two categories: spectral pre-processing and image pre-processing.

3.1.1. Spectral pre-processing

The first step of spectral pre-processing is spectral axis calibration, which assigns a specific wavelength value to each point on the spectral axis.9 Note that for devices equipped with tunable filters (e.g., an acousto-optic tunable filter or a liquid crystal tunable filter), calibration is unnecessary because the electronically controlled filter passes only the selected wavelength. Other conventional operations such as smoothing, scatter correction and derivatives are performed as in classical spectroscopy.21

(1) Smoothing: Smoothing denoises CI spectra to improve the signal-to-noise (SN) ratio. Many algorithms are available, of which Savitzky–Golay (SG) smoothing is probably the most familiar.

(2) Scatter correction: Correction is necessary because ideal diffuse reflectance is never achieved in practice. Standard normal variate (SNV) and multiplicative scatter correction (MSC) are the two common algorithms. SNV normalizes each spectrum individually, whereas MSC corrects each spectrum so that it matches a reference spectrum (often the mean spectrum).22,23 Other methods in this group include inverse MSC, extended MSC and extended inverse MSC.24

(3) Derivatives: Derivatives perform baseline correction. The first derivative removes only a constant baseline, whereas the second derivative removes both the baseline and a linear trend.24 Because differentiation reduces the SN ratio, smoothing is often applied before the derivative to suppress noise. The Savitzky–Golay (SG) derivative and the Norris–Williams (NW) derivative are two typical examples.
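The two scatter corrections named above can be sketched in a few lines of NumPy. This is a minimal illustration on synthetic spectra, assuming purely additive and multiplicative scatter; the function names `snv` and `msc` are our own and are not taken from any cited software.

```python
import numpy as np

def snv(spectra):
    """Standard normal variate: center and scale each spectrum (row) individually."""
    mean = spectra.mean(axis=1, keepdims=True)
    std = spectra.std(axis=1, ddof=1, keepdims=True)
    return (spectra - mean) / std

def msc(spectra, reference=None):
    """Multiplicative scatter correction against a reference (default: mean spectrum)."""
    ref = spectra.mean(axis=0) if reference is None else reference
    corrected = np.empty_like(spectra, dtype=float)
    for i, s in enumerate(spectra):
        slope, intercept = np.polyfit(ref, s, 1)   # fit s ~ intercept + slope * ref
        corrected[i] = (s - intercept) / slope
    return corrected

# Synthetic example: one underlying band distorted by different scatter effects.
wl = np.linspace(0.0, 1.0, 50)
pure = np.exp(-((wl - 0.5) ** 2) / 0.01)
spectra = np.vstack([a * pure + b for a, b in [(1.0, 0.0), (1.5, 0.2), (0.7, -0.1)]])

spectra_snv = snv(spectra)
spectra_msc = msc(spectra)
```

In this idealized case both corrections collapse the three distorted spectra onto a single curve; with real data the residual differences are what carry the chemical information.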

In addition, “Raman spikes” must be considered in Raman imaging. The term refers to extremely sharp signal peaks that are 10³–10⁶ times more intense than a normal peak.23 They are caused by cosmic rays and are routinely encountered with charge-coupled device (CCD) detectors, which are sensitive to high-energy cosmic rays.

Raman spikes mask details of chemical images and can lead to misidentification of the signal of interest. The “Nearest Neighbor Comparison (NNC)” method was therefore proposed to detect spikes and recover the spectral signal.25 Based on the statistical assumption that neighboring pixels share similar intensity values, NNC determines an offset value to identify spikes and replaces them with scaled values from the most similar neighbors. Most commercial software can also correct spikes in real time. However, some authors argue for correcting spikes after the measurement instead, in order to reduce the analysis time.26 Considering that Raman imaging takes tens of times longer than NIR imaging, correcting spikes afterwards may help considerably to speed up acquisition.
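The idea of comparing each pixel with its spatial neighbors can be sketched as follows. This is a simplified stand-in and not the published NNC algorithm: it uses the median of the four adjacent pixels and a fixed, user-chosen `offset`, both of which are assumptions made for illustration.

```python
import numpy as np

def despike(cube, offset):
    """Replace values exceeding the median of the 4 spatial neighbours by more than `offset`."""
    padded = np.pad(cube, ((1, 1), (1, 1), (0, 0)), mode="edge")
    neighbours = np.stack([
        padded[:-2, 1:-1], padded[2:, 1:-1],    # pixels above and below
        padded[1:-1, :-2], padded[1:-1, 2:],    # pixels left and right
    ])
    local_median = np.median(neighbours, axis=0)
    spikes = (cube - local_median) > offset
    cleaned = np.where(spikes, local_median, cube)
    return cleaned, spikes

# Synthetic Raman cube with one cosmic-ray spike in a single pixel and waveband.
rng = np.random.default_rng(0)
cube = 1.0 + 0.01 * rng.random((5, 5, 10))
cube[2, 2, 3] = 1000.0
cleaned, spikes = despike(cube, offset=10.0)
```

Because the comparison is made waveband by waveband, only the affected channel of the affected pixel is replaced and the rest of its spectrum is left untouched.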

3.1.2. Image pre-processing

Image pre-processing has two tasks: improving image quality and selecting the region of interest (ROI).

Regarding the first task, not all pixels are usable or meaningful because of detector artifacts. It is estimated that approximately 1% of the pixels in an NIR chemical image are “dead pixels”.27 Dead pixels are anomalous pixels with zero or extremely high values, ranging in extent from a single pixel to a cluster or a whole pixel line. A linear interpolation method can recover dead pixels by using information from the pixels neighboring the suspect one.27
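A minimal sketch of such a repair is given below for a single-waveband image. It interpolates linearly along each detector row only, and the rule used to flag dead pixels (zero or extremely high values) is a simple assumption; the cited method may differ in both respects.

```python
import numpy as np

def repair_dead_pixels(image, dead_mask):
    """Linearly interpolate flagged pixels from valid neighbours in the same row."""
    repaired = image.astype(float).copy()
    cols = np.arange(image.shape[1])
    for r in range(image.shape[0]):
        bad = dead_mask[r]
        if bad.any() and not bad.all():
            repaired[r, bad] = np.interp(cols[bad], cols[~bad], repaired[r, ~bad])
    return repaired

# Synthetic single-band image with a smooth intensity ramp.
truth = np.add.outer(10.0 * np.arange(4), np.arange(6.0)) + 1.0
image = truth.copy()
image[1, 2] = 0.0          # isolated dead pixel (zero value)
image[2, 3:5] = 1e6        # small cluster of saturated pixels
dead_mask = (image == 0.0) | (image > 1e5)

repaired = repair_dead_pixels(image, dead_mask)
```

For a hypercube, the same function can be applied to each waveband in turn; a whole dead row would need interpolation across rows instead, which this sketch does not attempt.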

In addition, chemical images can be influenced by the illumination configuration, the dark current of the detector or other physical disturbances. Image calibration is therefore of great importance for improving image quality.8 Using a white reference image ($R_{\mathrm{white}}$) and a dark reference image ($R_{\mathrm{dark}}$), a raw image ($R_{\mathrm{raw}}$) is calibrated according to Eq. (1), where $R_{\mathrm{cal}}$ is the calibrated image:

$$R_{\mathrm{cal}} = \frac{R_{\mathrm{raw}} - R_{\mathrm{dark}}}{R_{\mathrm{white}} - R_{\mathrm{dark}}} \tag{1}$$
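The calibration can be sketched in NumPy, assuming the commonly used form in which the dark reference is subtracted from both the raw and the white reference image before taking their ratio. The function name `calibrate` and the small guard value `eps` are illustrative choices.

```python
import numpy as np

def calibrate(raw, white, dark, eps=1e-12):
    """Reflectance calibration: (raw - dark) / (white - dark), element by element."""
    denom = white - dark
    denom = np.where(np.abs(denom) < eps, eps, denom)   # guard against division by zero
    return (raw - dark) / denom

# Synthetic check: build a raw cube from a known reflectance and recover it.
rng = np.random.default_rng(0)
true_reflectance = rng.uniform(0.1, 0.9, size=(4, 4, 6))
dark = np.full((4, 4, 6), 0.05)
white = np.full((4, 4, 6), 0.95)
raw = dark + true_reflectance * (white - dark)

calibrated = calibrate(raw, white, dark)
```

Since the reference images have the same shape as the raw hypercube, the correction is applied per pixel and per waveband, which also compensates for uneven illumination across the field of view.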

The second task, ROI selection, aims to separate the sample area of interest from the background. Binarization is often used to select the ROI, and determining the threshold is the hardest step. The difficulty is compounded in particle size estimation, where ROI selection is no longer merely pre-processing but part of the processing itself. One useful approach is to measure a standard substance as a reference under the same conditions as the test sample.28 Because the particle size of the standard is known, an optimal threshold can be chosen according to the agreement between the calculated and the real size.
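The reference-based threshold selection can be sketched as a simple search. The synthetic soft-edged disc, the candidate threshold grid and the equivalent-circle diameter used as the size measure are all assumptions made for this illustration and are not details of the cited study.

```python
import numpy as np

# Synthetic image of a reference particle: soft-edged disc, known diameter 20 pixels.
yy, xx = np.mgrid[0:64, 0:64]
r = np.hypot(xx - 32, yy - 32)
reference_image = 1.0 / (1.0 + np.exp((r - 10.0) / 2.0))   # intensity 0.5 at r = 10
known_diameter = 20.0

def equivalent_diameter(mask):
    """Diameter of the circle with the same area as the binarized region."""
    return 2.0 * np.sqrt(mask.sum() / np.pi)

# Try candidate thresholds and keep the one that best reproduces the known size.
thresholds = np.linspace(0.1, 0.9, 17)
errors = [abs(equivalent_diameter(reference_image > t) - known_diameter)
          for t in thresholds]
best_threshold = thresholds[int(np.argmin(errors))]
roi_mask = reference_image > best_threshold
```

The selected threshold would then be applied to test samples measured under the same conditions, which is the key assumption behind the approach.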

3.2. Data processing

Multivariate data analysis is essential for handling the large amount of information in CI images. Here, we introduce some of the most common multivariate analysis methods in terms of their mathematical principles. Univariate methods can also be used, but they are restricted to cases where a component-specific wavelength is available for analysis.14,29

3.2.1. Principal component analysis

Principal component analysis (PCA) is well known as a variable-reduction technique and, especially for spectral data, can greatly reduce the large number of spectral variables. PCA reduces the number of variables by forming linear combinations of the original variables. The resulting combinations are called principal components (PCs). The loading matrix (P) contains the weight coefficients that denote the importance of each original variable in the linear combination. The projections of the original data (D′) onto the PCs are the scores (T). A PCA model usually contains several PCs, ranked by how much of the variability in the original data each explains. The decomposition is expressed in Eq. (2), where E is the residual matrix:

$$D' = TP^{\mathrm{T}} + E \tag{2}$$
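A PCA of an unfolded hypercube can be sketched with a singular value decomposition. The two-component synthetic cube below is an assumption for illustration, and the data are mean-centered before decomposition, which is a common but not universal choice.

```python
import numpy as np

rng = np.random.default_rng(0)
nx, ny, n_bands = 8, 8, 30
wl = np.linspace(0.0, 1.0, n_bands)
s1 = np.exp(-((wl - 0.3) ** 2) / 0.01)     # hypothetical pure spectrum 1
s2 = np.exp(-((wl - 0.7) ** 2) / 0.01)     # hypothetical pure spectrum 2
c1 = np.tile(np.linspace(0.0, 1.0, nx)[:, None], (1, ny))   # gradient along x
cube = c1[..., None] * s1 + (1.0 - c1)[..., None] * s2
cube += 0.01 * rng.standard_normal(cube.shape)

# Unfold, mean-center and decompose.
D_unfolded = cube.reshape(nx * ny, n_bands)
centered = D_unfolded - D_unfolded.mean(axis=0)
U, s, Vt = np.linalg.svd(centered, full_matrices=False)

n_pc = 2
T = U[:, :n_pc] * s[:n_pc]            # scores
P = Vt[:n_pc].T                       # loadings
E = centered - T @ P.T                # residuals
explained = s**2 / np.sum(s**2)       # fraction of variance per PC

# Refold the first score vector into a score image.
score_image = T[:, 0].reshape(nx, ny)
```

Here the first score image reproduces the concentration gradient along x (up to sign), which is the kind of spatial trend that score images are used to reveal.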

PCA is widely used in semi-quantitative and qualitative studies because it is straightforward and simple. In some cases, the PC loading plots closely resemble pure component spectra, and the PC score images reveal the trend of the studied indicator or sample clustering.3,30,31 In other cases, however, the score images carry no chemical meaning and the method is unsuitable, especially for compositionally complex chemical images.

3.2.2. Partial least squares

Partial least squares (PLS) is related to PCA, but it projects both the independent variables (X) and the dependent variables (Y) into a new space. T and U are the score matrices of X and Y, P and Q are the corresponding loading matrices, and E and F are the corresponding error terms. The aim is to maximize the covariance between T and U. The basic PLS model is given in Eqs. (3) and (4):

$$X = TP^{\mathrm{T}} + E \tag{3}$$

$$Y = UQ^{\mathrm{T}} + F \tag{4}$$

PLS is a quantitative method that has been applied successfully in many pharmaceutical analyses.14,32,33 Other relevant algorithms include partial least squares 2 (PLS2) and classical least squares (CLS).
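For a single response variable, the PLS model can be fitted with the NIPALS algorithm, sketched below. This is the PLS1 special case, in which the Y scores are not computed explicitly; the function names and the noise-free synthetic data are assumptions for illustration.

```python
import numpy as np

def pls1_fit(X, y, n_components):
    """NIPALS PLS1: returns the regression vector and the centering terms."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xr, yr = X - x_mean, y - y_mean           # residuals, deflated at each step
    W, P, q = [], [], []
    for _ in range(n_components):
        w = Xr.T @ yr
        w /= np.linalg.norm(w)                # weights: direction of maximum covariance with y
        t = Xr @ w                            # X scores
        tt = t @ t
        p = Xr.T @ t / tt                     # X loadings
        q_a = yr @ t / tt                     # y loading
        Xr = Xr - np.outer(t, p)
        yr = yr - q_a * t
        W.append(w)
        P.append(p)
        q.append(q_a)
    W, P, q = np.array(W).T, np.array(P).T, np.array(q)
    b = W @ np.linalg.solve(P.T @ W, q)       # regression vector in the original X space
    return b, x_mean, y_mean

def pls1_predict(X, b, x_mean, y_mean):
    return (X - x_mean) @ b + y_mean

# Synthetic calibration set: 3-component mixtures, y is the content of component 0.
rng = np.random.default_rng(0)
S = rng.random((3, 25))                       # hypothetical pure spectra
C_cal, C_new = rng.random((40, 3)), rng.random((5, 3))
X_cal, X_new = C_cal @ S, C_new @ S

b, x_mean, y_mean = pls1_fit(X_cal, C_cal[:, 0], n_components=3)
y_cal_pred = pls1_predict(X_cal, b, x_mean, y_mean)
y_new_pred = pls1_predict(X_new, b, x_mean, y_mean)
```

Applied to every row of an unfolded hypercube, the predictions can be refolded into a concentration map; with real, noisy data the number of components would be chosen by validation rather than fixed in advance.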

3.2.3. Multivariate curve resolution

Multivariate curve resolution (MCR) is based on the fundamental assumption of a multivariate form of the Beer–Lambert law.2 The spectral intensity is correlated with the concentration of each pure component, and the total spectrum in each pixel is a weighted sum of the pure component spectra.34 MCR performs a bilinear decomposition of the original data (D′) into concentration profiles (C) and pure component spectra (S). If the pure spectra are available, the concentration information can be estimated easily. Equation (5) shows the MCR structure, where E is the residual matrix:

$$D' = CS^{\mathrm{T}} + E \tag{5}$$

The model is built with the iterative algorithm of alternating least squares (ALS). MCR–ALS starts from an initial estimate of S^T and then alternately computes C and S^T. It ends when the residual matrix E converges (e.g., a convergence criterion of 0.1%). The number of components must be given at the very first stage of the computation. It can be determined by singular value decomposition (SVD), evolving factor analysis (EFA) or prior chemical knowledge of the sample system.35,36,37 In addition, constraints such as non-negativity and unimodality have to be imposed during the iterations. The main advantage of MCR–ALS is that it needs only estimates of the pure component spectra, whereas the PLS methods above require large calibration sets.37
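The alternating scheme can be sketched as follows. This is a minimal illustration under stated assumptions: non-negativity is imposed by simply clipping negative values to zero rather than by a true non-negative least-squares solver, no unimodality constraint is applied, and convergence is judged by the change in relative lack of fit between iterations.

```python
import numpy as np

def mcr_als(D, S_init, max_iter=200, tol=1e-3):
    """Minimal MCR-ALS: D ~ C @ S.T with non-negativity imposed by clipping."""
    S = S_init.copy()
    previous_lof = np.inf
    for _ in range(max_iter):
        C = np.clip(D @ np.linalg.pinv(S.T), 0.0, None)     # least-squares update of C
        S = np.clip(np.linalg.pinv(C) @ D, 0.0, None).T     # least-squares update of S
        lof = np.linalg.norm(D - C @ S.T) / np.linalg.norm(D)
        if abs(previous_lof - lof) < tol:                   # 0.1% change in lack of fit
            break
        previous_lof = lof
    return C, S, lof

# Synthetic two-component data with strictly positive profiles.
rng = np.random.default_rng(0)
wl = np.linspace(0.0, 1.0, 40)
S_true = np.column_stack([
    np.exp(-((wl - 0.3) ** 2) / 0.02) + 0.05,
    np.exp(-((wl - 0.7) ** 2) / 0.02) + 0.05,
])
C_true = rng.random((100, 2)) + 0.2
D = C_true @ S_true.T

# Start from slightly perturbed pure spectra, as if taken from a reference library.
S_init = S_true + 0.02 * rng.random(S_true.shape)
C_est, S_est, lack_of_fit = mcr_als(D, S_init)
```

Refolding each column of `C_est` gives one distribution map per component. Note that a low lack of fit does not by itself guarantee a unique solution, which is why constraints and good initial estimates matter in practice.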

3.2.4. Classification method

Classification techniques group pixels into classes according to the similarity of their chemical composition and are particularly useful for counterfeit detection or group sorting. “Classification method” is used here in a general sense that covers both “narrow classification” and “clustering” methods. Narrow classification is what is usually called supervised classification and is based on a calibration set. Clustering methods, by contrast, are unsupervised and do not require a calibration set.

Common narrow classification methods include k-nearest neighbor (k-NN), PLS discriminant analysis (PLS-DA), support vector machine (SVM) and soft independent modeling of class analogy (SIMCA).8,38 These methods may return quite different classification results for the same study. There is no one-size-fits-all approach, so the practical course is to try several methods and choose the most appropriate one.

In one example, a plot of average cluster size against process time showed a sharp decline at roller compaction, indicating that this step produced the greatest reduction in cluster size. A clear improvement in API distribution was also found in the chemical images. Because of the complex composition in this study, a multivariate data-processing method was required to characterize the drug clusters. A five-factor PLS-DA model was built, and only pixels with the top 4% of model scores were used. Other isolated pixels were assigned to the neighboring cluster on the basis of spatial proximity, which finally allowed the cluster size to be estimated.

Among clustering methods, K-means clustering has been used in pharmaceutical classification.39 Given a predetermined number of clusters k and the distances between pixels, the algorithm aims to maximize intra-group homogeneity and inter-group heterogeneity. However, K-means clustering is exclusive, meaning that each pixel belongs entirely to one cluster. This is clearly unsuitable for certain pharmaceutical applications (e.g., tablet composition), since one pixel usually contains several substances. Such exclusive methods are called “hard modeling techniques”, and the alternative is to turn to “soft modeling techniques”. The fuzzy C-means (FCM) algorithm, for instance, assigns every pixel a fractional degree of membership in all clusters simultaneously. It should be pointed out that FCM and K-means share similar computation steps, and both face the same problem of determining the appropriate number of clusters (k value).40,41
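The difference between hard and soft assignment can be seen in a small fuzzy C-means sketch. The pure spectra, the pixel set and the fuzzifier value m = 2 are assumptions for illustration; taking the largest membership of each pixel gives the kind of exclusive labels that a hard method would return.

```python
import numpy as np

def fuzzy_c_means(X, k, m=2.0, n_iter=100, seed=0):
    """Basic fuzzy C-means: returns memberships U (pixels x k) and cluster centers."""
    rng = np.random.default_rng(seed)
    U = rng.random((X.shape[0], k))
    U /= U.sum(axis=1, keepdims=True)                  # memberships sum to 1 per pixel
    for _ in range(n_iter):
        Wm = U ** m
        centers = (Wm.T @ X) / Wm.sum(axis=0)[:, None]
        dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2) + 1e-12
        inv = dist ** (-2.0 / (m - 1.0))
        U = inv / inv.sum(axis=1, keepdims=True)
    return U, centers

# Hypothetical pixels: 10 of pure substance A, 10 of pure B and one 50/50 mixed pixel.
A = np.array([1.0, 0.2, 0.0, 0.0])
B = np.array([0.0, 0.0, 0.3, 1.0])
fraction_A = np.array([1.0] * 10 + [0.0] * 10 + [0.5])
pixels = np.outer(fraction_A, A) + np.outer(1.0 - fraction_A, B)

U, centers = fuzzy_c_means(pixels, k=2)
hard_labels = U.argmax(axis=1)    # exclusive assignment, as a hard method would give
```

The mixed pixel receives a membership of about 0.5 in both clusters, information that is lost once it is forced into a single class by `hard_labels`.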

4. Pharmaceutical Application of CI

4.1. Final product testing

CI applications cover almost all aspects of pharmaceutical product analysis, including component content determination, counterfeit detection, solid-state transformation, dissolution monitoring, particle size estimation and tablet hardness analysis. A brief overview is given in Table 1.

Table 1 shows that the same research objective is sometimes achieved by two CI technologies applied to the same system, or by two CI technologies in separate studies. For component visualization and polymorphic transformation monitoring, for example, both UV imaging and NIR imaging were used in a study in 2014, and MSI was used for the same purpose in a study in 2015.35,42 It should be kept in mind that CI techniques coupled with different spectroscopies overlap to a large extent in their capabilities. Returning to the study above, the particular strength of UV imaging is that absorptivity in the UV region is stronger than in the NIR region, which reduced the scanning time and enabled fast detection. Furthermore, by extending to six channels (i.e., six wavelengths), UV imaging obtained enough chemical information to perform similarly to NIR-CI.

Table 1 also shows that CI and stand-alone spectroscopy are sometimes used in the same study for similar work. In a 2015 study of low-content quantitation,43 Raman imaging provided histograms of pixel counts against component concentration and returned a weighted concentration value based on those histograms. In this way, a larger sample area was taken into account and the risk associated with random sampling was reduced, which again highlights the main advantage of CI.

| Type of application | Analysis technology | Sample analyzed | Data pre-processing | Data processing | Ref. |
|---|---|---|---|---|---|
| Polymorphic transformation | NIR imaging | Tablets comprised of three forms carbamazepines | Second derivative | PCA, MCR-ALS, parallel factor analysis (PARAFAC) | 36 |
| Visualization of API distribution and its solid state | NIR imaging | 3D printed tablet | SNV, SG smoothing, 2nd derivative, mean centering | MCR-ALS | 44 |
| Quantification of water content | NIR imaging, Raman spectroscopy | 2 simple model formulations | Radiometric calibration | PCA, PLS | 45 |
| Low-content quantification | Raman imaging | 61 powder mixtures of three component | Cosmic ray artifact removal, SNV, multiplicative scatter correction | PLS | 46 |
| Visualization of API distribution and its solid state | NIR imaging, UV imaging | Compact of two components | Second derivative SG filter, SNV | MCR-ALS | 17 |
| Content determination and polymorphic transformation | Mass spectrometry imaging (MSI) | a house manufactured tablet and a commercialized tablet | | PCA, MCR-ALS, Independent component analysis (ICA) | 42 |
| Characterization of dissolution behavior | UV imaging, Raman spectroscopy | Compacts of 3 mg weight | | MCR-ALS | 35 |
| Determination of the quantitative composition | NIR imaging | 4 brand commercial tablets | SNV, SG smoothing | MCR-ALS | 15 |
| API quantitative analysis | Raman imaging | Model mixture with SERS colloid | | MCR-ALS | 43 |
| Elucidation of falsified medicines composition | Raman imaging, FT-IR imaging | 2 reference batches and 5 falsified batches | Kubelka–Munk correction, Asymmetric Least Square baseline correction | MCR-ALS | 2 |
| Monitoring of tablets disintegration | Raman imaging | Tablets of a diameter 10mm and a weight of 350 mg | | PCA, direct classical least squares (DCLS) | 3 |
| API distributional homogeneity | NIR imaging | 6 brand commercial tablets | SG smoothing, normalizing, derivatives | Distributional Homogeneity Index (DHI) | 4 |
| Hardness and relative density determination | NIR imaging | Pure MCC compact | Reference standard spectrum calibration | PLS | 14 |
| Analysis of tablet inner structure | TPI | Multiple unit pellet system (MUPS) | | Coating thickness calculation | 1 |
| Counterfeit detection | NIR imaging | 30 generic tablets from five different suppliers | 2nd SG derivative | PCA, PLS | 47 |
| Classification of multiple components | NIR imaging | Binary compact | SNV, SG derivative | PLS-DA | 48 |
| Counterfeit detection | Raman imaging | 26 counterfeits and 8 genuine tablets | | PCA, KNN, SIMCA, LDA | 38 |
| Counterfeit detection | Raman imaging, near-infrared spectroscopy | 8 samples of anabolic tablets | | PCA, MCR-ALS | 30 |

4.2. Industrial utilization

4.2.1. Blending

Blending units, usually combined with a milling module, play a basic role in the pharmaceutical industry. Blending aims to produce a homogeneous mixture in which each component has the desired particle size, a property referred to as “blend uniformity”. Uniformity testing of pharmaceutical intermediates enables early risk detection and lean production and makes it easier to obtain a qualified final product. The main approach to blend testing is based on high-performance liquid chromatography (HPLC):49 several samples are taken from the blend, analyzed by HPLC, and the relative standard deviation (RSD) is calculated. An alternative is NIR spectroscopy, which uses spectral differences to monitor blend uniformity.50 The NIR method is valued for being noninvasive and suitable for inline industrial use. However, both the HPLC and NIR methods share the same limitation of providing no spatial information. They can only determine whether the nominal content meets the specification and cannot reveal API agglomeration.
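The limitation of a bulk content value can be made concrete with a small numerical sketch. The two concentration maps below are hypothetical and deliberately constructed to have the same mean API content; the function name `rsd_percent` is our own.

```python
import numpy as np

def rsd_percent(values):
    """Relative standard deviation in percent (sample standard deviation / mean)."""
    values = np.asarray(values, dtype=float)
    return 100.0 * values.std(ddof=1) / values.mean()

# Two hypothetical 20 x 20 API concentration maps (% w/w) with identical mean content.
uniform_map = np.full((20, 20), 5.0)
agglomerated_map = np.full((20, 20), 4.0)
agglomerated_map[8:12, 8:12] = 29.0        # one API-rich agglomerate

bulk_uniform = uniform_map.mean()          # what a bulk assay of the whole area would see
bulk_agglomerated = agglomerated_map.mean()

pixel_rsd_uniform = rsd_percent(uniform_map.ravel())
pixel_rsd_agglomerated = rsd_percent(agglomerated_map.ravel())
```

Both maps give the same bulk content, so a content check alone passes in either case, whereas the pixel-level spread and the map itself expose the agglomerate.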

CI can be used to address the problem of API agglomeration. In one study, three batches were produced under different process conditions: Batch 1 (milled, 0.8 mm screen), Batch 2 (not milled) and Batch 3 (milled, 1.2 mm screen).29 Each batch underwent NIR imaging analysis. Significant API agglomerates of about 800 μm in diameter were found in the unmilled Batch 2. Batch 1, which used the smaller screen, had no large API particles at all. Smaller, occasional agglomerates of about 180 μm were found in Batch 3 because of the larger screen.

CI can also be used to investigate blending efficiency.33 In a more complicated blending process consisting of many subunits, samples from each step were imaged to track the breakdown of drug clusters and determine the efficiency of each subunit. The sharpest decline in cluster size was observed at the roller compaction unit, corresponding to a clear improvement in distributional homogeneity in the chemical images.

Another application is to reveal the dynamics of blending. Ten individual trials were run with different blending times (0.5, 1, 2, 5, 10, 15, 20, 25, 30 and 40 min) while all other parameters were kept the same.51 The powder blend from each trial was removed and compressed into a compact, and six NIR images were taken of the top, cross-section and bottom surfaces of the compact. Multivariate analysis was performed, and the PCA score plots traced the trajectory of the API during blending. The blending was dominated by convective mixing. The API accumulated at the bottom and top surfaces at the very beginning and began to spread along the top surface from the third trial (2 min). A blending time of 15 min was recommended as the endpoint. Notably, according to published literature, the mechanism observed in this mini-blender study was similar to that at larger scale. The result points to a bright future for CI in process understanding and optimization.

4.2.2. Granulation

High shear granulation is a common granulation method in the manufacture of solid dosage forms. Well-formed granules with good flowability, proper particle size and good homogeneity benefit the subsequent tableting steps. However, over-granulation sometimes occurs, producing oversized granules and wasting energy. An NIR-CI study was carried out to detect over-granulation and explain its internal mechanism.32 The study comprised nine batches at a 5 kg scale under different granulation times and impeller speeds. Long granulation times and fast impeller speeds were used to simulate over-granulation. NIR-CI showed, as traditional granulation tests do, that particle size increased as over-granulation proceeded, but CI could additionally detect changes in the distribution of the chemical composition. A model of the segregation process was therefore proposed: over-granulation started with the accumulation of hydrophilic excipient through the water, followed by segregation of the hydrophobic API, and then progressed to a consolidation stage.

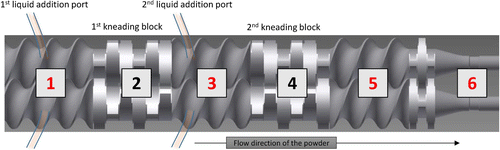

Twin-screw wet granulation (TSWG) is another effective granulation technique. Its continuous mode of manufacture, in contrast to traditional batch manufacture, has attracted much attention from pharmaceutical manufacturers, but the granulation mechanism remains to be explored further. CI has proved to be an efficient tool for understanding TSWG, for example in studying residence time distribution (RTD) and axial mixing.13 A set of designed experiments with different screw design parameters and process parameters was performed and imaged inline by NIR imaging. Quantitative indexes of RTD and axial mixing were extracted from the NIR images. By relating these indexes to the independent variables (screw design parameters or process parameters), screw speed was identified as the most influential variable. Note, however, that only granules from the final exit compartment of the TSWG were studied, and the intermediate compartments remained poorly understood. The research group’s subsequent work focused on four compartments inside the barrel: compartment 1 (wetting area), compartment 3 (first kneading compartment), compartment 5 (second kneading compartment) and compartment 6 (outlet unit), as shown in Fig. 5. Granules were removed from the four compartments and their cross-sections were analyzed.11 The PCA score images of compartments 1 to 6 showed a clear trend of API redistribution along the TSWG barrel.

Fig. 5. Schematic diagram of the twin-screw granulation. Reproduced from Ref. 11.

4.2.3. Tableting

Tableting is another essential procedure and has a strong impact on subsequent production. Wahl et al. investigated the suitability of an NIR imaging system for tablet manufacturing and built an inline system.12 They achieved continuous monitoring of component quantitation, component distribution and crushing strength. The system consisted of a flat belt conveyor for transporting tablets and a spectral detector located directly above the conveyor at a distance of ca. 50 cm. The conveyor moved at a speed of 6 cm/s, giving a spatial step of ca. 200 µm in the moving direction. The authors stated that the NIR imaging set-up has strong potential to be scaled up and transferred to the production stream. Such an inline approach is promising, but the existing system can analyze only a single tablet at a time, and more exploratory work remains to be carried out.
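The conveyor speed and spatial step quoted above together imply the acquisition rate such a set-up would need to sustain. The following Python sketch works through that arithmetic under the assumption that the detector records one line per spatial step; the function names and the 10 mm tablet length are hypothetical and are not taken from Ref. 12.

```python
def required_line_rate(speed_cm_per_s: float, step_um: float) -> float:
    """Lines per second needed so that consecutive lines lie one spatial step apart."""
    speed_um_per_s = speed_cm_per_s * 10_000  # 1 cm = 10,000 um
    return speed_um_per_s / step_um


def lines_per_tablet(tablet_length_mm: float, step_um: float) -> float:
    """Number of lines covering a tablet of the given length along the moving direction."""
    return tablet_length_mm * 1_000 / step_um  # 1 mm = 1,000 um


# Values reported in the text: 6 cm/s conveyor speed, ca. 200 um spatial step.
line_rate = required_line_rate(6, 200)
# Hypothetical 10 mm tablet, used only to illustrate the calculation.
n_lines = lines_per_tablet(10, 200)

print(f"required line rate: {line_rate:.0f} lines/s")
print(f"lines per 10 mm tablet: {n_lines:.0f}")
```

Under these assumptions the detector would have to deliver a few hundred lines per second, which gives a sense of the throughput demanded by inline tablet imaging.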

4.2.4. Coating

After a series of operations such as blending, granulation and tableting, coating is the last production procedure. Many process analytical technologies are capable of monitoring film coating, such as NIR or Raman sensors. These methods measure coating thickness indirectly from absorbance differences, so calibration models and complex multivariate data analysis are required.52,53,54 Terahertz technology, by contrast, is a direct approach, which makes it one of the most popular.55,56 Each time the incident terahertz pulse reaches an interface (e.g., the air-coating interface or the coating-core interface), a portion of it is reflected back to the detector. The time delay between the reflected signals is used to calculate the coating thickness directly. Another advantage of terahertz technology is its strong signal intensity compared with other spectroscopic methods. The intense signal can penetrate the tablet surface and detect coating layers up to 300 µm thick.57 This broad measurement range makes the technique applicable to a wide variety of scenarios.
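The time-of-flight principle described above can be illustrated with a short calculation. The Python sketch below assumes normal incidence and the standard relation d = cΔt/(2n), where Δt is the delay between the reflections from the air-coating and coating-core interfaces and n is the refractive index of the coating at terahertz frequencies. The function name and the example values (a 1 ps delay and n = 1.5) are hypothetical and are not taken from the cited studies.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def coating_thickness_um(delay_ps: float, refractive_index: float) -> float:
    """Coating thickness (um) from the delay between two reflected pulses.

    Assumes normal incidence: the pulse crosses the coating twice (down and
    back), hence the factor of 2, and travels at c / n inside the coating.
    """
    delay_s = delay_ps * 1e-12
    thickness_m = SPEED_OF_LIGHT_M_PER_S * delay_s / (2.0 * refractive_index)
    return thickness_m * 1e6


# Hypothetical example: 1 ps delay, assumed refractive index of 1.5.
print(f"{coating_thickness_um(1.0, 1.5):.1f} um")
```

Because the only material parameter is the refractive index, no chemometric calibration model is needed, which is the sense in which the measurement is direct.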

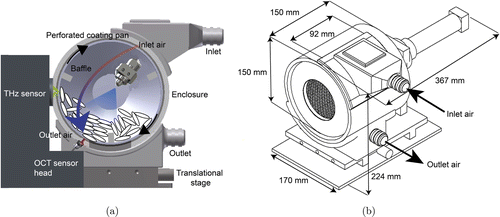

TPI inherits the advantages of direct measurement and a broad measurement range. In addition, it can resolve the inter-tablet coating distribution and three-dimensional thickness homogeneity. Since TPI provides a map of coating thickness over the whole dosage form, it helps in better understanding tablet coating. A commercial TPI system coupled with a specially designed laboratory-scale pan coater has been developed to perform inline measurement, as shown in Fig. 6.57 The terahertz sensor was perpendicular to the center of the coating unit to ensure specular reflection of the terahertz pulse. The growth of the coating was tracked and a steady increase in thickness was observed. The TPI results agreed well with the reference thickness after 50 min of coating, corresponding to a thickness larger than 50 µm. Another study of the same system in 2018 found slight discrepancies between inline and offline TPI measurements.6 Such disagreement could, however, be corrected by adjusting the number of measurements per minute.

Fig. 6. (a) Schematic diagram of the laboratory-scale pan coater coupled to the TPI measurement and (b) a technical drawing of the pan coater in perspective view. Reproduced from Ref. 57.

Some other derivative applications have arisen from traditional TPI. It was reported that tensile strength could be predicted and surface roughness could be correlated with TPI-derived parameters, namely film thickness, surface reflectance and the interface density difference (between the film layer and the core tablet).58 Looking ahead, feedback control has been proposed as an addition to the TPI system, given its considerable potential to reduce the risk of crack defects.

5. Challenges

CI possesses unique strengths because of the additional spatial dimension, but it is accompanied by challenges that originate from the spatial information: how to obtain high-quality sample images and how to image as fast as possible. Here, we narrow the first question down to undesirable sample geometry, although illumination configuration and the setting of imaging parameters are also relevant to the problem. Both challenges are discussed in detail below.

5.1. Undesirable sample geometry

A flat surface is clearly preferable for HSI owing to the consistent path length between the detector and the sample surface. For non-flat surfaces, undesirable artefacts arise, and geometrical correction is needed to remove morphological effects while preserving the information of interest.41 We roughly classify the various correction methods into two groups: instrument-based corrections and data-based corrections.

Instrument-based corrections rely on innovative equipment and carry out morphological correction during the measurement. LiveTrack™ is one example.59 It constantly adjusts the sample angle and allows continuous auto-focus, achieving a fast acquisition speed and a large tolerance of surface roughness at the same time. This technology has been applied to oblong tablets with embossed surfaces.

Data-based corrections are performed after the measurement. One example is a software correction that adjusts for physical effects.60 Three predominant principles were investigated, namely surface diffuse reflection, special propagation behavior and the variation in arc length along the sample surface, and were applied to modify each pixel intensity value. The methodology was validated using a uniform spherical Teflon sample. The corrected intensity profile showed a drastic reduction in variation, from 43% to 7%. This software correction is restricted to round objects. For relatively complex shapes, another approach uses spectroscopic pre-treatments with no shape assumption.61 Three classes of pre-treatment were studied: response linearization, baseline correction and multiplicative correction. All the methods and their combinations were compared to find the optimal performance.

These correction operations are all preliminary solutions suited to simple scenarios. In real industrial or commercial applications, with more complex sample morphology or physical environments, more powerful and generalized methods need to be developed in future studies.

5.2. Lengthy acquisition time

CI acquisition is often lengthy, taking up to several hours or even tens of hours. There are several reasons for this. (1) Owing to the weak spectral response, especially the weak Raman response, spectra must be averaged to reach a sufficient signal-to-noise (S/N) ratio, which increases the acquisition time. (2) Another reason is the small spatial coverage. Most current commercial Raman instruments are equipped with global or point laser sources that cover only sub-millimeter or sub-centimeter measurement scales. To image enough sample area and increase the representativeness of the measurement, a lengthy acquisition time is inevitable. (3) Moreover, poor detector performance may also have a negative influence, since multi-channel acquisition is beyond the ability of such detectors.
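The cost of spectral averaging in point (1) can be made concrete with a simple estimate. The Python sketch below assumes uncorrelated noise, for which the S/N ratio of an averaged spectrum grows with the square root of the number of averaged spectra, and assumes point-by-point mapping with a fixed exposure per spectrum. All numerical values (single-spectrum S/N of 5, target S/N of 50, 1 s exposure, a 31 × 31 pixel map) are hypothetical and serve only as an illustration.

```python
import math


def averages_needed(snr_single: float, snr_target: float) -> int:
    """Number of spectra to average, assuming S/N scales with sqrt(N)."""
    if snr_target <= snr_single:
        return 1
    return math.ceil((snr_target / snr_single) ** 2)


def map_time_hours(n_pixels: int, exposure_s: float, n_averages: int) -> float:
    """Total acquisition time for point-by-point mapping, in hours."""
    return n_pixels * exposure_s * n_averages / 3600.0


# Hypothetical values: improving S/N tenfold requires 100 averages per pixel.
n_avg = averages_needed(snr_single=5.0, snr_target=50.0)
total_h = map_time_hours(n_pixels=31 * 31, exposure_s=1.0, n_averages=n_avg)

print(f"averages per pixel: {n_avg}")
print(f"total map time: {total_h:.1f} h")
```

Because the required number of averages grows with the square of the desired S/N improvement, even a modest map can reach acquisition times of many hours under these assumptions.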

In the past few years, several emerging instruments have been proposed to achieve relatively fast imaging. Ishikawa et al. introduced a newly developed device equipped with an upgraded InGaAs detector capable of high-quality spectra acquisition over the broad spectral region from 1000 nm to 2350 nm.10 It took less than 5 s to scan an area of 150 × 200 mm over the whole NIR band, whereas traditional instruments need approximately 5–10 min or even more for the same area. Similar macro-scale imaging was seen in Qin’s study of a Raman imaging device.62 They constructed a line-scan Raman imaging system for high-throughput analysis. This system used a custom-designed 785 nm line laser as the excitation source and a long 24 cm excitation line that was reflected from a 45° dichroic beam splitter. The long laser line enabled large-area scanning and rapid evaluation. It was reported that the scanning time could be shortened from hours to minutes. Other methods of fast Raman imaging involve a compressive detection strategy.63,64 Compression was implemented on a spatial light modulator (SLM) in the form of compressive filters, which means that data processing was incorporated into the hardware. The filter functions could be generated by the multivariate analysis method PLS. More specifically, the coefficient vector was calculated first, and the negative parts of the vector were then converted into positive ones. With these filter functions, full spectra could be compressed into a single channel, achieving a scanning speed exceeding 1 ms per pixel.

Special sample preparation is another approach to fast imaging. For substances that are active in surface-enhanced Raman spectroscopy (SERS), composite samples can be made with a SERS colloid (primarily silver or gold nanoparticles). SERS is well known as a powerful Raman-enhancing tool and thereby reduces collection times and increases scanning speed. The speed improvement was evident in a comparative study.43 The measuring time of each SERS sample was only 20 min for a map size of 49 × 49 pixels, compared with 4.2–53 h for ordinary Raman imaging with a measurement area of 31 × 31 pixels. In addition, the SERS colloid film could be fully removed from the composite sample after imaging, indicating that the method is nondestructive, like ordinary Raman imaging.
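Because the two map sizes in the comparison above differ, the speed gain is easier to judge per pixel. The Python sketch below normalizes the reported times by the number of pixels; the function name is hypothetical, and the calculation assumes that the measuring time is spread evenly over all pixels of a map.

```python
def seconds_per_pixel(total_time_s: float, pixels_per_side: int) -> float:
    """Average acquisition time per pixel for a square map."""
    return total_time_s / (pixels_per_side ** 2)


# Figures reported in the text (Ref. 43).
sers = seconds_per_pixel(20 * 60, 49)            # 20 min, 49 x 49 pixels
raman_fast = seconds_per_pixel(4.2 * 3600, 31)   # 4.2 h, 31 x 31 pixels
raman_slow = seconds_per_pixel(53 * 3600, 31)    # 53 h, 31 x 31 pixels

print(f"SERS: {sers:.2f} s/pixel")
print(f"ordinary Raman: {raman_fast:.1f} to {raman_slow:.1f} s/pixel")
print(f"speed-up: {raman_fast / sers:.0f}x to {raman_slow / sers:.0f}x")
```

On this per-pixel basis the SERS maps correspond to roughly half a second per pixel, against tens to hundreds of seconds per pixel for ordinary Raman imaging.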

Despite these innovations, the approaches are still questioned for the trade-off between time saved and poor spatial resolution (or operational complexity). Besides, all the aspects discussed above concern the reduction of acquisition time, and the long processing time should also be considered. More efficient chemometric tools are needed to handle vast CI hypercubes.

6. Conclusion

There is no doubt that CI is a powerful tool in various pharmaceutical applications. Two groups of applications, final product testing and industrial utilization, are summarized here, ranging from analytical use to process understanding and manufacturing control. Almost all of the notable CI research relies on its unique advantage of combining spectral and spatial information. However, CI has several shortcomings that limit its widespread utilization. A major barrier is the lengthy acquisition time, which results from large map sizes or from the poor spectral response. Other concerns include good image acquisition, from the setting of imaging parameters to the treatment of sample geometry, as well as efficient data processing.