A comparative study of optimization algorithms for wavefront shaping

Abstract

By manipulating the phase map of a wavefront of light using a spatial light modulator, the scattered light can be sharply focused on a specific target. Several iterative optimization algorithms for obtaining the optimum phase map have been explored. However, there has not been a comparative study on the performance of these algorithms. In this paper, six optimization algorithms for wavefront shaping including continuous sequential, partitioning algorithm, transmission matrix estimation method, particle swarm optimization, genetic algorithm (GA), and simulated annealing (SA) are discussed and compared based on their efficiency when introduced with various measurement noise levels.

1. Introduction

Manipulation of the phase map of a wavefront of light allows optical focusing and imaging through complex media. Wavefront shaping has been used in different high-resolution imaging modalities such as optical coherence tomography (OCT), fluorescence microscopy, two-photon microscopy (TPM), and photoacoustic microscopy (PAM) to improve their resolution or penetration depth.1,2 Wavefront shaping using computer-controlled spatial light modulators (SLMs) has been widely used in these modalities1,3,4,5,6,7,8,9,10,11 and hence, choosing an appropriate optimization algorithm for a particular modality or application is of prime importance.

When a turbid medium is illuminated with coherent light, it forms a pattern, so-called speckle. According to Maxwell’s equations, light scattering is linear and fully deterministic. Light propagation through the turbid medium can be described using the concept of the transmission matrix. In this paper, we denote the incident and outgoing scattering channels as input and output channels, respectively. Transmission matrix of a sample relates the electric field of light at an output channel to the electric field of light at an input channel4 :

Typical experimental setup for focusing light through a scattering medium includes four main components: SLM, laser, CCD camera, and processing unit.

Several phase control algorithms have been introduced for wavefront optimization.4,12,14,15,16,17,18,19,20 We have also implemented several optimization algorithms for wavefront shaping purposes.21,22,23,24,25,26,27 In this study, we compare the performance of six of these optimization algorithms: Continuous Sequential (CS) algorithm, Partitioning Algorithm (PA), Transmission Matrix (TM) estimation method, Particle Swarm Optimization (PSO) method, Genetic Algorithm (GA) and Simulated Annealing (SA) algorithm in terms of their enhancement, stability and initial convergence speed. We have developed these algorithms and evaluated them.28 The enhancement, η, defined as the ratio between the output channel intensity, to the initial average intensity of the speckle pattern, 〈I0〉. The maximum theoretical enhancement depends on the system size N, i.e., the number of input channels being optimized. In a noise-free condition with a stable turbid media, the maximum achievable enhancement is4

The algorithms are also compared under various measurement noise levels which are introduced by adding Gaussian noise with a standard deviation of 0.3〈I0〉, 0.6〈I0〉 and 〈I0〉 to the measured value at the camera. The measurement noise of 0.3〈I0〉 is comparable to experimental observations.4

2. Materials and Methods

The CS algorithm optimizes each input channel independently that requires excessive computational time especially in systems with large size.4,29,30 CS is implemented as follows:

| (1) | Cycle the phase of each of the N channels of the SLM with respect to others from 0 to 2π. | ||||

| (2) | Store the phase for which the target intensity is maximal for each segment. | ||||

At initial stages of the experiment, enhancement is negligible as compared to measurement noise levels which may lead to unstable optimization.

CS is usually performed with 10 phase samples per input channel, i.e., 10N measurements to optimize the full phase mask of the SLM.4 To make a fair comparison, the termination condition for all the other algorithms were considered to be 10 measurements per input channel (i.e., 10N measurements in total).

The PA algorithm modulates a randomly selected half of the input channels during each iteration.4 Even though this characteristic helps PA reaching a faster initial enhancement than the CS algorithm, as the PA progresses, the rate of enhancement slows down. The PA can be implemented as follows:

| (1) | Divide the segments randomly over two equally sized partitions. | ||||

| (2) | Maximize the focus by cycling the phase of one partition with respect to the other from 0 to 2π (10 phases). | ||||

| (3) | Repeat this process and randomly repartition the input channels until the termination condition (2560 measurements) is met. | ||||

Unlike other algorithms, theoretical enhancement of PA does not depend on the number of channels, but on the sample persistence time.4 This algorithm performs well when the condition, NTp = Ti is met; where Tp is the sample persistence time4 and Ti is the time required for one iteration.

In the TM method, for each input channel n, the algorithm iteratively sets the phase retardation to 0, π/2, π, and 3π/2. Next, it measures the corresponding intensities in the mth output channel as I0m, Iπ/2m, Iπm, and I3π/2m. The transmission matrix elements can be estimated as (4) up to a multiplicative factor that can be eliminated as the factors are equal for all elements of the matrix.15

By measuring the transmission matrix of a solid scattering medium for all values of m, we have engineered an opaque lens based on the matching phase mask according to (5). This technique allows focussing light efficiently at any given point after the medium.

Here, t† is the conjugate transpose matrix of the transmission matrix t and Etarget is the output target vector with a value of 1 in mth channel, and 0 on the remaining channels. TM method is operated as follows:

| (1) | For a given output channel m do steps 2–4 for all input channels: | ||||

| (2) | Set the phase retardation of the input channel n with respect to others to 0, π/2, π, and 3π/2. | ||||

| (3) | Measure the I0m, Iπ/2m, Iπm, and I3π/2m intensities, respectively. | ||||

| (4) | Set tmn=[(I0m−Iπm)/4]+i[(I3π/2m−Iπ/2m)/4]. | ||||

| (5) | Set the SLM phase map according to (5). | ||||

The TM method only requires four measurements per input channel to find the optimum phase map. I0m can be measured for all input channels with a single measurement (set all phases to zero), TM matrix will yield the optimum phase map after only (3N+1) measurements.

The PSO algorithm is a numerical stochastic optimization technique inspired by the social behavior of bird flocks searching for food.14,31,32,33 First, an initial population of phase masks (particles) is generated. Each particle is then assigned with an initial random velocity which determines how the N-dimensional search space is explored. Then, the focus intensity (fitness value) for each particle is measured. The position (phase map) of each particle is stored in pbi, and the position with the highest intensity is stored in gb. The next step is to update the velocity and position of each particle according to (6) and (7)

| (1) | Choose N initial random phase masks (particles) and random velocities. | ||||

| (2) | Evaluate the fitness (focus intensity) of each particle and set pb, store the best solution in gb. | ||||

| (3) | Update the velocity and position of the particles according to (6) and (7). | ||||

| (4) | Repeat steps 2 and 3 until the termination condition is met. | ||||

The GA method is an optimization algorithm which uses the Darwinian evolutionary principle of survival of the fittest to “evolve” toward the best solution.34 The working principle of this method starts with generating an initial population of phase masks. Phase masks are realized by assigning each input channel value to a uniform pseudorandom distribution of phase values ranging from 0 to 2π. Next, the fitness value for each mask is measured, and the population is ranked in descending order, followed by the optimization of the phase masks through breeding and mutation operations. Two parent masks (ma,pa) are randomly selected from the population where the higher probability of selection is given to higher ranked phase masks. A random binary breeding template array B is then created to start the breeding process. The input channels of the two parent masks are combined using B to create a new offspring (OS) according to: OS=ma⋅B+pa⋅(1−B). This new mask is then mutated by randomly changing the phase of a small percentage of input channels.35 The operating process steps of GA are as follows:

| (1) | Random generation of an initial population P(0) of M phase masks. | ||||

| (2) | Evaluation of the fitness of each individual P(i) where, i=0,1,2,…,M | ||||

| (3) | Selection of ma and pa from P(i) (higher the fitness value, greater the chance of selection). | ||||

| (4) | Random generation of the binary breeding template, B to combine ma and pa. | ||||

| (5) | The next generation P(i+1) breeding using the formula: OS=ma⋅B+pa⋅(1−B) and mutation of a small percentage of it. | ||||

| (6) | Repeat steps 2–6 until the termination condition is met. | ||||

The SA algorithm, a well-established stochastic search algorithm, is originated from the physical process of heating a substance beyond the melting point followed by cooling down the substance gradually until it turns into a crystalline structure exhibiting lowest energy.34,36 This algorithm has previously been used for the optimization of optical systems.37,38,39,40,41 We have also implemented this algorithm for the optimization of optical systems.34,40,42,43,44 Initially, a control parameter, T (analogous to the substance temperature) is assigned to a known value and a random phase map x is generated. After that the intensity of the focus point, E(x) corresponding to the initial phase map is stored, a portion of the input channels are perturbed by adding a certain phase to their current phase values. The new phase map of the perturbed system can be denoted as y and E(y) is stored. If E(y)>E(x), the new perturbation is accepted. Otherwise, the perturbation is might be still accepted according to (8).

After applying L numbers of perturbations, the temperature is lowered by a factor of γ. As the algorithm proceeds, the decrement in the temperature makes it greedier, unlike the circumstances at the initial stages when the temperature is still high and occasionally accepts some unfavorable states. Thus, this technique ensures that the algorithm converges to the global optimum irrespective of the initial conditions. The steps of SA are given below.

| (1) | Set T to a known initial value and generate an initial random phase mask x. | ||||

| (2) | for T>Tmin do: | ||||

| (3) | Randomly perturb x to generate a neighboring phase mask y. | ||||

| (4) | Compute the enhancement E(y), | ||||

| (5) | if E(y)>E(x), set x=y | ||||

| (6) | else: set x=y with probability exp([E(y)−E(x)]/T) | ||||

Decrease T by a factor of γ after each set of L measurements.

3. Results

All the simulations were performed in MATLAB 2015, and a Core i7 CPU with 8GB of memory has been used for the computational purpose.

A new random transmission matrix with circular Gaussian distribution, zero mean and one standard deviation was generated for each run. The simulation parameters for the algorithms are as follows: The CS algorithm and PA were applied with 10 phase samples per input channel according to Ref. 4. The TM estimation method did not involve any free parameters and was implemented as described earlier.15 The PSO was implemented with a population size of 50 and run for 50 iterations. The constriction coefficient method36 was used to set the values of w=0.73 and c1=c2=1.5. The phases were chosen to be between 0 and 2π. The velocities were limited to the range of [−2π/10,2π/10] to avoid very large steps. The population size for the GA algorithm was also set to 50, and the crossover rate was set to 0.5. The parent masks were chosen with the tournament method.35 The mutation was applied to 10 percent of the population in each generation. Phase of 0.1 of input channels (mutation rate) of the selected population was mutated by a value of 4π/10. In order to prevent the algorithm from mutating too many optimized phase modes, the mutation rate was decreased from the initial value of 0.1 to the value of 0.002 as the algorithm reaches the end of optimization. The SA algorithm was implemented with the following parameters. The initial temperature was set to one. At each step, 50% of the input channels were perturbed by π/16. According to our experiments, L=N/16 and γ=0.9 resulted in the best performance for low noise environments (<0.5〈I0〉) and L=N/8 and γ=0.99 was suitable for noisy environments (>0.5〈I0〉). Note that GA and SA perturbation rates are value of 0.1 to the value of 0.002 as the algorithm reaches the end of optimization. The SA algorithm was implemented with the following parameters. The initial temperature was set to one. At each step, 50% of the input channels were perturbed by π/16. According to our experiments, L=N/16 and γ=0.9 resulted in the best performance for low noise environments (<0.5〈I0〉) and L=N/8 and γ=0.99 was suitable for noisy environments (>0.5〈I0〉).

Note that GA and SA performance are sensitive to the parameters of the algorithm. One might need to fine-tune the parameters according to the number of input channels and the persistence time of the sample.

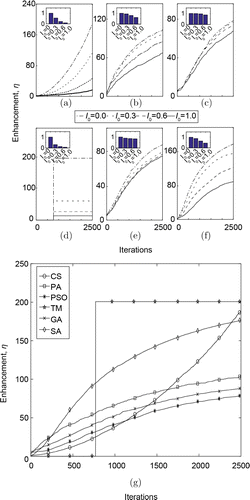

Figure 1 presents the simulation results of the algorithms explained earlier when introduced to different noise levels with N=256. CS optimizes each input channel independently. Thus, CS enhancement initially grows slowly as shown in Fig. 1(a). CS is sensitive to noise and cannot recover in very noisy environments. CS is a very straightforward algorithm and can be easily implemented. The performance of CS, unlike some other algorithms presented in this paper, does not depend on the wise choice of the algorithm parameters.

Fig. 1. Performance of the (a) Continuous sequential, (b) Partitioning algorithm, (c) Particle swarm optimization, (d) Transmission matrix method, (e) Genetic algorithm, and (f) Simulated annealing for N=256 under different noise conditions comparing the enhancement of the focus to the number of measurements with varying noise levels. A Gaussian noise at 30%, 60% and 100% of the initial average intensity 〈I0〉 was added for each algorithm. (g) Performance of the algorithms in terms of efficiency has been compared without any noise added. The curves are averaged over 20 runs. The area under the curve for different noise level is shown in the insets of each figure (a)–(f).

As shown in Fig. 1(b), PA has a very fast initial increase in the enhancement but slows down as the optimization progresses. Due to this phenomenon, PA can easily recover from disturbances due to instabilities in the sample and the surrounding environment. The performance of this algorithm in noisy environments does not decrease substantially. However, PA fails to reach beyond a certain enhancement limit. This disadvantage is more pronounced in systems with higher N. PA is simple and can easily be implemented as it requires limited numbers of parameters to be defined.

PSO algorithm possesses an efficient global information sharing mechanism. This helps to exhibit a robust performance in noisy environments as it is evident in Fig. 1(c). However, the enhancement is limited in this case which might be due to the complex and non-convex search space of this problem. Some studies had suggested that the performance of this algorithm can be improved by applying a mutation similar to GA.45

Estimation of the transmission matrix of a medium is an effective approach to focus through a medium. As it is shown in Fig. 1(d), this method offered the maximum enhancement (200) in the shortest time as compared to other algorithms under a noise-free condition. However, the performance deteriorates considerably in noisy conditions. TM method can also be used to focus the light on multiple targets simultaneously by setting Etarget appropriately in (5).

As illustrated in Fig. 1(e), GA performs robustly in the presence of noise. It has also proven to perform well when exposed to a noise level of 2〈I0〉. As GA optimizes an entire mask concurrently, the fitness function measurement is capable of quickly rising above the noise level and showing a fast-initial enhancement growth.

According to Fig. 1(f), the SA algorithm shows an initial fast increase in the enhancement. This makes this algorithm suitable in cases where a fast optimization and high enhancement is necessary, especially when the samples have low persistence time. SA performs well in various system sizes, and its advantage is more apparent in larger systems.34

Table 1 provides a summary of the key features of these six algorithms. Here, simplicity Si represents the implementation difficulty level as well as complexity in tuning the algorithm parameters. The ideal η is the enhancement percentage in a noise-free environment. Initial η is the enhancement percentage after 0.3 of the total time which will be 3∗N measurements (750 for N=256). This parameter determines the recovery speed from disturbances and noises and the potential to yield adequate results in a short time. The enhancement percentage is calculated by dividing the enhancement obtained from the simulation by the theoretical value η=200 for N=256 based on (3). Stability St is the average enhancement percentage with 0.3〈I0〉 and 0.6〈I0〉 measurement noise levels. An overall goodness factor, OGF is considered as the average of ideal η, stability and initial η to determine the optimum algorithm for wavefront shaping.

| Type | Si | Ideal η (%) | Initial η (%) | St (%) | OGF (%) |

|---|---|---|---|---|---|

| CS | Easy | 100 | 6 | 42 | 49 |

| PA | Easy | 50 | 23 | 46 | 40 |

| TM | Medium | 100 | 37 | 23 | 53 |

| PSO | Medium | 40 | 16 | 38 | 31 |

| GA | Difficult | 45 | 20 | 40 | 35 |

| SA | Difficult | 85 | 30 | 69 | 61 |

4. Discussion and Conclusion

In this paper, we have compared six optimization algorithms including CS, PA, TM, PSO, GA, and SA for focusing light through a turbid medium based on the following aspects: ideal enhancements, stability, initial enhancement growth rate. The performance of the algorithms was also explored under different noise conditions. CS and TM operate on the channel individually and rely on detecting small improvements in the target focus. Hence, the resiliency to the measurement noise is poor. CS is more suitable for low noise (<0.3〈I0〉) environments, and the TM method yields the most optimum phase map in the shortest time when the environment is noise-free. PA, PSO, GA, and SA optimize channels in parallel. As a result, they have a rapid initial enhancement growth. PA is more suitable for smaller system sizes where a fast-initial enhancement increase is desired. PSO is robust and noise tolerant; however, confined to less enhancements. GA shows its advantage in very noisy (>0.3〈I0〉) environments. SA presents an adequate performance in mid-range noisy environments (0.3〈I0〉 to 0.6〈I0〉).

Although in the simulation studies performed, we tried to mimic practical environments, the experiments are still ideal. Having said that it has been shown that the simulations that have been performed in a similar manner as phantom experiments have a good qualitative agreement with each other. Furthermore, the initial enhancement increase, and the longtime convergence behavior are in good correspondence with the experimental results.3 The only significant difference is that in most studies 20–50% higher enhancement reached in the simulations.3,8 Therefore, phantom studies can be performed to compare and experimentally verify the effectiveness of the presented algorithms.

Moreover, these algorithms can be applied to binary amplitude wavefront shaping which is a similar method for controlling the transmission of light. Another novel method is Binary phase wavefront shaping which achieves a higher enhancement than binary amplitude wavefront shaping, and it also achieves a higher speed than full phase wavefront shaping, which is crucial for in vivo applications where the sample persistence time is very short.11,46

With the advancement of the development of the SLM, the wavefront shaping will be more spread out and can be used in optical imaging techniques such as optical coherence topography for deep structural imaging.44,47,48

Acknowledgments

Special thanks go to Chris Haig and Daniela Maldonado from Hamamatsu Photonics for their fruitful discussions.