Automated cone photoreceptor cell identification in confocal adaptive optics scanning laser ophthalmoscope images based on object detection

Abstract

Cone photoreceptor cell identification is important for the early diagnosis of retinopathy. In this study, an object detection algorithm is used for cone cell identification in confocal adaptive optics scanning laser ophthalmoscope (AOSLO) images. An effectiveness evaluation of identification using the proposed method reveals precision, recall, and F1-score of 95.8%, 96.5%, and 96.1%, respectively, considering manual identification as the ground truth. Various object detection and identification results from images with different cone photoreceptor cell distributions further demonstrate the performance of the proposed method. Overall, the proposed method can accurately identify cone photoreceptor cells on confocal adaptive optics scanning laser ophthalmoscope images, being comparable to manual identification.

1. Introduction

Vision is essential for human activity and thriving in modern life. Therefore, its decline and loss due to eye diseases can cause major losses to individuals, families, and society. In recent years, retinopathy has become increasingly common and is now a major cause of blindness. Nevertheless, most patients with retinopathy can avoid blindness through early diagnosis and treatment. Retinopathy is mainly diagnosed by observing pathological changes and the degree of damage to the retina through imaging. The main instruments used for retinal imaging are the fundus camera,1,2 scanning laser ophthalmoscope (SLO),3,4 and optical coherence tomography system,5,6 which only achieve tissue-level resolution under ocular aberrations. For early diagnosis of retinopathy, adaptive optics (AO) has been introduced to correct ocular aberrations during retinal imaging.7,8,9 By integrating AO, the fundus camera,7 SLO,8 and optical coherence tomography system9 can achieve cellular-level resolution. Among these instruments, the adaptive optics scanning laser ophthalmoscope (AOSLO) provides real-time and clear imaging of cone photoreceptor cells.8,10,11,12,13 Moreover, AOSLO can contribute to cone photoreceptor cell identification, which is important for the early diagnosis of retinopathy.14,15,16,17 Although manual cone photoreceptor cell identification on AOSLO images is reliable, it is time-consuming and subjective. Therefore, many semi-automatic and automatic identification algorithms have been developed.18,19,20,21,22,23,24,25,26,27,28,29,30,31,32 These algorithms are based on centroid detection,18,19,20,21 segmentation of the cone photoreceptor cells,24,25,26,27,28,31,32 or image patch classification followed by thresholding of the probability map.22,23,29,30

In this study, we develop a cone photoreceptor cell identification algorithm based on a different technique, object detection, which to the best of our knowledge is applied to cone photoreceptor cell identification for the first time, and we apply it to confocal AOSLO images. The object detection algorithm provides not only the centers but also the bounding boxes of cone photoreceptor cells, which may be helpful for the diagnosis of retinopathy, and its computational complexity is generally lower than that of segmentation algorithms. Among the available object detection algorithms, we adopt YOLO (You Only Look Once)33 as the backbone for cone photoreceptor cell identification, given its high performance. To confirm the effectiveness of the proposed method, its identification results were compared with manual identification results in terms of precision, recall, and F1-score in the confocal AOSLO images. To further demonstrate the performance of the proposed method, we obtained cone photoreceptor cell detection and identification results from confocal AOSLO images with different cone photoreceptor cell distributions.

2. Methods

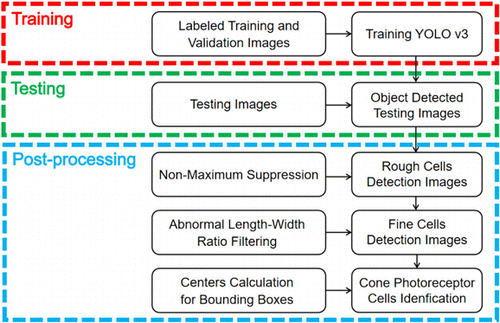

The proposed method was evaluated on a publicly available dataset34 that contains 840 confocal AOSLO images. The ground truths for training were taken from the results of a graph-based method.25 They contain not only centroid selections but also very accurate cone photoreceptor cell segmentation results, which can be used to generate circumscribed rectangles of the cone photoreceptor cells for training. We used 720 confocal AOSLO images for training, 80 for validation, and the remaining 40 for testing. The proposed cone photoreceptor cell identification algorithm comprises the three main steps illustrated in Fig. 1: training, testing, and post-processing. First, labeled training and validation datasets, which contain confocal AOSLO images34 and bounding boxes of cone photoreceptor cells generated as circumscribed rectangles of the segmentation results of the graph-based method,25 are used to train the YOLO v3 model.33 Second, the testing confocal AOSLO images are input to the trained YOLO v3 model33 to generate the object detection results. Third, the object detection results are sequentially processed using nonmaximum suppression35 and abnormal length–width ratio filtering to obtain the final cone photoreceptor cell detection results. The cone photoreceptor cells are then individually identified by calculating the centers of the bounding boxes in these outputs.

Fig. 1. Diagram of the proposed cone photoreceptor identification method in confocal AOSLO images based on object detection.
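
The generation of bounding boxes from segmentation results can be illustrated with a minimal sketch. It assumes a hypothetical input format, a 2D label mask in which 0 is background and each positive integer marks one segmented cell; the function names `circumscribed_rectangles` and `box_center` are illustrative and are not taken from the original implementation.

```python
def circumscribed_rectangles(label_mask):
    """Return {label: (x_min, y_min, x_max, y_max)} for each nonzero label.

    label_mask is a 2D list of ints (hypothetical format): 0 is background
    and each positive integer marks the pixels of one segmented cell.
    Coordinates are inclusive pixel indices.
    """
    boxes = {}
    for y, row in enumerate(label_mask):
        for x, label in enumerate(row):
            if label == 0:
                continue
            if label not in boxes:
                boxes[label] = [x, y, x, y]
            else:
                b = boxes[label]
                b[0] = min(b[0], x)
                b[1] = min(b[1], y)
                b[2] = max(b[2], x)
                b[3] = max(b[3], y)
    return {label: tuple(b) for label, b in boxes.items()}


def box_center(box):
    """Center (x, y) of a box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)


# Toy mask with two segmented cells (labels 1 and 2).
mask = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 2, 2],
]
boxes = circumscribed_rectangles(mask)
center_1 = box_center(boxes[1])
```

The same center computation applies to the detected bounding boxes at the end of the pipeline, where each cell is identified by the center of its box.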

2.1. Training

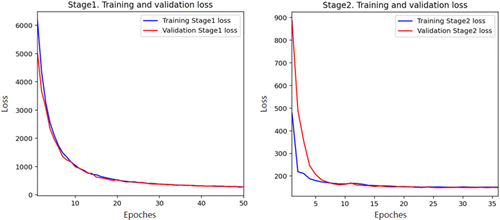

First, k-means clustering36 was used to determine the anchor boxes (nine clusters across three scales) that YOLO v3 requires as inputs. The sizes of the nine clusters determined by k-means in the training datasets are listed in Table 1. Second, YOLO v3,33 pretrained on the COCO dataset,37 which is a large labeled dataset for object detection and image segmentation, was further trained on the labeled confocal AOSLO images with the anchor boxes. Training consisted of two stages. In the first stage, DarkNet-53, which is the backbone of YOLO v3, was frozen, and the rest of the YOLO v3 architecture33 was trained with a batch size of 32 for 50 epochs. In the second stage, DarkNet-53 was unfrozen, and the complete YOLO v3 was trained using the same datasets as in the first stage with a batch size of 8 for 50 epochs. Early stopping38 and an adaptive learning rate39 were applied during training. The loss curves of the training and validation process are shown in Fig. 2. As shown in the figure, the loss decreased and then converged in both stages, and early stopping occurred at the 36th epoch of the second stage.

Table 1. Sizes of the nine clusters determined by k-means in the training datasets.

| Cluster | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| Size (pixels) | 30 × 30 | 24 × 30 | 36 × 36 | 36 × 42 | 36 × 30 | 42 × 36 | 42 × 42 | 48 × 48 | 54 × 48 |

Fig. 2. Loss curves of the training and validation process.
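
The anchor-box clustering step can be sketched as follows. The text states only that k-means clustering was used, so the details here are assumptions rather than the configuration actually used: the similarity measure is the intersection over union (IoU) of width–height pairs, which is common in YOLO pipelines, and the initialization is a simple deterministic one. The names `wh_iou` and `kmeans_anchors` are hypothetical.

```python
def wh_iou(a, b):
    """IoU of two boxes given as (width, height), aligned at a shared corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(sizes, k, max_iter=100):
    """Cluster (width, height) pairs into k anchors using IoU as similarity.

    Assumption: the similarity measure and the initialization are choices
    made for this sketch, not details reported in the text.
    """
    sizes = sorted(sizes, key=lambda s: s[0] * s[1])
    # Deterministic initialization: k boxes evenly spaced over the sorted list.
    step = len(sizes) / k
    centers = [sizes[int(i * step)] for i in range(k)]
    assignment = None
    for _ in range(max_iter):
        # Assign every box to the anchor with which it has the highest IoU.
        new_assignment = [
            max(range(k), key=lambda j: wh_iou(s, centers[j])) for s in sizes
        ]
        if new_assignment == assignment:
            break  # Assignments are stable, so the clustering has converged.
        assignment = new_assignment
        # Move each anchor to the mean width and height of its members.
        for j in range(k):
            members = [s for s, a in zip(sizes, assignment) if a == j]
            if members:
                centers[j] = (
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                )
    return sorted(centers, key=lambda c: c[0] * c[1])


# Toy example with two obvious size groups (widths and heights in pixels).
toy_sizes = [(10, 10), (11, 10), (10, 11), (30, 30), (31, 29), (29, 31)]
anchors = kmeans_anchors(toy_sizes, k=2)
```

For YOLO v3, the same routine would be called with `k=9` on the widths and heights of all training bounding boxes, and the resulting anchors would be divided among the three detection scales.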

2.2. Testing and post-processing

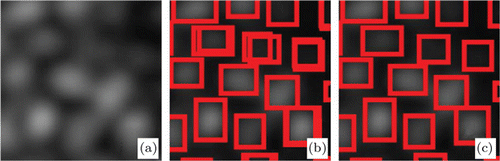

For testing, the trained YOLO v3 was used to obtain the object detection results for each testing confocal AOSLO image. These object detection results contain the initial cone photoreceptor cell detections, which include over-detections, as in other YOLO v3 applications. To address the over-detection problem, we first applied nonmaximum suppression35 to the object detection results. Then, because a cone photoreceptor cell has an approximately circular shape, the length–width ratio of its bounding box should be close to 1. Accordingly, we filtered out object detection results with abnormal length–width ratios after nonmaximum suppression.35 Specifically, object detection results with length–width ratios below 1/3 or above 3 were discarded, yielding the final cone photoreceptor cell detection results. An example of nonmaximum suppression35 and abnormal length–width ratio filtering on a representative image patch is shown in Fig. 3. As shown in the figure, the two processing steps largely solve the over-detection problem. To individually identify the cone photoreceptor cells, the centers of the bounding boxes in the final detection results were calculated.

Fig. 3. Effectiveness of post-processing: (a) Input confocal AOSLO image, (b) original object detection results, and (c) object detection results after nonmaximum suppression and abnormal length–width ratio filtering.
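
The post-processing chain described above (nonmaximum suppression, length–width ratio filtering, and center calculation) can be sketched in a few lines. This is an illustrative sketch, not the original implementation: the IoU threshold of 0.5 is an assumed value that the text does not report, the box format `(x_min, y_min, x_max, y_max)` and all function names are hypothetical, and only the ratio limits of 1/3 and 3 come from the text.

```python
def iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_maximum_suppression(detections, iou_threshold=0.5):
    """Greedy NMS over (box, score) pairs; the threshold is an assumed value."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep a box only if it does not overlap strongly with a kept box.
        if all(iou(box, k[0]) <= iou_threshold for k in kept):
            kept.append((box, score))
    return kept


def filter_by_ratio(detections, low=1 / 3, high=3.0):
    """Discard boxes whose length-width ratio is below 1/3 or above 3."""
    result = []
    for box, score in detections:
        width, height = box[2] - box[0], box[3] - box[1]
        if width <= 0 or height <= 0:
            continue  # Degenerate box with no area.
        if low <= height / width <= high:
            result.append((box, score))
    return result


def box_centers(detections):
    """Centers (x, y) of the remaining boxes, one per identified cell."""
    return [((b[0] + b[2]) / 2.0, (b[1] + b[3]) / 2.0) for b, _ in detections]


# Toy detections: (box, confidence score).
raw = [
    ((10, 10, 40, 40), 0.9),
    ((12, 11, 42, 41), 0.8),    # Duplicate of the first box, removed by NMS.
    ((60, 10, 70, 50), 0.7),    # Ratio 40/10 = 4, removed by the ratio filter.
    ((80, 80, 110, 112), 0.6),
]
final = filter_by_ratio(non_maximum_suppression(raw))
cell_centers = box_centers(final)
```

Because the ratio limits are reciprocal, the result does not depend on whether the ratio is taken as height over width or width over height.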

3. Results

The computations took 16.4 h for training and 189.7 s for testing and post-processing. These computation times were obtained on a computer running 64-bit Python and equipped with an Intel Xeon E5-2620 processor (2.10 GHz), 32 GB of memory, and an NVIDIA GeForce GTX 1080Ti graphics card.

To confirm the effectiveness of the proposed method and compare it with similar methods,24,25,26,29,31,32 the identification results were evaluated by calculating the overall precision, recall, and F1-score on the testing dataset, considering manual identification as the ground truth. The results are listed in Table 2. The proposed method achieves a higher F1-score than our previous methods,24,26,31,32 which confirms its effectiveness. However, it does not quite reach the performance of the graph-based method25 and the confocal convolutional neural network.29

Table 2. Precision, recall, and F1-score of the proposed method and similar methods.

| Method | Precision | Recall | F1-score |
|---|---|---|---|
| Proposed method | 95.8% | 96.5% | 96.1% |
| Confocal convolutional neural network29 | 99% | 99.1% | 99% |
| Graph-based method25 | 98.2% | 98.5% | 98.3% |
| DeepLab-based method31 | 96.7% | 94.6% | 95.7% |
| Watershed-based method24 | 93.2% | 96.6% | 94.9% |
| k-means clustering-based method32 | 93.4% | 95.2% | 94.3% |
| Superpixel-based method26 | 80.1% | 93.5% | 86.3% |
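
For reference, the three metrics follow the standard definitions based on the numbers of true positives, false positives, and false negatives. The sketch below uses hypothetical counts for illustration, since the text reports neither the counts nor the rule for matching detected centers to manually identified cells. It also checks that the F1-score reported for the proposed method is consistent with its precision and recall.

```python
def precision_recall_f1(true_positives, false_positives, false_negatives):
    """Standard precision, recall, and F1-score from detection counts."""
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def f1_score(precision, recall):
    """F1-score as the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, for illustration only (not taken from the study).
p, r, f1 = precision_recall_f1(true_positives=90, false_positives=10,
                               false_negatives=5)

# Consistency check on the reported values for the proposed method.
reported_f1 = f1_score(0.958, 0.965)
print(round(reported_f1, 3))  # 0.961
```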

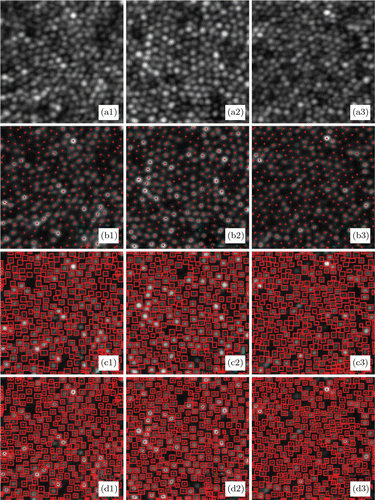

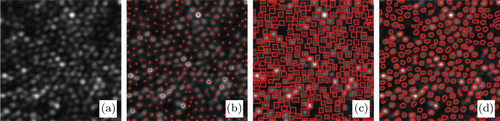

To illustrate the identification performance of the proposed method, Fig. 4 shows the cone photoreceptor cell detection and identification results of three typical examples with different cone photoreceptor cell distributions from the testing dataset. The cone photoreceptor cells were identified on the three confocal AOSLO images with high accuracy using the proposed method.

Fig. 4. Results of the proposed algorithm with different cone photoreceptor cell distributions: (a) Input confocal AOSLO images, (b) identification results, (c) detection results, and (d) identification and detection results which are the combination of (b) and (c).

4. Discussion

To the best of our knowledge, this is the first method based on object detection to identify cone photoreceptor cells, and it has achieved highly accurate results. Although we adopted YOLO v3, other object detection algorithms may be more suitable for cone photoreceptor cell identification. Thus, other object detection algorithms should be evaluated to determine those with possibly higher identification accuracy.

In previous methods,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32 two types of results can be obtained: identification results18,19,20,21,22,23 [Fig. 5(b)] and segmentation results24,25,26,27,28,29,30,31,32 [Fig. 5(d)]. In this paper, we provide a third type of result: object detection results [Fig. 5(c)]. These results provide more geometric information about cone photoreceptor cells than identification results because they include the height and width of each cell, which may be helpful for the diagnosis of retinopathy. Although segmentation results provide even more geometric information, accurate segmentation generally requires more complex computation, which results in longer computation times.

Fig. 5. Three types of results: (a) Input confocal AOSLO images, (b) identification results, (c) object detection results (our method), and (d) segmentation results.

Like every application of deep learning object detection, the proposed method is expected to fail when the intensity distribution of cone photoreceptor cells in the testing dataset differs too much from that in the training dataset. Although we cannot currently apply our method to pathological images, vessel shadow images, rod and cone photoreceptor cell images, or low-quality images owing to the lack of a large dataset, we believe that it can provide accurate object detection results given a sufficient number of such images for training.

5. Conclusions

We propose an automated cone photoreceptor cell identification method based on object detection. The proposed method achieved precision, recall, and F1-score of 95.8%, 96.5%, and 96.1% considering manual identification as the ground truth. To illustrate the performance of the proposed method, cone photoreceptor cell identification and detection results of three typical examples with different cone photoreceptor cell distributions were obtained. The experimental results show the effectiveness and high accuracy of the proposed method for cone photoreceptor cell detection and identification. The proposed method may assist health professionals in the early diagnosis of retinopathy.

Conflict of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

Acknowledgments

The authors wish to thank the anonymous reviewers for their valuable suggestions. This work was supported in part by the Natural Science Foundation of Jiangsu Province (BK20200214); National Key R&D Program of China (2017YFB0403701); Jiangsu Province Key R&D Program (BE2019682 and BE2018667); National Natural Science Foundation of China (61605210, 61675226, and 62075235); Youth Innovation Promotion Association of Chinese Academy of Sciences (2019320); Frontier Science Research Project of the Chinese Academy of Sciences (QYZDB-SSW-JSC03); Strategic Priority Research Program of the Chinese Academy of Sciences (XDB02060000); and Entrepreneurship and Innovation Talents in Jiangsu Province (Innovation of Scientific Research Institutes).