Observing single cells in whole organs with optical imaging

Abstract

Cells are the basic units of human organs, yet they are not fully understood. Revolutionary advances in optical imaging have allowed us to observe single cells in whole organs, revealing the complicated composition of cells together with their spatial information. In this review, we therefore revisit the principles of optical contrast related to the biomolecules in cells and the optical techniques that transform optical contrast into detectable optical signals. Then, we describe how optical imaging achieves three-dimensional spatial discrimination in biological tissues. Because of the milky appearance of tissues, spatial information is blurred deep inside the whole organ. Fortunately, strategies developed in the last decade can circumvent this issue and lead us into a new era of investigating cells with their original spatial information.

1. Introduction

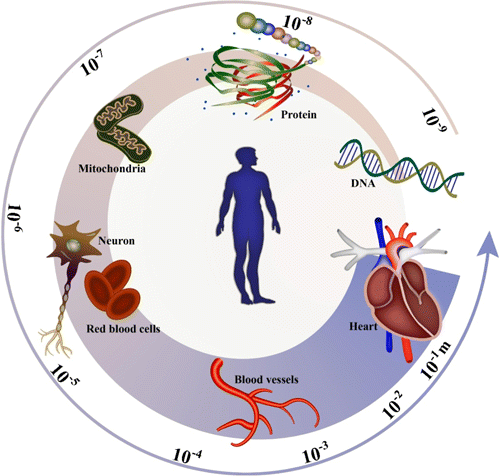

The human body is a complex system with a vast number of unsolved mysteries. Billions of cells of diverse types make up organs such as the brain, heart, liver, and lung, which perform different functions together to sustain life.1 Although cells are the basic units of the human body, they are neither simple nor fully understood. A cell consists of parts such as a membrane and cytoplasm, which contain lipids, proteins, nucleic acids, and many other biomolecules. Nucleic acids are mainly used for the storage and expression of inherited information in cells, which fundamentally determines the function of the cell. After gene expression, the generated proteins form the cytoskeleton of cells and perform various specific functions. Lipids constitute a variety of membranes in cells and are also widely involved in cellular metabolism and signal transduction. As shown in Fig. 1, investigating these biomolecules will expand our knowledge of the composition of different cells and help us explore the diversity of cell types and comprehend the function of cells in the organ.2

Fig. 1. Complicated composition of cells in the organ at different scales.

Traditional cell studies tend to classify and analyze cell populations directly, ignoring spatial information. However, spatial information is also a critical dimension to consider. In this review, spatial information refers to three aspects: the morphology, the location, and the connections of cells. For centuries, the morphology of cells has been studied by isolating them for observation, not only in biological research but also in diagnosis. Normal red blood cells are biconcave, which allows them to exchange oxygen and carbon dioxide efficiently. They are also flexible enough to pass through microvessels only a few microns in diameter. Moreover, red blood cells become sickle-shaped in the inherited disorder sickle cell anemia. Morphology is therefore closely related to the physiological and pathological condition of cells. The location, which indicates a cell’s relative position in the whole organ, typically suggests the cell’s function. Take the brain as an example. In the late 19th century, the language-related region of the human brain cortex was discovered for the first time.3 Since then, neurobiologists have divided the brain into hundreds of areas related to different functions, from sensation, vision, and hearing to emotion and memory.4 Furthermore, cells cannot perform their normal functions independently; they rely on cell–cell and cell–matrix interactions.5 The billions of neurons throughout the brain cannot work without connections.6 Each is involved in neuronal circuits based on synaptic signal exchange.7 The patterns of synaptic connections in neuronal circuits determine their specific functions, which combine to produce the brain’s normal function.

To acquire the composition of cells together with spatial information in the whole organ, we need contrast to make these biomolecules visible and spatial resolution to discriminate them, both of which can be provided by modern biomedical imaging. In general, biomedical imaging uses waves, including electromagnetic and mechanical waves, or particles, including electrons and neutrons, to reveal features of biological objects that are not directly visible to our eyes.8 Biomedical optical imaging, which covers the photon spectrum from ultraviolet to near-infrared, can fulfill our requirements for contrast and resolution.9 On the one hand, light within this spectrum provides many different contrasts, depending on the detectable interactions between cells and photons. On the other hand, photons within this spectrum can be focused into a submicron spot, which matches the spatial resolution needed to observe cells. Therefore, this review focuses on three questions. First, how can we optically produce contrast to make biological macromolecules visible? Second, how can we resolve biomolecules at optical resolution? Finally, how can we investigate single cells in whole organs?

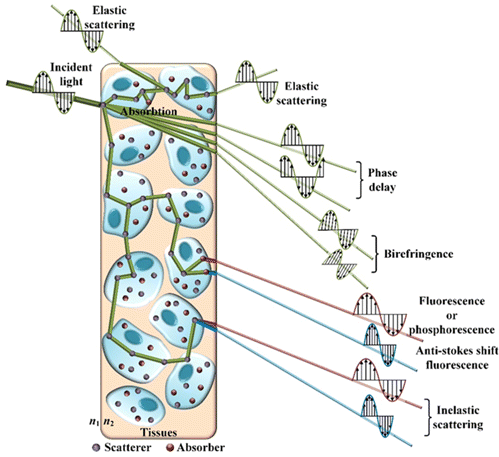

2. Making the Biomolecules Visible

Passive imaging modalities, such as thermal imaging with near-infrared light10 and bioluminescence imaging,11 depend on detecting light emitted by the object. In contrast, active imaging methods are based on detecting changes in the probing light after it interacts with highly complex biological tissues. Photons mainly interact with tissues through absorption and scattering, which can be explained with either wave theory or particle theory. Viewed as an electromagnetic wave, light leaves a source with an initial phase, wavelength, vibration direction, propagation direction, and radiant intensity.12 The interaction between photons and tissue changes these properties as the light passes through the tissue (Fig. 2), and the changes can be used to identify different biomolecules on spatial and temporal scales.13 Considering the heterogeneity of tissues, some of these changes are inevitably interrelated. For instance, red blood cells absorb and scatter part of the photons, which may change the intensity and direction of the incident light and produce new photons with different wavelengths. However, we will focus on the dominant outcome of each interaction between photons and tissues that provides contrast, and discuss these outcomes separately.

Fig. 2. Interactions between light and biological tissues.

2.1. Changing the radiant intensity of the incident light

The absorption of incident light results in photon annihilation and energy transfer to the absorbing chromophore.14 The energy transferred by one photon can promote an electron from a low-energy orbital to an unoccupied orbital of higher energy, which means that a chromophore in the ground state becomes excited. The transfer of energy can also be expressed as a change in the intensity of the incident light, which decays exponentially with the product of the molar absorption coefficient, molar concentration, and transmission length according to the Beer–Lambert law.15 In the human body, hemoglobin in red blood cells is the primary optical absorber of visible light.16 Heme, a porphyrin compound in hemoglobin, dominates the optical absorption and is responsible for binding and exchanging oxygen and carbon dioxide for the human body.17 The absorption is mainly located in the Soret band (around 420 nm) and the Q-band (540–580 nm),18 determined by the corresponding excited states, which is what makes our blood red. It is therefore possible to monitor the hemoglobin concentration in tissues, which reflects the blood volume. Furthermore, the absorption spectra of oxygenated and deoxygenated hemoglobin differ in visible light, allowing us to obtain the ratio between them and thus reveal the blood oxygen saturation.19 In addition to hemoglobin, chromosomes and lipids are good absorbers in the ultraviolet and infrared spectra.
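The Beer–Lambert relation and the two-wavelength estimate of oxygen saturation described above can be sketched in a few lines of Python. This is only an illustration: the decadic form of the law is assumed, the function names are hypothetical, and the extinction coefficients used in any call must come from tabulated hemoglobin spectra, which are not given in this review.

```python
def transmitted_fraction(epsilon, concentration, path_length):
    """Return I/I0 from the Beer-Lambert law (decadic form, an assumption).

    epsilon: molar absorption coefficient (L mol^-1 cm^-1)
    concentration: molar concentration (mol L^-1)
    path_length: transmission length (cm)
    """
    absorbance = epsilon * concentration * path_length
    return 10.0 ** (-absorbance)


def oxygen_saturation(absorbances, eps_hbo2, eps_hb, path_length):
    """Estimate sO2 from absorbances measured at two wavelengths.

    absorbances: (A1, A2) at wavelengths 1 and 2
    eps_hbo2, eps_hb: (eps at wavelength 1, eps at wavelength 2) for
        oxygenated and deoxygenated hemoglobin (caller-supplied values)
    Solves A_i = (eps_hbo2[i] * c_hbo2 + eps_hb[i] * c_hb) * path_length
    with Cramer's rule and returns c_hbo2 / (c_hbo2 + c_hb).
    """
    a1, a2 = absorbances
    det = eps_hbo2[0] * eps_hb[1] - eps_hbo2[1] * eps_hb[0]
    if det == 0:
        raise ValueError("the two wavelengths do not separate the two species")
    c_hbo2 = (a1 * eps_hb[1] - a2 * eps_hb[0]) / (det * path_length)
    c_hb = (eps_hbo2[0] * a2 - eps_hbo2[1] * a1) / (det * path_length)
    return c_hbo2 / (c_hbo2 + c_hb)
```

The two wavelengths should be chosen where the oxygenated and deoxygenated spectra differ clearly; otherwise the determinant approaches zero and the estimate becomes unstable.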

2.2. Changing the propagation direction of the incident light

The incident photons change their initial direction through elastic collisions with the tissue, a process called elastic scattering, in which no energy is transferred. Usually, elastic scattering can be modeled by Mie theory, which describes the interaction of photons with particles whose diameter is comparable to the wavelength,20 approximately the cellular scale in biological tissues. Rayleigh theory, on the other hand, describes scattering by particles much smaller than the wavelength of the photons, approximately the biomolecular scale, and is a reduced version of Mie theory.9 The biomolecules, organelles, cells, and intercellular substances in tissues have different refractive indices and sizes. That is why biological tissues are usually called turbid media: the photons are highly scattered, and their directions become less and less related to their initial incident directions as they travel deeper into the tissue.21 This is also why we can see only the veins beneath the skin, while the deeper arteries are usually invisible. Neurons in the nervous system communicate via neurotransmitters regulated by the action potential. During the propagation of the action potential, the dipoles in the membrane reorient.22 This changes the membrane’s refractive index and thereby influences the optical scattering.23

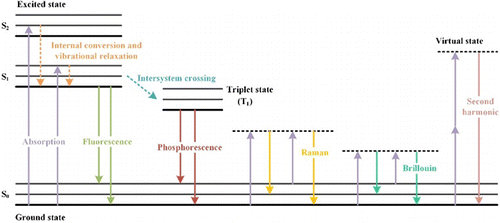

2.3. Changing the wavelength of the incident light

A change in wavelength implies that the incident light changes its color as it passes through the tissue. This phenomenon can be attributed to several causes, including optical absorption, inelastic scattering, harmonic effects, and the Doppler effect. In all cases, energy is transferred between the incident light and the tissue (Fig. 3). The wavelength becomes longer after an energy loss and shorter after an energy gain. Optical absorption leads to the excitation of the chromophore. In the previous part, we focused on the attenuation of the incident light caused by the annihilation of photons. However, the energy of the photons is transformed into heat and new photons, which the Jablonski diagram24 describes as nonradiative and radiative relaxation of the excited chromophore, respectively. The emission of a new photon as the chromophore returns to the ground state is known as photoluminescence.25 The new photon’s wavelength is usually longer than that of the absorbed photon because of vibrational relaxation before de-excitation; this increase in wavelength is called the Stokes shift. Fluorescence is the most common form of photoluminescence in tissues. Flavins, lipofuscins, elastin, and many other biomolecules can emit strong fluorescence in the visible range.26 Oxidative phosphorylation is the primary pathway for producing adenosine triphosphate, which is regarded as the energy currency of cells.27 In this pathway, nicotinamide adenine dinucleotide (NADH) is the key biomolecule responsible for transporting electrons to oxygen.28 Therefore, the fluorescence emitted by NADH can reveal the tissue’s redox state.29 Phosphorescence, produced when the chromophore returns from the triplet state to the ground state, has a longer wavelength and a longer lifetime than fluorescence.
In the human body, we can observe phosphorescence from horny and calcareous materials with a lifetime of tens of seconds.30 Chromophores can also produce photons with a shorter wavelength than the incident light, known as anti-Stokes shift fluorescence. Multiphoton absorption is a typical source of the anti-Stokes shift: several photons are absorbed simultaneously, and a new photon with a shorter wavelength is emitted. The fluorescent biomolecules mentioned above can usually also be excited by multiphoton absorption.31,32,33 Because the probability of simultaneously absorbing several photons is extremely low, fluorescence induced by multiphoton absorption is much less efficient.

Fig. 3. Jablonski diagram of fluorescence, phosphorescence, Raman scattering, Brillouin scattering, and second harmonic.

Inelastic scattering in tissues changes both the direction and the wavelength of the incident light. We introduce two types of inelastic scattering in tissues, Raman scattering and Brillouin scattering. Raman scattering is similar to Rayleigh scattering except that the wavelength changes. As described in Fig. 3, there is a virtual state in which the incident photon’s energy is absorbed without being retained, and a new photon is immediately created.34,35,36 Both Stokes and anti-Stokes wavelength shifts occur in Raman scattering, and the shifts are determined only by the differences between the vibrational or rotational energy states of the biological macromolecules. Sphingomyelin is the dominant sphingolipid compound in cell membranes. It is also the backbone of the myelin sheath of nerve fibers, which protects the fibers and insulates the transmitted electrical impulses. The Raman spectra of sphingomyelin include some unique bands, such as those at 1670 and 1654 cm−1, determined by C=O stretching coupled with N–H bending vibration.37 Therefore, Raman spectra can be regarded as a “fingerprint” of sphingomyelin. They can even differentiate sphingomyelin from other phospholipids or determine their ratio. Besides lipids, Raman scattering can be used to identify proteins, carbohydrates, and many metabolic products.38 It is worth mentioning that the Stokes shift in Raman scattering is usually smaller than that in fluorescence because it depends on rotational or vibrational transitions. Brillouin scattering is an inelastic process that can be described as the interaction of incident light with the so-called acoustic phonons of the material.39 The thermally induced phonons act as a moving optical grating whose behavior is related to the mechanical properties of the material. Because of the Doppler effect, they therefore generate Stokes and anti-Stokes light.
Compared to Raman scattering, Brillouin scattering has a much smaller Stokes shift and intensity, since the energy of the phonons and the interaction probability are extremely low.40 Brillouin scattering has been investigated in materials science for decades. However, exactly which mechanical properties it measures in biological tissues is still debated. Pioneering works have shown that it is sensitive to the cytoskeleton41 and the extracellular matrix, both of which provide the supporting framework for cells and tissues.
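Raman bands such as the 1670 cm−1 band quoted above are given as wavenumber shifts relative to the excitation light, so the observed wavelength depends on the laser used. The following sketch converts between the two; the function names are illustrative, and any excitation wavelength passed in (for example 532 nm) is an assumption, since the review does not specify one.

```python
def stokes_wavelength_nm(excitation_nm, shift_cm1):
    """Wavelength of Stokes-shifted (lower-energy) Raman light."""
    wavenumber = 1.0e7 / excitation_nm - shift_cm1  # cm^-1
    if wavenumber <= 0:
        raise ValueError("shift exceeds the excitation photon energy")
    return 1.0e7 / wavenumber


def anti_stokes_wavelength_nm(excitation_nm, shift_cm1):
    """Wavelength of anti-Stokes (higher-energy) Raman light."""
    return 1.0e7 / (1.0e7 / excitation_nm + shift_cm1)


def raman_shift_cm1(excitation_nm, scattered_nm):
    """Raman shift in cm^-1; positive for Stokes, negative for anti-Stokes."""
    return 1.0e7 / excitation_nm - 1.0e7 / scattered_nm
```

The factor 1.0e7 converts a wavelength in nanometers to a wavenumber in cm−1. With an assumed 532 nm excitation, a 1670 cm−1 Stokes band appears near 584 nm.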

Second harmonic generation is a nonlinear coherent process that depends strongly on the incident light and the material. Two virtual states are assumed in this scenario to represent the virtual absorption of two incident photons.42 The new photons that follow the virtual absorption have twice the frequency and half the wavelength of the incident photons.43 Higher-order harmonic generation follows a similar principle but with a much lower efficiency. Collagen is the most widespread biomolecule in the extracellular matrix and is also related to the tumor microenvironment. The second harmonic signal from collagen typically arises from the noncentrosymmetric structure of the collagen assembly, owing to the requirement of large hyperpolarizability.44 Similarly, microtubules and myosin can generate strong second harmonic signals.

The Doppler effect refers to the frequency shift of a wave when there is relative motion between the source and the observer, where the shift depends linearly on the relative speed.45 Blood is pumped by the heart through the circulatory system. The blood flow velocity can reveal the local metabolic rate of oxygen in tissues. The scatterers in the blood, such as red blood cells and leukocytes, can be regarded as moving light sources when the incident light illuminates them. Therefore, the Doppler shift of the scattered light can be used to evaluate the blood flow velocity.
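The linear relation between Doppler shift and flow velocity can be illustrated with a short sketch. It assumes a backscattering geometry (illumination and detection along the same axis), for which the shift is 2·n·v·cos(θ)/λ0; this geometry, the function names, and all numerical values used with them are assumptions for illustration and are not taken from the review.

```python
import math


def doppler_shift_hz(velocity_m_s, wavelength_m, refractive_index, angle_rad):
    """Doppler shift of light backscattered from a moving scatterer.

    velocity_m_s: speed of the scatterer (m/s)
    wavelength_m: vacuum wavelength of the incident light (m)
    refractive_index: refractive index of the surrounding medium
    angle_rad: angle between the flow direction and the optical axis
    """
    return (2.0 * refractive_index * velocity_m_s * math.cos(angle_rad)
            / wavelength_m)


def velocity_from_shift(shift_hz, wavelength_m, refractive_index, angle_rad):
    """Invert the relation above to recover the flow speed (m/s)."""
    cos_angle = math.cos(angle_rad)
    if abs(cos_angle) < 1e-9:
        raise ValueError("flow perpendicular to the beam gives no Doppler shift")
    return shift_hz * wavelength_m / (2.0 * refractive_index * cos_angle)
```

Because the shift scales with cos(θ), flow perpendicular to the beam produces no measurable shift, which is why the angle has to be known or estimated before a velocity can be reported.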

2.4. Changing the polarization of incident light

Light is a transverse wave whose displacement vector is perpendicular to the propagation direction. The polarization indicates the orientation of the displacement vector over time, analogous to the vibration direction of a mechanical wave. Tissues with anisotropic structure may exhibit birefringence, which separates incident light into two components vibrating in different directions.20 Because the two components experience different refractive indices, their recombination changes the original polarization of the incident light. Collagen, elastin fibers, and nerve fibers can show strong birefringence.46 Interestingly, many deposits related to various diseases are also birefringent, such as urates in gout, calcium oxalate in uremia, cholesterol ester in atheromatosis, and amyloid in amyloidosis.47

2.5. Changing the phase of the incident light

According to the wave equation, the speed of light in biological tissues is determined by their dielectric constant, since they are nonmagnetic materials. If we consider the composition of cells, the cell membrane, cytoplasm, and cell nucleus have quite different dielectric constants. Therefore, light passing through a cell travels along paths of similar geometric length but at different speeds, and thus experiences different optical path lengths.48 The delayed light exhibits a phase delay relative to undisturbed light.
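The link between dielectric constant, optical path length, and phase delay can be made concrete with a small sketch. For a nonmagnetic medium the refractive index is the square root of the relative permittivity, and a structure of thickness d with index n_object in a medium of index n_medium delays the light by 2π(n_object − n_medium)d/λ. The function names and the example indices in the usage note are illustrative assumptions, not measured values.

```python
import math


def refractive_index(relative_permittivity):
    """Refractive index of a nonmagnetic medium (relative permeability = 1)."""
    return math.sqrt(relative_permittivity)


def phase_delay_rad(n_object, n_medium, thickness_m, wavelength_m):
    """Phase delay of light crossing an object relative to undisturbed light.

    The optical path difference is (n_object - n_medium) * thickness, and
    one wavelength of path difference corresponds to 2*pi of phase.
    """
    optical_path_difference = (n_object - n_medium) * thickness_m
    return 2.0 * math.pi * optical_path_difference / wavelength_m
```

For example, an assumed index difference of 0.05 across a 5 µm thick structure gives a path difference of 0.25 µm, which is half a wavelength (a phase delay of π) for 500 nm light. Delays of this size are invisible to intensity-only detectors, which motivates the interference-based methods discussed in Section 3.1.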

3. Looking at the Biomolecules with Optical Resolution

By harnessing the changes in the incident light after it passes through biological tissues, we may observe those biomolecules. However, many obstacles remain until techniques are developed to capture the corresponding light signals and extract the information. First, the contrasts mentioned above should be measurable, to bridge the gap between the biomolecules and the electronic signals. Second, the spatial resolution should reach at least the submicron level in three dimensions to observe the details of cells.

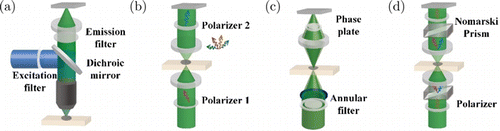

3.1. Transforming the optical contrast to the intensity of the optical signal

In general, the commercial photodetectors widely adopted in optical imaging, including photodiodes, photomultiplier tubes, charge-coupled devices, and complementary metal–oxide–semiconductor cameras, are sensitive only to the intensity of light.49 Therefore, scattered light can be observed directly, while absorbed light can be quantified indirectly by measuring the transmitted light. Sensing changes in the wavelength, polarization, and phase of light, however, requires additional techniques. The emission light can be separated from the incident light by introducing optical filters into the light path (Fig. 4(a)). Excitation and emission filters select incident and emitted light of designated wavelengths. Dichroic mirrors reflect the incident light and pass the emission light, or vice versa, making fluorescence imaging in reflection mode possible. In second harmonic imaging, the emission light has half the wavelength of the incident light, whereas in some fluorescence imaging the emission light may overlap spectrally with the incident light to some extent. In the latter case, high-performance interference filters and dichroic mirrors are required,50 especially for faint fluorophores, given the high background from the incident light. Similarly, polarized light imaging relies on polarizers, which set the polarization direction of the incident light and analyze that of the emission light (Fig. 4(b)). By contrast, detecting phase differences of light is considerably more difficult. The first breakthrough was the phase contrast microscope (PCM), which converts phase into intensity by introducing optical interference (Fig. 4(c)).51,52 In PCM, an annular ring mask replaces the condenser aperture diaphragm and generates unidirectional illumination. Then, a phase ring, which attenuates and retards the passing light, is placed at the rear focal plane of the objective, conjugate to the condenser aperture.
Following Abbe’s theory of microscopic imaging, the final image results from the interference of the light diffracted by the specimen and collected by the objective. The specimen’s zero-order (undeviated) light is delayed and attenuated by the phase plate. In contrast, the higher-order light is retarded by the specimen by about 1/4 wavelength, spreads across the aperture of the objective, and interferes with the zero-order light at the image plane. By separately adjusting the phase and the intensity of the undeviated light, we can obtain the best contrast to reveal the phase difference introduced by the observed cells.53 Later, another method, differential interference contrast (DIC) microscopy, was proposed to do a similar job in a more straightforward manner (Fig. 4(d)). The principle of DIC is to generate two illumination beams with a slight difference in position, which produce two wavefronts that interfere at the image plane.54 The interference of the two wavefronts represents the difference in optical path between each position in the sample and its adjacent area. The birefringent Nomarski prism plays a key part in DIC by splitting and merging the light path. In addition, polarizers and analyzers are used to ensure efficient beam splitting and interference. In subsequent studies, interference has been adopted as an efficient way to convert phase information into intensity,55 for example in holography and lensless imaging.

Fig. 4. Transforming the optical contrast into the optical signal. (a) In fluorescence microscopy, filter sets are used to separate light whose wavelength has changed. (b) Polarizers can inspect changes in the polarization of the light. PCM in (c) and DIC in (d) transform the difference in phase delay into optical intensity through interference.

3.2. Making the optical signal detectable

In other cases, the required signal may be either too weak or flooded by the incident light, making it impractical to detect in biological samples. Many electronic methods can extract weak signals, but this review focuses on optical techniques. Darkfield imaging is a typical technique for suppressing the incident light (Fig. 5(a)). In darkfield imaging, oblique illumination is generally introduced by blocking the central part of the incident light. The detection path then collects only the Rayleigh scattered light that has changed its initial direction, while avoiding the light that follows the incident azimuth.56 Sensitivity limits Raman imaging in biological samples because of the exceptionally small Raman scattering cross section (a measure of the interaction probability) of molecules. Coherent Raman scattering (CRS), instead of spontaneous Raman scattering, can boost the magnitude of Raman scattering by several orders of magnitude.57 The dominant CRS techniques are coherent anti-Stokes Raman scattering (CARS) microscopy (Fig. 5(b)) and stimulated Raman scattering (SRS) microscopy (Fig. 5(c)). CRS requires two lasers, a pump laser and a Stokes laser, focused on the sample so that they overlap in both space and time. When their beat frequency approaches the frequency of a specific molecular vibration, resonance occurs and greatly amplifies the CARS signal.58 SRS microscopy can achieve better signal quality by detecting the variation of the pump or Stokes laser in lock-in mode, which is not contaminated by the non-Raman background.59
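The resonance condition for CRS can be sketched numerically. In wavenumbers, the beat frequency is the pump minus the Stokes wavenumber, and the CARS signal appears at twice the pump wavenumber minus the Stokes wavenumber. The helper below is a hypothetical planning aid, not part of any instrument software, and the pump wavelength and target vibration passed to it are assumptions chosen by the user.

```python
def cars_wavelengths_nm(pump_nm, vibration_cm1):
    """Return the Stokes and anti-Stokes wavelengths for a CARS experiment.

    pump_nm: pump laser wavelength (nm)
    vibration_cm1: target molecular vibration (cm^-1)
    The Stokes laser is tuned so that pump - Stokes equals the vibration,
    and the CARS signal is emitted at 2 * pump - Stokes (in wavenumbers).
    """
    pump = 1.0e7 / pump_nm                # pump wavenumber, cm^-1
    stokes = pump - vibration_cm1         # Stokes wavenumber, cm^-1
    if stokes <= 0:
        raise ValueError("vibration exceeds the pump photon energy")
    anti_stokes = 2.0 * pump - stokes     # CARS signal wavenumber, cm^-1
    return {"stokes_nm": 1.0e7 / stokes, "anti_stokes_nm": 1.0e7 / anti_stokes}
```

Because the anti-Stokes signal lies on the short-wavelength side of both lasers, it can be isolated with a short-pass filter, whereas SRS must detect a small intensity change on one of the laser beams themselves.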

Fig. 5. Transforming the optical contrast into the detectable optical signal. (a) Darkfield imaging detects only the scattered light (yellow part), using an objective with a lower numerical aperture (NA) to avoid the high background of the illumination (green part). (b) CARS detects the generated Raman signal (blue), while SRS (c) detects the variation of the pump laser (green).

3.3. Contrast agent

Exogenous contrast agents are another route to contrast enhancement, one that dates back to the early years of the optical microscope.60 Huge numbers of exogenous contrast agents based on various biochemical designs have been adopted in almost every optical imaging method.61,62 Nevertheless, they share a common task: binding to specific molecules and amplifying the optical contrast by exploiting their interaction with light.8 Covering this whole field is far beyond the scope of this review, so we only briefly introduce several representative examples. Staining cells with dyes was the first attempt to increase contrast in optical imaging, since dyes are light absorbers. The silver staining proposed by Golgi accelerated the discovery of neurons by randomly labeling whole neurons and increasing their light absorption.62 It makes every detail, including the soma, the axon, and the protrusions on the dendrites, appear black in the view of the wide-field optical microscope. Fluorescent dyes revolutionized this field and have been widely applied from biomedical research to clinical diagnosis. In particular, fluorescent proteins (FPs),63 including green FP, red FP, and their mutants covering the visible and near-infrared spectrum, can be used as a “dye” for gene expression. Surface-enhanced Raman spectroscopy can enhance Raman scattering thousands of times without the CRS technique, using Au or Ag nanoparticles as the contrast agent.64 In fact, any biomolecule that changes one of the optical properties mentioned above to a detectable level could be used as a contrast agent.

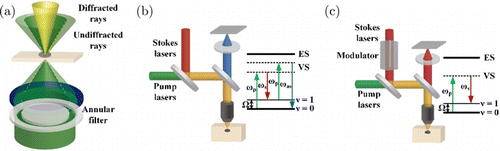

3.4. Achieving the spatial discrimination in three dimensions

The invention of the optical microscope dates from the 17th century. Using the microscope, the term “cell” was first proposed to describe the pores in cork, which our eyes cannot directly discriminate.65 Since then, we have entered a new era of microscopy. Modern optical microscopes still use compound optics, generally composed of an objective, a tube lens, and an eyepiece. In a bright-field microscope, whether it works in transmission or reflection mode, Köhler illumination is always required to ensure an even light field with parallel incident light. The objective collects light from the focal plane and forms a parallel beam, which then enters the tube lens to form an intermediate image. The eyepiece provides a secondary magnification of the intermediate image, producing the magnified virtual image seen by our eyes. It might seem that the higher the total magnification of the whole light path, the better the resolving power of the microscope. However, Abbe’s formula indicates that the fundamental limit is set by the wavelength and the NA, which describes the collection angle of the microscope.20 Abbe supposed that samples are quasi-transparent gratings. Following his imaging theory, the objective must then collect at least one diffraction order in addition to the zeroth order of the grating to recover the image. Airy also derived the diffraction pattern of a circular aperture, which represents the blurring introduced by imaging with a microscope.66 According to the Rayleigh criterion, the distance between the central maximum and the first minimum of the Airy disk can be regarded as the microscope’s resolving power (Fig. 6(a)),67 which also confirms Abbe’s conclusion.
It is worth mentioning that if the illumination comes from a coherent light source, the complex amplitude of the Airy disk must be considered when resolving two adjacent points.68 Despite a century of effort, the resolution of a conventional microscope can be improved only by decreasing the wavelength or increasing the NA of the objective. Moreover, the best spatial resolution of optical microscopy for biological samples is around 200 nm, which is not enough to observe some organelles. In the last two decades, three different strategies have been proposed to break the diffraction limit: modulation-based methods (Fig. 6(b)), localization-based methods (Fig. 6(c)), and local excitation-restricted methods (Fig. 6(d)).69 Modulation-based methods assume that the emission depends linearly on the excitation intensity.70 High-frequency information from the sample can then be moved into the pass-band of the microscope, in analogy to modulation and demodulation in communications. The most common modulation-based super-resolution system is structured illumination microscopy (SIM).71,72,73 It adopts a fringe pattern with a single spatial frequency as the illumination. The detected image therefore superimposes the pass-band component with the sum and beat frequencies of the fringe frequency and the original sample frequencies. Three excitation fringes with different phases can be used to separate these components, which are then moved to their correct positions in the Fourier domain.74 The frequency of the fringe and the pass-band of the microscope determine the resolution limit of SIM. Speckle and point-like patterns have also demonstrated a twofold resolution improvement in the epi-illumination mode.75,76,77 Naturally, the spatial resolution could be raised further by generating higher-frequency modulations.
This can be achieved with nonlinear excitation of samples illuminated by sinusoidal fringes, which generally requires very high excitation intensity and fluorophores that resist photobleaching.78,79 The second approach relies on single-molecule localization.80 Photoactivatable localization microscopy (PALM) and stochastic optical reconstruction microscopy (STORM) are representative works.81,82,83 They use light to control the state of photoactivatable fluorophores so that the fluorescence emission is sparse. As a result, no more than one fluorophore is detected within an Airy disk. Algorithms for single-molecule localization can then determine the position of the active fluorophore. The sparse emission and detection are repeated hundreds of thousands of times. The accumulated positions of the fluorophores constitute the final image, whose resolution is determined by the localization accuracy.84,85 Finally, squeezing the excitation spot size is the most straightforward approach. Stimulated emission depletion (STED) microscopy adopts a focused doughnut-shaped beam to suppress the fluorophores at the periphery of the Airy disk.86 Only fluorophores in the central part of the Airy disk can then emit fluorescence when excited by another Gaussian beam.87 Therefore, the effective excitation spot can be restricted to a much smaller size. Photoswitchable fluorophores can achieve a similar goal of suppressing the periphery of the Airy disk.87,88 In addition, nonlinear excitation can narrow the effective spot size, since the spot is determined by the Nth power of the excitation profile when an Nth-order nonlinear effect is introduced.89,90 To date, a hybrid super-resolution system combining doughnut-shaped illumination and single-molecule localization has achieved unprecedented resolution.91 This method takes advantage of the small size of the central part of the doughnut to narrow the range over which single molecules are localized.
It therefore further improves the localization accuracy and achieves a better resolution. So far, we have discussed the efforts in far-field optical microscopy. In the near field, that is, when the emission light is detected within about one wavelength of the sample, details finer than the wavelength are preserved. However, near-field optical microscopy is yet to be adopted in biomedical imaging44 because a subwavelength-scale tip is required to detect near-field information, which would result in near-contact with the sample.
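The resolution limits discussed above can be compared with a short numerical sketch. It uses the standard forms λ/(2·NA) for the Abbe limit and 0.61·λ/NA for the Rayleigh criterion, plus a simplified localization-precision estimate σ/√N that ignores background and pixelation. The simplification, the function names, and the example values in the usage note are assumptions for illustration.

```python
import math


def abbe_limit_nm(wavelength_nm, numerical_aperture):
    """Abbe diffraction limit for lateral resolution."""
    return wavelength_nm / (2.0 * numerical_aperture)


def rayleigh_limit_nm(wavelength_nm, numerical_aperture):
    """Rayleigh criterion: central maximum to first minimum of the Airy disk."""
    return 0.61 * wavelength_nm / numerical_aperture


def localization_precision_nm(psf_sigma_nm, photons):
    """Simplified single-molecule localization precision, sigma / sqrt(N).

    Assumes a Gaussian-like spot, no background, and negligible pixel size.
    """
    if photons <= 0:
        raise ValueError("at least one detected photon is required")
    return psf_sigma_nm / math.sqrt(photons)
```

With 500 nm light and an NA of 1.25, the Abbe limit evaluates to 200 nm, in line with the figure quoted above. A spot with a 100 nm standard deviation localized from 400 photons gives a precision of about 5 nm under the stated simplifications, which illustrates why localization-based methods can exceed the diffraction limit.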

Fig. 6. Breaking the diffraction limit of optical imaging. (a) Two close Airy disks that are easily resolved, just resolved, and unresolved. (b) Generation of high spatial frequency illumination (fringe pattern) and the principle of expanding the Fourier domain (different colors represent illumination in different directions). (c) Principle of PALM. Only one fluorophore (green dot) emits light within an Airy disk at each exposure. Repeated imaging can determine the position of each fluorophore using Gaussian fitting. (d) Principle of STED. The depletion light (red) ensures that fluorescence can be emitted only from the central part (smaller green spot) of the Airy disk.

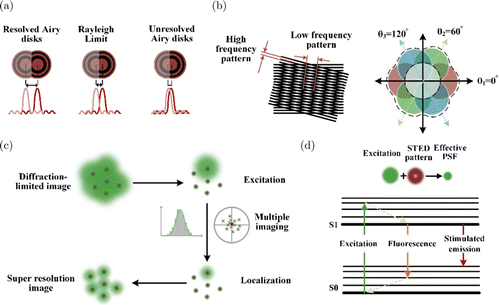

Spatial resolution in three dimensions is required to depict the whole organ. Unfortunately, light is difficult to resolve along its propagation direction in a pulse-echo mode, as is done in ultrasonic imaging. Consider a light pulse with a width of 100 fs propagating in tissue: given the speed of light, it extends over more than 20 microns. To discriminate such pulses in space, we would need detectors and digitizers with tens of terahertz of bandwidth and sampling rate, which is unrealistic for electronics. Admittedly, an objective forms an Airy disk not only in the transverse direction but also in the axial direction, and its axial length is inversely proportional to the square of the NA of the objective. However, we can only build planar detectors rather than three-dimensional sensors. The whole optical field is therefore projected onto the planar detector, although the in-focus information dominates when a high-NA objective is adopted. Developing techniques to remove out-of-focus crosstalk is thus critical to ensure in-focus imaging. We often refer to these techniques as optical sectioning,92 since they preserve only the in-focus information, just like mechanical sectioning of the in-focus tissue. Different strategies have been developed to achieve optical sectioning: restricting the illumination area, blocking the out-of-focus light, and modulation. In theory, if only the in-focus tissue is illuminated, no out-of-focus information is generated, which is the ultimate solution for optical sectioning. Light sheet microscopy (LSM) faithfully follows this concept (Fig. 7(a)).93 Unlike in other optical microscopes, the optical axes of illumination and detection in LSM no longer overlap.94 Instead, the illumination is often perpendicular to the detection path, which avoids out-of-focus excitation.95 Various illumination shapes have been investigated to generate a large, thin, and uniform light sheet for better sectioning.96,97,98,99 Two-photon excitation is a nonlinear effect in which two photons are absorbed almost simultaneously and a photon with higher energy is released shortly afterward.100 Two-photon microscopy (TPM) was introduced into biological research following the invention of the femtosecond pulsed laser. Two-photon absorption requires a laser that is highly focused in both space and time.101 Owing to the nonlinear response of the fluorescence to the excitation light, out-of-focus fluorescence decays quadratically away from the focal plane (Fig. 7(b)).102,103 Apart from manipulating the light in the excitation path, directly blocking the out-of-focus fluorescence in the detection path is also widely adopted.104 Confocal microscopy (CM) is the most popular instrument in biological labs around the world (Fig. 7(c)). A diffraction-limited light spot is commonly used for excitation, while a pinhole placed in front of the detector, conjugate to the spot, directly blocks the out-of-focus fluorescence,105 and its size balances the sectioning capability against the signal level.106 It is worth mentioning that both TPM and CM work in a low-efficiency raster scanning mode. Parallelized systems sharing similar principles, like Nipkow-disk confocal or aperture correlation microscopy, can largely speed up image acquisition at the cost of lateral crosstalk.107,108,109 Therefore, to achieve fast optical sectioning, various modulation-based methods have been devised (Fig. 7(d)).110 When we illuminate the sample with a designated pattern, the in-focus information is modulated by the pattern. 
In contrast, the out-of-focus information is modulated by the defocused, blurred version of the pattern. Then, as we slightly shift the pattern, the in-focus information changes accordingly, whereas the out-of-focus information does not, because the pattern is blurred out of focus.111 In practice, sinusoidal and speckle patterns play a similar role in illumination,112,113 but require different image reconstruction methods.
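
The modulation idea can be sketched for a single pixel in Python. This is a minimal illustration under assumptions that the text does not specify: a sinusoidal fringe shifted in three equal phase steps (0, 2π/3, 4π/3), an out-of-focus background that carries no fringe at all, and a square-law demodulation, which is one widely used reconstruction for sinusoidal patterns. The function names are hypothetical.

```python
import math


def raw_images(in_focus, background, modulation=1.0, phase0=0.0):
    """Three raw pixel values for fringe phases 0, 2*pi/3 and 4*pi/3.

    Assumed pixel model: only the in-focus part carries the fringe,
    while the out-of-focus background is unmodulated.
    """
    return [
        background
        + in_focus * (1.0 + modulation * math.cos(phase0 + 2.0 * math.pi * k / 3.0))
        for k in range(3)
    ]


def sectioned(i1, i2, i3):
    """Square-law demodulation: returns modulation * in_focus, background removed."""
    return (math.sqrt(2.0) / 3.0) * math.sqrt(
        (i1 - i2) ** 2 + (i2 - i3) ** 2 + (i3 - i1) ** 2
    )


def widefield(i1, i2, i3):
    """Average of the three frames: the conventional image, background included."""
    return (i1 + i2 + i3) / 3.0


if __name__ == "__main__":
    frames = raw_images(in_focus=10.0, background=50.0, phase0=0.7)
    print("sectioned:", sectioned(*frames))
    print("widefield:", widefield(*frames))
```

Because the background term is identical in all three frames, it cancels in the pairwise differences, while the averaged frame still contains it. Real out-of-focus light is only approximately unmodulated, so the rejection in practice is less complete than in this idealized pixel model.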

Fig. 7. Optical sectioning microscopy. (a) Inclined illumination excites only the fluorophores in the focal plane of the detection objective. (b) The nonlinear effect confines excitation to the focal spot, and the background decays quadratically away from the focal spot. (c) A pinhole in front of the detector blocks the out-of-focus fluorescence. (d) The fringes form a clear pattern in the focal plane but are blurred out of focus. Changing the phase of the fringes introduces an obvious difference in the focal plane and a negligible change out of focus.

Beyond sectioning capability, improving the axial resolution is even more desirable than improving the transverse resolution, since the axial resolution is much worse than the transverse resolution for the same objective.114,115 The methods for improving axial resolution follow strategies similar to those used in the aforementioned super-resolution microscopy. Exploiting the better transverse resolution of an objective, inclined illumination can slightly improve the axial resolution, which is then determined jointly by the axial extent of the Airy disk of the detection objective and the transverse extent of that of the illumination objective (Fig. 8(a)).116 When the inclination angle reaches 90 degrees, the setup becomes LSM and the axial resolution is decided solely by the illumination objective. Nonlinear excitation also works along the axial direction, as described before. However, the restricted excitation thickness is still diffraction-limited. Total internal reflection fluorescence microscopy takes advantage of the evanescent field, which does not propagate away from the source and decays exponentially with distance from the interface between materials with different refractive indices (Fig. 8(b)).117 Therefore, light incident beyond the critical angle produces an illumination section with a thickness of a few hundred nanometers right next to the interface, breaking the diffraction limit along the axial direction. Conversely, supercritical angle fluorescence microscopy directly collects, above the critical angle, the near-field emission of fluorophores beneath the cover glass, which also decays exponentially away from the glass.118 Finally, three-dimensional STED shares the same principle as the two-dimensional setup, except that the doughnut-shaped beam spot is extended to three dimensions (Fig. 8(c)).119 Among the various modulation-based strategies, 4Pi microscopy and I2M use two opposing objectives to generate modulation fringes along the axial direction by interference (Fig. 8(d)).120,121,122 If the excited fluorescence collected by the two objectives is also made to interfere, the performance is further improved.123,124 In three-dimensional SIM, 3D fringes are generated by three-beam interference, so the reconstruction also needs to be extended to 3D (Fig. 8(e)).125 Moreover, combining nonlinear excitation can offer high-order modulation, similar to that described for nonlinear SIM. Localization-based methods for axial super-resolution differ from those for the transverse direction. In essence, the axial position is encoded by a specially designed focal spot whose shape varies along the axial direction, which is called PSF engineering.126

Fig. 8. Improving the axial resolution of optical microscopy. (a) Inclined illumination generates a much smaller spot along the optical axis of the detection objective. (b) The evanescent wave exists only near the interface, within a thickness of hundreds of nanometers, where the fluorophores can be excited. (c) 3D STED uses a 3D depletion pattern to limit the excitation range in depth. (d) 4Pi microscopy uses interference between the upper and lower beams to produce a narrow main lobe in depth. (e) 3D SIM generates 3D fringes by interference to expand the Fourier domain in 3D. (f) A cylindrical lens introduces extra focal power to generate two different focal planes. Fluorophores at different depths are imaged with PSFs of different shapes.

Take the astigmatic PSF as an example (Fig. 8(f)). When a cylindrical lens is inserted in the imaging path to slightly change the focal power along one direction, astigmatism is introduced into the focal spot. The focal spot is then circular only in the focal plane, and its ellipticity is used to determine the exact axial position of the single molecule.127
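
How ellipticity encodes depth can be sketched in Python as a lookup against a calibration curve. This is only an illustration: the width model below (two focal planes offset by ±200 nm, a 150 nm in-focus width, a 400 nm depth scale) is a simplified, hypothetical calibration rather than measured data, and the brute-force search in `estimate_z` is a stand-in for the fitting used in practice.

```python
import math


def psf_widths(z_nm, w0_nm=150.0, focal_offset_nm=200.0, depth_nm=400.0):
    """Spot widths along x and y for an emitter at axial position z.

    Assumed model: the cylindrical lens shifts the x and y focal planes
    to +focal_offset_nm and -focal_offset_nm, and each width grows with
    the distance from its own focal plane.
    """
    wx = w0_nm * math.sqrt(1.0 + ((z_nm - focal_offset_nm) / depth_nm) ** 2)
    wy = w0_nm * math.sqrt(1.0 + ((z_nm + focal_offset_nm) / depth_nm) ** 2)
    return wx, wy


def estimate_z(wx_meas, wy_meas, z_min_nm=-400, z_max_nm=400, step_nm=1):
    """Return the z (nm) whose calibrated widths best match the measured ones."""
    best_z, best_err = z_min_nm, float("inf")
    for z in range(z_min_nm, z_max_nm + 1, step_nm):
        wx, wy = psf_widths(z)
        err = (wx - wx_meas) ** 2 + (wy - wy_meas) ** 2
        if err < best_err:
            best_z, best_err = z, err
    return best_z


if __name__ == "__main__":
    wx, wy = psf_widths(150)       # synthetic, noise-free measurement
    print("widths (nm):", wx, wy)  # elongated along y above the focal plane
    print("estimated z (nm):", estimate_z(wx, wy))
```

In this model the spot is circular at z = 0 and becomes elongated along one axis or the other depending on the sign of z, so the pair of widths identifies the depth uniquely within the calibrated range.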

4. Observe the Single Cells in the Whole Organs

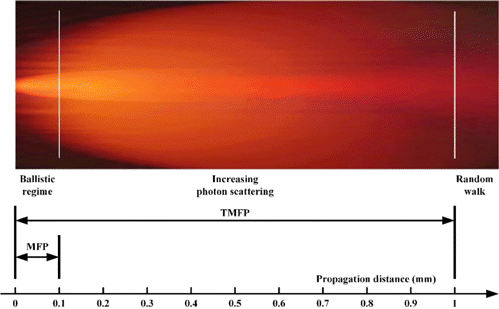

Now that contrast can be measured with sufficient three-dimensional resolution, the last task is to extend these techniques to the whole organ. However, this may be the most challenging part so far. Photons can penetrate tissue to a depth of about 5 cm at most, which could cover a whole human organ with bilateral illumination. However, as described before, biological tissues are highly heterogeneous in refractive index.128 Different organs have quite different optical properties, generally with high scattering and relatively low absorption. Generally speaking, the mean free path (MFP), which is the mean path length between scattering events regardless of absorption, is about 100 μm for biological tissues. This suggests that, on average, a photon undergoes one scattering event for every 100 μm it travels through tissue. When we go further into the tissue, to around 1 mm, which is the transport mean free path (TMFP), about half of the photons have lost the information of their incident direction (Fig. 9). Therefore, between the MFP and the TMFP, ballistic photons, which preserve their original direction, still dominate. Beyond that depth, however, propagation becomes largely diffusive, and we eventually reach the diffusion regime as we go further into the tissue.129
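
The two length scales above are linked by the scattering anisotropy, which the following short Python sketch makes explicit. It relies on the standard relation TMFP = MFP / (1 − g) and on Beer-Lambert decay of strictly unscattered light. The anisotropy value g = 0.9 is an assumed, typical figure for soft tissue and is not given in the text; absorption is neglected, and the function names are hypothetical.

```python
import math


def transport_mfp_um(mfp_um, g):
    """Transport mean free path from the scattering MFP and the anisotropy g."""
    return mfp_um / (1.0 - g)


def unscattered_fraction(depth_um, mfp_um):
    """Fraction of photons that have not scattered at all (absorption neglected)."""
    return math.exp(-depth_um / mfp_um)


if __name__ == "__main__":
    mfp = 100.0  # um, value quoted in the text
    g = 0.9      # assumed anisotropy of forward-directed tissue scattering
    print("TMFP (um):", transport_mfp_um(mfp, g))
    for depth in (100.0, 500.0, 1000.0):
        print(depth, "um ->", unscattered_fraction(depth, mfp))
```

Strictly unscattered photons fall off quickly with depth, but because each scattering event in tissue deflects the photon only slightly, direction information persists over many events, which is why the useful range extends to roughly the TMFP rather than the MFP.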

Fig. 9. Transport of light in biological tissues.

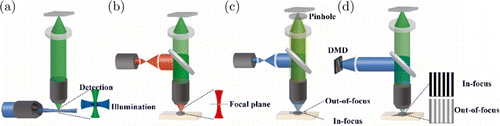

4.1. Imaging within 1 TMFP

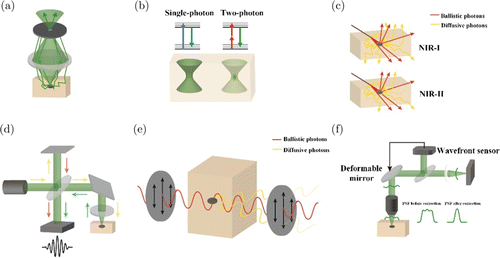

Unfortunately, the state-of-the-art high-resolution optical microscopes mentioned before are normally based on the assumption of no or weak scattering during light propagation. Especially for super-resolution microscopes, scattering of the excitation or emission light, even if it introduces only a slight deviation from the original direction, disables the focusing or localization strategy. Therefore, the useful depth of super-resolution microscopes is restricted to about 10–20 μm. For diffraction-limited microscopes, scattering raises the background and broadens the Airy disk in three dimensions. They can be used at depths around 1 MFP, since the signal from ballistic photons is still strong. However, they usually fail at depths around 1 mm without further improvement.130 The aim of techniques for imaging within 1 TMFP is to discriminate ballistic from diffusive photons. The fundamental principle is based on the different behavior of ballistic and diffusive photons in propagation direction, propagation path, coherence, and polarization.131,132,133 Ballistic photons, after propagating through tissue, maintain their original incident direction and spatial frequency. Therefore, directly introducing spatial gating into the detection path can block the diffusive photons, which have deviated from their initial incident direction. Confocal imaging is the typical setup based on this principle (Fig. 10(a)). However, the capability of spatial gating is quite limited for two reasons. First, no spatial gating can be applied in the illumination path. Second, diffusive photons excited in the bulk volume may also pass through the spatial gate. Therefore, the effective imaging depth of CM is around 1 MFP. In contrast, a multiphoton microscope relies on spatial gating in excitation rather than in emission as in CM. 
As described above, MPM relies on highly localized excitation near the focus.134 Therefore, all the excited fluorescence can be regarded as signal, regardless of whether the emitted photons are ballistic or diffusive. In this case, the background is suppressed and the collected signal is enhanced simultaneously (Fig. 10(b)), compared with single-photon CM.103 Furthermore, the excitation wavelength in MPM is two or three times that used in single-photon excitation and normally lies in the near-infrared band. The MFP of near-infrared light in tissue is much longer than that of visible light, as expected from the wavelength dependence of Rayleigh scattering. Therefore, MPM promises much deeper penetration into tissue, to around 1 TMFP. On the other hand, diffusive photons travel a longer optical path after multiple scattering and therefore arrive later than ballistic photons. However, as indicated above, light travels so fast that time gating cannot meet the demanding requirements of diffraction-limited microscopes. It is worth mentioning that fluorescence imaging in the second near-infrared window (about 1300 nm) uses a wavelength similar to that of MPM for deep imaging,135 but with single-photon excitation. The high resolution of this imaging mode is mainly attributed to higher absorption rather than lower scattering in this waveband.136 Although absorption increases for ballistic and diffusive photons alike, diffusive photons travel a much longer path and consequently suffer more attenuation (Fig. 10(c)).137 The coherence of ballistic photons with the illumination light can be exploited to suppress the diffusive photons.133 Coherence gating can be realized with a setup based on the Michelson interferometer, known as optical coherence tomography (OCT).138 OCT consists of a light source, a sample arm, a reference arm, and a detection arm. 
The light reflected by the sample interferes with the light reflected by the mirror in the reference arm when the two have the same optical path length (Fig. 10(d)).139 The interference fringes are dominated by the ballistic photons from the focus. Depth information can be acquired by scanning the reference mirror along the axial direction. Instead of a monochromatic laser, a low-coherence light source is required in OCT, since the axial resolution is set by the coherence length of the source, which is inversely proportional to its bandwidth.140 In practice, OCT can approach 1 TMFP owing to the high efficiency of coherence gating combined with spatial gating, which is introduced by a single-mode fiber conjugate to the focus.141 The polarization state of photons that experience multiple scattering is rarely preserved. To exploit this property, we can insert an analyzer into the detection path to retrieve the output light polarized orthogonal and parallel to the incident polarization, which contain diffusive information and a mixture of ballistic and diffusive information, respectively (Fig. 10(e)).142,143 Then, we can separate the ballistic information by analyzing the two orthogonal polarizations.144 Polarization gating can only alleviate scattering because it is a soft gate in which the diffusive information still occupies a large part of the dynamic range of the sensor. Besides all these gating methods, adaptive optics (AO), which was first adopted in astronomy for aberration correction, has been combined with the aforementioned microscopes to further extend the imaging depth (Fig. 10(f)).145 In an AO system, the diffusive photons are regarded as deviated light introduced by the heterogeneity of the refractive index of tissue,146,147 similar to the optical aberrations induced by the atmosphere in astronomy. An AO system therefore consists of two major components: wavefront sensing and wavefront correction. 
Direct wavefront sensing adds a dedicated sensor to the microscope to measure the wavefront, while indirect wavefront sensing uses the original detector of the microscope to estimate the wavefront. After that, a spatial light modulator or deformable mirror corrects the wavefront to steer the deviated light back to the correct direction. AO systems can handle slightly deviated light but can hardly deal with highly diffused light, which introduces high-order optical aberrations. AO cannot fundamentally solve the problem, but it is a highly efficient approach to boosting the performance of microscopes.
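
The link between source bandwidth and OCT axial resolution discussed in this section can be illustrated with a short Python calculation. It assumes a source with a Gaussian spectrum, for which the axial resolution is commonly taken as (2 ln 2 / π) · λ₀² / Δλ, divided by the refractive index of the medium. The example wavelength and bandwidths are hypothetical values chosen for illustration, not parameters from the cited works.

```python
import math


def oct_axial_resolution_um(center_wavelength_nm, bandwidth_fwhm_nm,
                            refractive_index=1.0):
    """Axial resolution (um) of OCT for a Gaussian-spectrum source (assumed)."""
    coherence_nm = ((2.0 * math.log(2.0) / math.pi)
                    * center_wavelength_nm ** 2 / bandwidth_fwhm_nm)
    return coherence_nm / refractive_index / 1000.0


if __name__ == "__main__":
    # Hypothetical sources centered at 1300 nm with increasing bandwidth.
    for bandwidth in (1.0, 50.0, 100.0):
        print(bandwidth, "nm bandwidth ->",
              oct_axial_resolution_um(1300.0, bandwidth), "um")
```

A narrowband, laser-like source gives an axial resolution on the millimeter scale in this model, whereas a source with a bandwidth of about 100 nm reaches a few microns, which is why broadband, low-coherence light is used.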

Fig. 10. Techniques for anti-scattering optical imaging within 1 TMFP. (a) CM blocks the scattered light with a pinhole. (b) TPM excites fluorophores locally, and the generated fluorescence scattered by the tissue can still be collected, since it is known to originate from the focal spot. (c) The diffusive light (yellow) is weakened by the higher absorption in NIR-II than in NIR-I. (d) The layout of OCT is similar to a Michelson interferometer. (e) The scattered light (yellow) changes its polarization and is suppressed by a polarizer. (f) In AO, the deformable mirror and wavefront sensor work in a feedback loop. After optimization, the deformable mirror outputs a wavefront that compensates for the aberration introduced by the tissue, correcting the PSF of the system.

4.2. Imaging beyond 1 TMFP

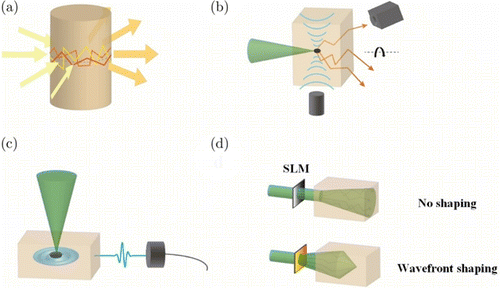

When we go beyond 1 TMFP into the tissue, diffusive photons dominate the light propagation. Very few ballistic photons remain, and their detection may be limited by the noise level of the sensor. Consequently, most microscopes, which depend on ballistic photons, fail to operate in this regime, and new strategies based on either diffusive or ballistic photons have been devised. Direct use of diffusive photons, known as diffuse optical tomography (DOT), was proposed several decades ago (Fig. 11(a)). DOT is a computed tomographic imaging method analogous to ultrasonic tomography or X-ray computed tomography.148,149,150 Projection images are acquired at different positions around the imaging object before reconstruction. Theories that describe light propagation, such as the diffusion equation151 and Monte Carlo simulations,152 are then used as the forward model in the reconstruction, and the inverse problem is solved with various optimization algorithms.153 The gating methods mentioned before can also be combined with DOT to improve its performance.154 However, its spatial resolution remains on the order of millimeters. Although DOT shares a similar principle with ultrasonic tomography, it cannot achieve a comparable spatial resolution, because ultrasound is scattered in tissue roughly two orders of magnitude less than light. This has inspired the introduction of other weakly scattered waves to improve the resolution. Two hybrid imaging modalities that combine ultrasound and light are introduced here: ultrasound-modulated optical tomography (UOT) and photoacoustic imaging (PAI). UOT introduces focused ultrasound into the tissue, where photons passing through the ultrasonic focal zone are tagged owing to the modulation of the refractive index and of the scatterers in this area (Fig. 11(b)).155 This modulates the optical path length of the tagged photons and changes the speckle pattern formed by these photons on the imaging sensor.156 Overall, UOT confines the region from which photons are detected to a size as small as the ultrasonic focal zone. Conversely, PAI confines the detected ultrasound to the ultrasonic focal zone. The photoacoustic effect is based on the optical absorption of biomolecules.157 After near-instantaneous absorption, the excited molecule returns to its ground state and releases the energy as heat, leading to a local temperature rise proportional to the absorption coefficient. A local pressure rise is then generated owing to the uneven temperature distribution in the tissue and can be detected by an ultrasonic transducer (Fig. 11(c)).158 Although an ultrasonic signal, rather than photons as in other optical techniques, is detected from the tissue, PAI should still be categorized as an optical imaging modality, since the contrast originates from optical absorption.159 Deep in tissue, the spatial resolution of both UOT and PAI is ultimately determined by the ultrasound and is at least an order of magnitude better than that of DOT.160 Unfortunately, the wavelength of ultrasound used in biomedicine is tens to hundreds of microns, so the diffraction limit of ultrasound is much larger than that of light. Reaching the optical diffraction limit would require gigahertz ultrasound, which can penetrate only tens of microns into tissue.161 There is still a debate about whether fully diffusive photons can be exploited to recover images with diffraction-limited resolution, given that these photons are not extinguished.162 Several approaches proposed in recent years have demonstrated the capability of focusing through a highly scattering medium (Fig. 11(d)), generally based on phase conjugation,163 focusing optimization,164 and the memory effect.165 Although they have inspired many effective works that improve the performance of various optical microscopes around 1 TMFP, none has genuinely achieved diffraction-limited resolution beyond 1 TMFP.132
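
Two of the quantitative statements above can be checked with a few lines of Python. The sketch computes the acoustic wavelength λ = c / f and the initial photoacoustic pressure p₀ = Γ · μₐ · F. The sound speed of 1540 m/s, the Grüneisen parameter of 0.2, and the example absorption coefficient and fluence are assumed, typical soft-tissue values that do not come from the text, and the pressure relation holds only when the laser pulse is short enough for thermal and stress confinement.

```python
def acoustic_wavelength_um(frequency_hz, sound_speed_m_s=1540.0):
    """Ultrasound wavelength in microns (sound speed is an assumed tissue value)."""
    return sound_speed_m_s / frequency_hz * 1e6


def initial_pressure_pa(gruneisen, mu_a_per_cm, fluence_mj_per_cm2):
    """Initial photoacoustic pressure p0 = Gamma * mu_a * F, in pascals."""
    mu_a_per_m = mu_a_per_cm * 100.0              # 1/cm -> 1/m
    fluence_j_per_m2 = fluence_mj_per_cm2 * 10.0  # mJ/cm^2 -> J/m^2
    return gruneisen * mu_a_per_m * fluence_j_per_m2


if __name__ == "__main__":
    for f_hz in (5e6, 50e6, 1e9):
        print(f_hz / 1e6, "MHz ->", acoustic_wavelength_um(f_hz), "um")
    # Hypothetical absorber: Gamma = 0.2, mu_a = 1 /cm, fluence = 10 mJ/cm^2.
    print("p0 (Pa):", initial_pressure_pa(0.2, 1.0, 10.0))
```

Under these assumptions, megahertz ultrasound has wavelengths of tens to hundreds of microns, and only frequencies around a gigahertz bring the acoustic wavelength down to the micron scale of optical resolution. The pressure function also shows that, for a fixed fluence, the signal scales linearly with the absorption coefficient, which is the source of optical contrast in PAI.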

Fig. 11. In-depth optical imaging of biological tissue beyond 1 TMFP. (a) DOT detects the light emerging from the tissue. (b) UOT uses a camera to observe the speckle pattern with and without ultrasound modulation. (c) PAI uses an ultrasound transducer to receive the photoacoustic signal excited by optical absorption deep in the tissue. (d) Wavefront shaping preloads a compensation phase on the SLM to focus the light.

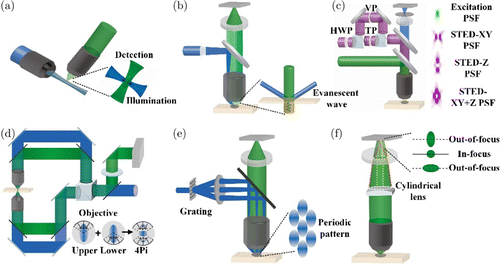

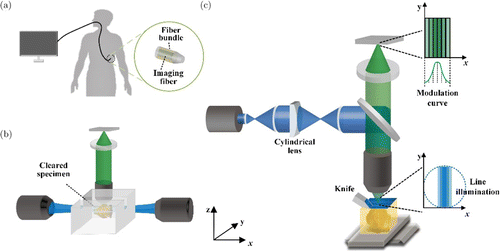

4.3. Imaging inside the whole organ with optical resolution

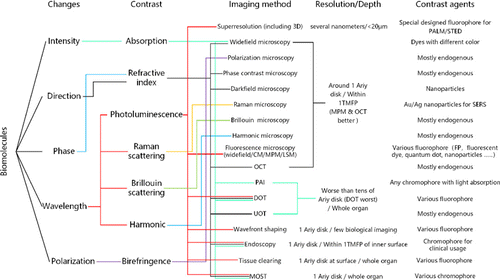

Since diffusive photons cannot yet provide sufficient spatial resolution for single-cell imaging in the whole organ, acquiring ballistic photons is the only choice we have. Endoscopy is a minimally invasive optical imaging modality that uses optical fibers to deliver light into tissue (Fig. 12(a)).166 Thanks to the flexibility and compact size of optical fibers, an endoscope can easily enter hollow tissue tracts. It allows us to directly acquire ballistic photons, free of diffusion, from the inner part of the organ. Most clinical endoscopes are equipped with an imaging fiber bundle that relays superficial images of the digestive tract or blood vessels. The modern endoscope has essentially become a fiber-based optical microscope, facilitated by the rapid progress of precision micromachining. CM, MPM, OCT, and PAI have shown great potential in endoscopy for clinical diagnosis and biomedical research.167,168,169 Some miniaturized endoscopes can even be inserted into solid tissue, like the brain, for deep imaging.170 However, the captured ballistic photons reveal only the structure around the endoscope probe, within a distance of no more than 1 TMFP. The radical solutions for acquiring ballistic photons no longer introduce another type of wave but draw on other disciplines, namely chemistry and mechanical engineering. The straightforward idea is either to change the optical properties of the tissue or to expose the inner part of the tissue. Both techniques have a long history of development and made critical progress in the last decade.171 Optical clearing attempts to use different recipes to make the whole organ transparent (Fig. 12(b)).172,173,174 Considering that lipids in cell membranes are the major origin of turbidity in most organs, complete dissolution and removal of lipids always plays the central part in high-efficiency clearing methods.175,176,177 Refractive index matching is then performed to approach the refractive index of the remaining proteins. Decalcification and decolorization may be necessary for particular organs before the clearing procedure.178 LSM is the imaging method best suited to cleared organs, given its high data throughput and long working distance. However, several insurmountable flaws of optical clearing prevent us from eventually achieving diffraction-limited rendering of the whole organ.179 First, a high NA and a long working distance are physically contradictory requirements for an objective, which forces a trade-off between spatial resolution and organ size. Second, complete transparency requires removing the lipids and fully matching the refractive index, which can hardly be satisfied, especially for organs from large animals.180 Because photons travel a long path from the interior of the organ, any subtle variation in refractive index across the organ leads to serious deterioration of the Airy disk. Therefore, in practice, the best images are always demonstrated near the organ’s surface. The history of mechanical sectioning is much longer than that of optical clearing, dating back to the invention of the first optical microscope. Combined with the optical microscope, it has become the cornerstone of histology and pathology. Once the whole organ is sectioned into slices with a thickness of microns to tens of microns, diffusive photons are avoided. 
Modern mechanical sectioning approaches mainly include the rotary microtome, sledge microtome, and vibratome, which handle tissues prepared with different embedding methods.181,182,183,184 Although optical clearing has been introduced to make thick slices transparent, some unavoidable difficulties, such as tissue loss during sectioning and deformation, hamper the imaging of slices.179,185 Direct optical imaging of the block face after sectioning may alleviate these problems but introduces a much more serious background that ruins the image quality.186,187 Micro-optical sectioning tomography (MOST) first bridged the gap by coupling a line-scanning microscope with sectioning by a diamond knife.188 The stained, resin-embedded sample is sectioned by the knife strip by strip to form the whole slice.189 At the same time, a reflection-mode microscope acquires images on the fly from the knife’s blade during sectioning. Consequently, the axial resolution of MOST is determined by the sectioning thickness, while the lateral resolution still depends on the objective. This design completely circumvents the problem of out-of-focus background and produced a dataset of the whole mouse brain with unprecedented spatial resolution. Subsequent studies replaced the reflection microscope with a confocal or two-photon microscope for imaging fluorescently labeled samples.190,191 Another elegant approach to block-face imaging is controlled by the diffusion of a reagent, which either reactivates the fluorescence or stains the sample with a fluorescent (or non-fluorescent) dye within the superficial layer of the sectioned block.192,193 The essential idea is to limit the thickness of the fluorescence-emitting layer by carefully controlling the diffusion depth of the reagent, so as to achieve a background-free image. The axial resolution of this approach is determined by the diffusion depth. 
Nevertheless, block-face imaging is limited by its relatively low efficiency and its requirement for an ultra-high-quality section surface. Since 1 MFP in tissue is about 100 μm, subsurface imaging of the block can be achieved with optical sectioning microscopes. SIM and TPM were first introduced for this purpose.194,195 Spinning-disk CM and LSM then pushed the imaging speed to the camera limit, at the cost of image quality.196,197 The recently developed line-illumination modulation microscope offers much better optical sectioning than CM together with superior speed, taking advantage of parallel imaging with line scanning (Fig. 12(c)).198 It can be easily combined with a sledge microtome or vibratome for different samples, from the mouse brain to the macaque brain.199 The major criticism of MOST-type methods, or block-face imaging in general, focuses on the destruction of the sample after data acquisition. However, several researchers have validated reuse of the samples by carefully collecting the sectioned slices.200,201 Therefore, MOST methods pave the way for single-cell observation in the whole organ.202,203,204,205,206,207 Finally, we connect the major points discussed in this review in Fig. 13, from the biomolecules to the physics of the interaction between light and tissue and the imaging methods that can measure this interaction. Furthermore, the performance of the imaging methods in terms of resolution and depth, as well as their contrast agents, is presented for a quick overview.

Fig. 12. High-resolution optical imaging inside the whole organ. (a) Endoscopy uses optical fibers to deliver light into and out of the organ without diffusion. (b) Imaging a cleared mouse brain with LSM. (c) Diagram of fMOST. The knife removes the surface of the embedded brain, and the exposed surface is imaged with a line-illumination modulation microscope for much better suppression of the out-of-focus background from the remaining sample. Since imaging is performed on the surface after sectioning, no registration is required, and the distortion of each image is minimal. Moreover, the image quality is preserved without any scattering.

Fig. 13. Connection of the major points discussed in this review.

5. Discussion and Outlook

In this review, we deliberately did not include techniques for observation on the time scale, though not because this is a trivial area. On the contrary, it is critical, since organisms live on the foundation of numerous chemical reactions in a four-dimensional world. For instance, hemoglobin binds ligands such as carbon dioxide, oxygen, and carbon monoxide on a time scale of picoseconds to femtoseconds.208 Meanwhile, depolarization, which plays an essential role in communication among cells, as in the action potential of neurons and the electrical impulse produced by the sinoatrial node, typically happens within milliseconds.209 To observe these dynamic processes, a large number of optical methods have been devised. The pump-probe technique with a femtosecond laser can reveal chemical reaction processes.56 Fluorescence lifetime imaging and fluorescence resonance energy transfer imaging indicate dynamic structural changes of proteins in cells.210,211 Fluorescence correlation spectroscopy can monitor the diffusion of biomolecules in cells.25 Furthermore, fast three-dimensional scanning with dedicated units,212,213,214 parallel imaging designs,215,216,217 and computational compressed imaging218,219,220 tremendously accelerate volumetric imaging. A thorough survey of this area is needed but is far beyond the scope of this review.

Although the diffraction limit has been broken, high-resolution imaging deeper than 1 TMFP inside tissue is still impossible without changing the tissue’s chemical properties or physical form. Organs appear to keep their original shape after optical clearing; however, lipids and many other molecules are lost during the treatment. PAI combined with concepts from super-resolution microscopy has shown potential for better performance at depth.221,222 However, the large ultrasonic focal spot limits the resolution to around 10 microns, which is insufficient for single-cell observation.223 Controlling beam propagation in tissue may be the final solution to this problem. In practice, however, tissues tend to decorrelate the speckles within milliseconds. Moreover, the available modulators are too slow and have too few degrees of freedom to compensate for multiple scattering. Furthermore, biological tissues consist of various scatterers with different sizes and refractive indices and are much more complicated than diffusers, making these methods impractical so far.132 There is still a long way to go before we achieve the ultimate goal.

With the three-dimensional rendering of many mouse organs accomplished, the organs of nonhuman primates and humans have become the next target, despite several obstacles. Given that the volume of a mouse brain is about 1 cm³, imaging it with a pixel pitch of 0.5 microns yields 8 terapixels and takes about four days of data acquisition. The macaque brain, 200 times bigger than the mouse brain, takes two months to image.199 The human brain could even demand a year, which makes such research hard to carry out.224,225 Faster imaging with LSM has been demonstrated for a macaque brain, but at the cost of image quality because of the limited Rayleigh length of the light sheet.185 Advanced LSM based on Bessel beams or lattice light sheets could be introduced to further improve data acquisition efficiency.226,227 Besides retrieving each pixel one by one, many approaches based on compressed sensing theory have shown compressed imaging capability, with or without sparse priors. Light-field microscopy uses a lens array to observe the sample from different angles for reconstruction. Combined with cutting-edge neural networks, it can recover three-dimensional data approaching the diffraction limit.228,229 Holography with a phase retrieval algorithm can acquire phase and intensity images simultaneously and reconstruct images at different depths.230,231 These sparse sampling techniques could fundamentally speed up data acquisition for large organs.
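
The data volume quoted above for the mouse brain can be checked with a short back-of-envelope calculation. The sketch below is only illustrative: it assumes an isotropic sampling pitch in all three dimensions, and the function name `voxel_count` and the 2 bytes per sample (16-bit data) are hypothetical choices that do not come from the cited work.

```python
# Back-of-envelope check of the mouse-brain data volume.
# Assumptions (not from the cited studies): isotropic sampling pitch,
# 16-bit samples, and acquisition spread evenly over four days.

def voxel_count(volume_cm3: float, pitch_um: float) -> float:
    """Number of samples needed to cover volume_cm3 at an isotropic pitch."""
    um3_per_cm3 = 1e4 ** 3  # 1 cm = 10^4 microns
    return volume_cm3 * um3_per_cm3 / pitch_um ** 3

# Mouse brain: about 1 cm^3 sampled every 0.5 microns.
mouse_voxels = voxel_count(volume_cm3=1.0, pitch_um=0.5)

# Raw size under the assumed 2 bytes per sample.
bytes_per_sample = 2
raw_terabytes = mouse_voxels * bytes_per_sample / 1e12

# Average sampling rate implied by a four-day acquisition.
acquisition_seconds = 4 * 24 * 3600
implied_rate = mouse_voxels / acquisition_seconds

print(f"samples: {mouse_voxels:.1e}")
print(f"raw size: {raw_terabytes:.0f} TB (assuming 16-bit samples)")
print(f"implied average rate: {implied_rate:.2e} samples/s")
```

Under these assumptions, the count is 8 × 10¹² samples, consistent with the 8 terapixels stated in the text, and a four-day acquisition corresponds to an average of a few tens of millions of samples per second.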

Furthermore, enriching the observation of cells with high-content imaging meets the demands of specific research on the organ.232 As described earlier, investigating the nucleic acids, lipids, and proteins in cells provides abundant information about them. With multiwavelength imaging, we can label various molecules with fluorophores of different colors. Cutting-edge fluorescence microscopes can easily acquire these results with the corresponding filter sets, without complicated system modification.233 Other optical modalities, such as photoacoustic imaging,234 SRS microscopy,235 and polarization microscopy,236 also introduce different contrasts from cells and reveal different biomolecules. In addition, combining optical imaging with other modalities, such as X-ray computed tomography,237 magnetic resonance imaging,4 and nuclear imaging,238 will reveal multi-scale information that correlates with clinical findings. Finally, omics data from RNA,239 proteins,240 and metabolites241 should also be included for the in-depth information they provide about cells.2

Building on the study of optical contrast in tissues, optical imaging has taken a significant step forward in spatial resolution over the last few decades by breaking the diffraction limit, while the diffusion limit can be partially alleviated at the cost of destructive imaging of the whole organ. There is no doubt that optical imaging that completely breaks through the diffusion limit is still in its infancy. But with the progress in optical labeling and artificial intelligence, we are entering a new era of single-cell investigation, from slide to whole organ, from rodent to human, and from static to dynamic.

Acknowledgments

This work was supported by the National Science and Technology Innovation 2030 (Grant No. 2021ZD0200104) and the National Natural Science Foundation of China (Grant No. 81871082). The authors would like to thank Prof. Yong Deng and Zhuoyao Huang for text editing. Xiaoquan Yang and Tao Jiang contributed equally to this work.

Conflicts of Interest

The authors declare no conflict of interest.